TikTok's new 'wellness' features, which reward users for managing screen time, are a form of corporate misdirection. By gamifying self-control, the platform shifts the blame for addiction from its intentionally engaging algorithm to the user's lack of willpower, a tactic compared to giving someone cocaine and then a badge for not using it.

Related Insights

Parents blaming technology for their children's screen habits are avoiding self-reflection. The real issue is parental hypocrisy and a societal lack of accountability. If you genuinely believe screens are harmful, you have the power to enforce limits rather than blaming the technology you often use for your own convenience.

Modern society turns normal behaviors like eating or gaming into potent drugs by manipulating four factors: quantity (more of it), access (always available), potency (more intense), and novelty (constantly new). This framework explains how behavioral addictions are engineered, hijacking the brain’s reward pathways just like chemical substances.

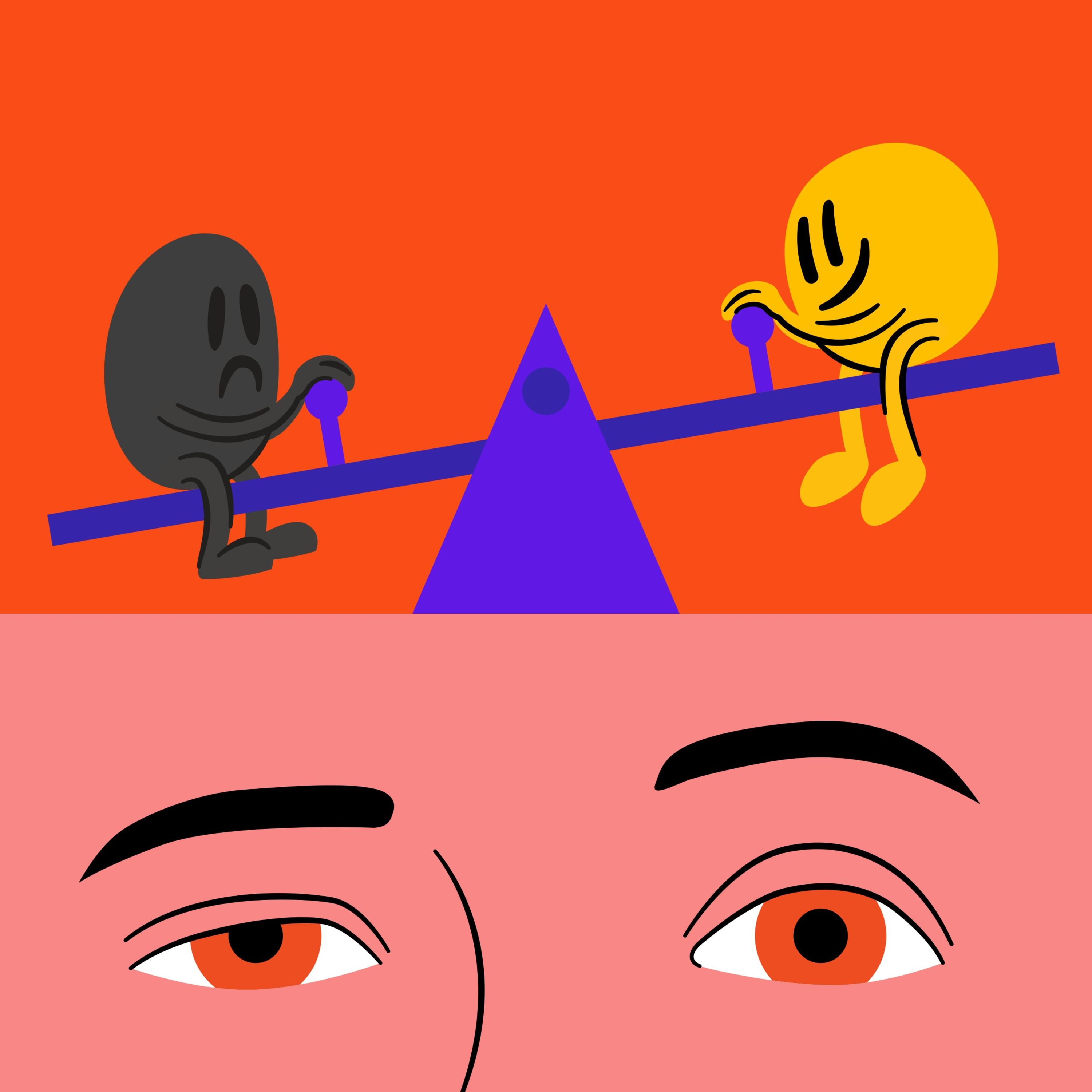

Mindless scrolling seeks a "fake" dopamine hit from passive consumption. By contrast, structured, intentional engagement, such as sending five meaningful messages, creates "real" dopamine from accomplishment and relationship building. This purposeful activity can paradoxically reduce overall screen time by satisfying the brain's reward system more effectively.

Making high-stakes products (finance, health) easy and engaging risks encouraging overuse or uninformed decisions. The solution isn't restricting access but embedding education into the user journey to empower informed choices without being paternalistic.

Deleting an app like Instagram for many months causes its algorithm to lose understanding of your interests. Upon returning, the feed is generic and unengaging, creating a natural friction that discourages re-addiction. A short, week-long break, however, triggers aggressive re-engagement tactics from the platform.

The modern online discourse around therapy has devolved from a tool for healing into a competitive sport of self-optimization. It uses buzzwords to reframe bad days as generational trauma and sells subscription-based "cures," ultimately making people weaker and more divided.

Social media's business model created a race for user attention. AI companions and therapists are creating a more dangerous "race for attachment." This incentivizes platforms to deepen intimacy and dependency, encouraging users to isolate themselves from real human relationships, with potentially tragic consequences.

The core business model of dominant tech and AI companies is not just about engagement; it's about monetizing division and isolation. Trillions in shareholder value are now directly tied to separating young people from each other and their families, creating an "asocial, asexual youth," which is an existential threat.

The algorithmic shift on platforms like Instagram, YouTube, and Facebook towards short-form video has leveled the playing field. New creators can gain massive reach with a single viral video, an opportunity not seen in over a decade, akin to the early days of Facebook.

The narrative that AI-driven free time will spur creativity is flawed. Evidence suggests more free time leads to increased digital addiction, anxiety, and poor health. The correct response to AI's rise is not deeper integration, but deliberate disconnection to preserve well-being and genuine creativity.
