All data inputs for AI are inherently biased (e.g., bullish management, bearish former employees). The most effective approach is not to de-bias the inputs but to use AI to compare and contrast these biased perspectives to form an independent conclusion.
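One way to put this into practice is to hand the model every source together with its expected lean and ask it to reconcile them explicitly. The sketch below is only an illustration: the `Source` class, the `build_contrast_prompt` helper, and the instruction wording are all assumptions, not part of any particular tool.

```python
from dataclasses import dataclass


@dataclass
class Source:
    label: str  # who is speaking, e.g. "management"
    lean: str   # the bias you expect from them, e.g. "bullish"
    text: str   # the raw excerpt


def build_contrast_prompt(question: str, sources: list[Source]) -> str:
    """Assemble one prompt that shows every source next to its expected lean."""
    blocks = [
        f"[{i}] {s.label} (expected lean: {s.lean})\n{s.text}"
        for i, s in enumerate(sources, start=1)
    ]
    instructions = (
        "Each source below has a known lean. Do not average them.\n"
        "1. List the claims on which the sources agree.\n"
        "2. List the claims on which they conflict, citing source numbers.\n"
        "3. For each conflict, say which source has more incentive to overstate.\n"
        "4. Give your own conclusion and the evidence that would change it."
    )
    return f"Question: {question}\n\n{instructions}\n\n" + "\n\n".join(blocks)


prompt = build_contrast_prompt(
    "Is the company's growth sustainable?",
    [
        Source("management", "bullish", "We expect demand to keep accelerating."),
        Source("former employee", "bearish", "Churn was rising when I left."),
    ],
)
print(prompt)
```

Labeling the lean up front keeps the bias visible to the model instead of trying to scrub it out of the inputs.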

Related Insights

Leaders are often trapped "inside the box" of their own assumptions when making critical decisions. By providing AI with context and assigning it an expert role (e.g., "world-class chief product officer"), you can prompt it to ask probing questions that reveal your biases and lead to more objective, defensible outcomes.

While AI can inherit biases from training data, those datasets can be audited, benchmarked, and corrected. In contrast, uncovering and remedying the complex cognitive biases of a human judge is far more difficult and less systematic, making algorithmic fairness a potentially more solvable problem.

Instead of accepting a single answer, prompt the AI to generate multiple options and then argue the pros and cons of each. This "debating partner" technique forces the model to stress-test its own logic, leading to more robust and nuanced outputs for strategic decision-making.
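A "debating partner" prompt of this kind might be templated as follows. This is a minimal sketch: the function name `build_debate_prompt` and the exact step wording are hypothetical and should be tuned to the model you use.

```python
def build_debate_prompt(decision: str, n_options: int = 3) -> str:
    """Ask for several options and a for/against case on each before any verdict."""
    if n_options < 2:
        raise ValueError("a debate needs at least two options")
    steps = [
        f"Propose {n_options} distinct options for the decision below.",
        "For each option, argue the strongest case for it.",
        "For each option, argue the strongest case against it.",
        "Only then recommend one option and name the trade-off you accepted.",
    ]
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return f"{numbered}\n\nDecision: {decision}"


print(build_debate_prompt("Should we sunset the legacy pricing tier?"))
```

Putting the recommendation last is a deliberate choice here: the model has to lay out the arguments before it commits to an answer.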

After an initial analysis, use a "stress-testing" prompt that forces the LLM to verify its own findings, check for contradictions, and correct its mistakes. This verification step catches errors before they reach your conclusions and gives you firmer grounds for trusting the AI's output.
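The two-pass pattern can be sketched as a small wrapper around whatever model client you use. Everything named here is an assumption for illustration: `ask` stands in for any function that sends a prompt and returns text, and `VERIFY_TEMPLATE` is one possible wording, not a standard prompt.

```python
from typing import Callable

VERIFY_TEMPLATE = (
    "Review the analysis below against the source material.\n"
    "- Flag any claim the sources do not support.\n"
    "- Flag any two statements that contradict each other.\n"
    "- Return a corrected version, then list what you changed.\n\n"
    "Sources:\n{sources}\n\nAnalysis:\n{analysis}"
)


def analyze_then_verify(
    ask: Callable[[str], str], task: str, sources: str
) -> dict[str, str]:
    """Run the analysis once, then feed the draft back for a verification pass."""
    draft = ask(f"{task}\n\nSources:\n{sources}")
    verified = ask(VERIFY_TEMPLATE.format(sources=sources, analysis=draft))
    return {"draft": draft, "verified": verified}


# Stand-in for a real model call, so the sketch runs without any API key.
def fake_ask(prompt: str) -> str:
    return f"(model reply to a {len(prompt)}-character prompt)"


result = analyze_then_verify(fake_ask, "Summarize the key risks.", "Interview notes...")
print(result["verified"])
```

Keeping both the draft and the verified version makes it easy to see what the second pass actually changed.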

Go beyond using AI for data synthesis. Leverage it as a critical partner to stress-test your strategic opinions and assumptions. AI can challenge your thinking, identify conflicts in your data, and help you refine your point of view, ultimately hardening your final plan.

Treat AI as a critique partner. After synthesizing research, explain your takeaways and then ask the AI to analyze the same raw data to report on patterns, themes, or conclusions you didn't mention. This is a powerful method for revealing analytical blind spots.

The strength of X's Community Notes algorithm is that it surfaces a fact-check only when users who usually disagree with one another both rate it as helpful. This mechanism filters for cross-partisan consensus rather than relying on the judgment of a single group of fact-checkers.
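The agreement rule described above can be illustrated with a deliberately simplified toy. It is not the production algorithm: it assumes each rater already carries an explicit group label, whereas the real system works from rating history. The function name, thresholds, and group labels are all hypothetical.

```python
from collections import defaultdict


def bridged_notes(ratings, min_share=0.6, min_raters=2):
    """Return note ids rated helpful by every group.

    ratings: iterable of (note_id, rater_group, is_helpful) tuples.
    """
    # tally[note_id][group] = [helpful_count, total_count]
    tally = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    groups = set()
    for note_id, group, is_helpful in ratings:
        groups.add(group)
        counts = tally[note_id][group]
        counts[0] += int(is_helpful)
        counts[1] += 1

    shown = []
    for note_id, by_group in tally.items():
        # Every group needs enough raters AND a high enough helpful share.
        agreed = all(
            by_group[g][1] >= min_raters
            and by_group[g][0] / by_group[g][1] >= min_share
            for g in groups
        )
        if agreed:
            shown.append(note_id)
    return sorted(shown)


ratings = [
    ("note_a", "left", True), ("note_a", "left", True),
    ("note_a", "right", True), ("note_a", "right", True),
    ("note_b", "left", True), ("note_b", "left", True),
    ("note_b", "right", False), ("note_b", "right", False),
]
print(bridged_notes(ratings))  # ['note_a']
```

In this toy, `note_b` is popular with one group but is still withheld, because approval from a single side is not enough.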

AI models tend to be overly optimistic. To get a balanced market analysis, explicitly instruct AI research tools like Perplexity to act as a "devil's advocate." This helps uncover risks and challenge assumptions, and it makes it easier for product managers to say "no" to weak ideas quickly.

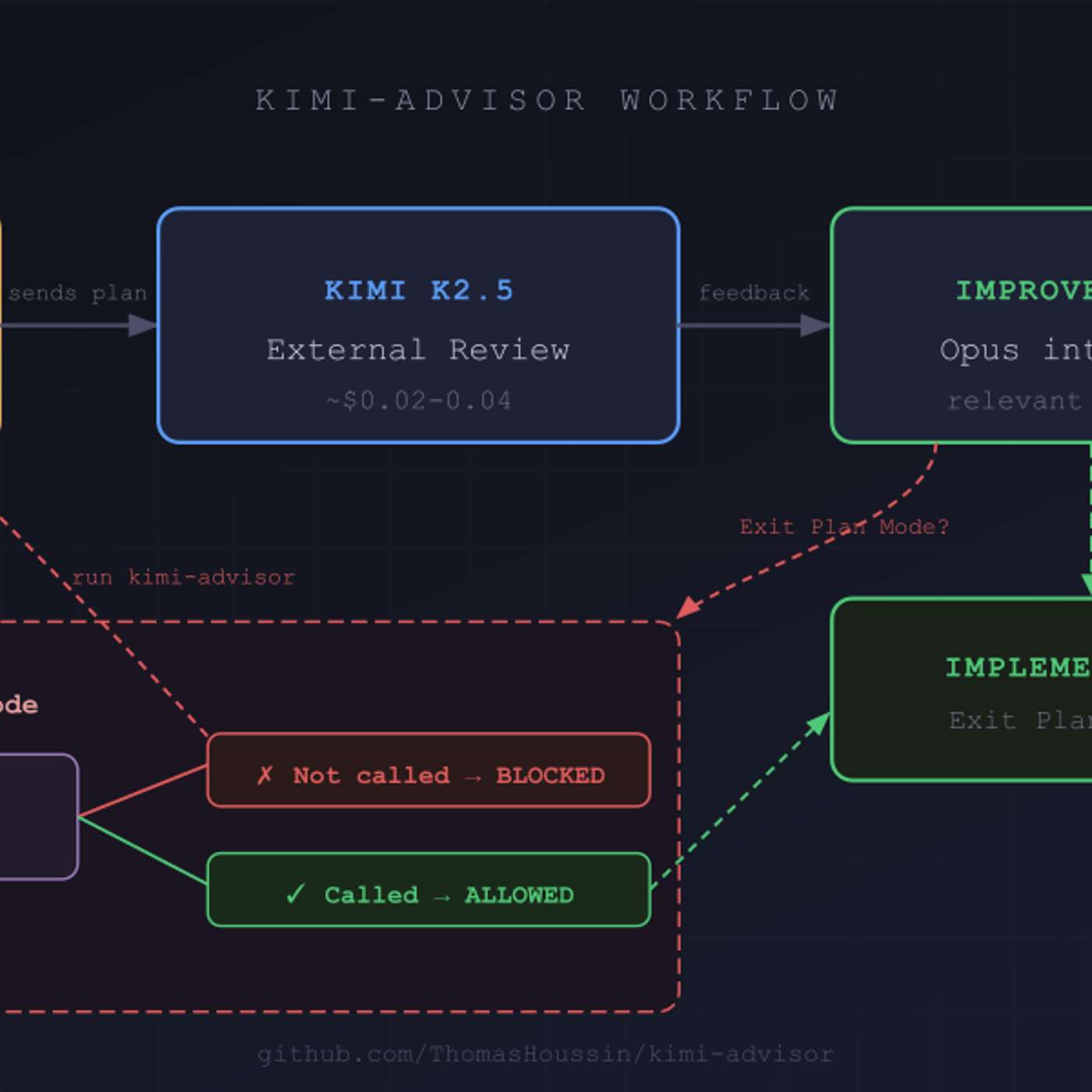

To improve code quality, use a secondary AI model from a different provider (e.g., Moonshot AI's Kimi) to review plans generated by a primary model (e.g., Anthropic's Claude). This introduces cognitive diversity and avoids the biases shared within a single model family, which makes the review more robust.
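The plan, review, and revise loop can be sketched without committing to any vendor SDK. In this illustration `primary` and `reviewer` are hypothetical callables that take a prompt and return text; in practice each would wrap a different provider's client, and the prompt wording is only an assumption.

```python
from typing import Callable

Model = Callable[[str], str]  # any function that maps a prompt to a reply


def cross_review(primary: Model, reviewer: Model, task: str) -> dict[str, str]:
    """Have one model draft a plan, a second critique it, and the first revise."""
    plan = primary(f"Write an implementation plan for: {task}")
    critique = reviewer(
        "You did not write this plan. Review it for bugs, missing edge cases, "
        f"and unstated assumptions.\n\nTask: {task}\n\nPlan:\n{plan}"
    )
    revised = primary(
        "Revise your plan using this external review.\n\n"
        f"Plan:\n{plan}\n\nReview:\n{critique}"
    )
    return {"plan": plan, "critique": critique, "revised": revised}


# Stubs so the sketch runs offline; swap in real clients from two providers.
def stub_primary(prompt: str) -> str:
    return "revised plan" if prompt.startswith("Revise") else "initial plan"


def stub_reviewer(prompt: str) -> str:
    return "The plan ignores empty input."


print(cross_review(stub_primary, stub_reviewer, "add CSV export"))
```

Returning all three artifacts keeps the reviewer's critique on record, so a human can check whether the revision actually addressed it.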

A comprehensive approach to mitigating AI bias requires addressing three separate components. First, de-bias the training data before it's ingested. Second, audit and correct biases inherent in pre-trained models. Third, implement human-centered feedback loops during deployment to allow the system to self-correct based on real-world usage and outcomes.