The genius of X's Community Notes algorithm is that it surfaces a fact-check only when users who usually disagree, as inferred from their past rating behavior and often tracking ideological lines, agree that it is helpful. This mechanism actively filters for non-partisan, consensus-based truth rather than relying on biased fact-checkers.
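A minimal sketch of this "bridging" idea follows, assuming each rater has already been assigned a viewpoint group. The production Community Notes scorer does not work from fixed labels like this; it infers viewpoints from rating history using matrix factorization. The function name `should_show_note` and the thresholds `min_per_group` and `min_helpful_share` are hypothetical, chosen only for illustration.

```python
from collections import defaultdict


def should_show_note(ratings, rater_group, min_per_group=3, min_helpful_share=0.6):
    """Return True only if every viewpoint group that rated the note found it helpful.

    ratings:      list of (rater_id, is_helpful) pairs for one note.
    rater_group:  dict mapping rater_id -> viewpoint label (assumed known here;
                  the real system infers viewpoints from rating history).
    min_per_group, min_helpful_share: hypothetical thresholds for this sketch.
    """
    tallies = defaultdict(lambda: [0, 0])  # group -> [helpful_count, total_count]
    for rater_id, is_helpful in ratings:
        group = rater_group.get(rater_id)
        if group is None:
            continue  # raters without an assigned viewpoint are skipped in this sketch
        tallies[group][1] += 1
        if is_helpful:
            tallies[group][0] += 1

    # Consensus requires agreement across at least two distinct viewpoints.
    if len(tallies) < 2:
        return False
    for helpful, total in tallies.values():
        if total < min_per_group or helpful / total < min_helpful_share:
            return False
    return True


groups = {"a1": "A", "a2": "A", "a3": "A", "b1": "B", "b2": "B", "b3": "B"}
one_sided = [("a1", True), ("a2", True), ("a3", True),
             ("b1", False), ("b2", False), ("b3", False)]
bridging = [(rater, True) for rater in groups]
print(should_show_note(one_sided, groups))  # False
print(should_show_note(bridging, groups))   # True
```

The per-group check is what separates this from a majority vote: a note with many helpful ratings from one group still stays hidden if the other group rejects it.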

Related Insights

AI models trained on sources like Wikipedia inherit their biases. Wikipedia's sourcing guidelines deprecate or restrict citations from several leading conservative publications, so these viewpoints are systematically underrepresented in training data, creating an inherent left-leaning bias in the resulting AI models.

The feeling of deep societal division is an artifact of platform design. Algorithms amplify extreme voices because they generate engagement, creating a false impression of widespread polarization. In reality, once those amplified voices are set aside, most people's views on contentious topics are quite moderate.

To combat confirmation bias, withhold the final results of an experiment or analysis until the entire team agrees the methodology is sound. This prevents people from subconsciously accepting expected outcomes while overly scrutinizing unexpected ones, leading to more objective conclusions.

Journalist Casey Newton uses AI tools not to write his columns, but to fact-check them after they're written. He finds that feeding his completed text into an LLM is a surprisingly effective way to catch factual errors, a capability that reflects a significant improvement in models over the past year.

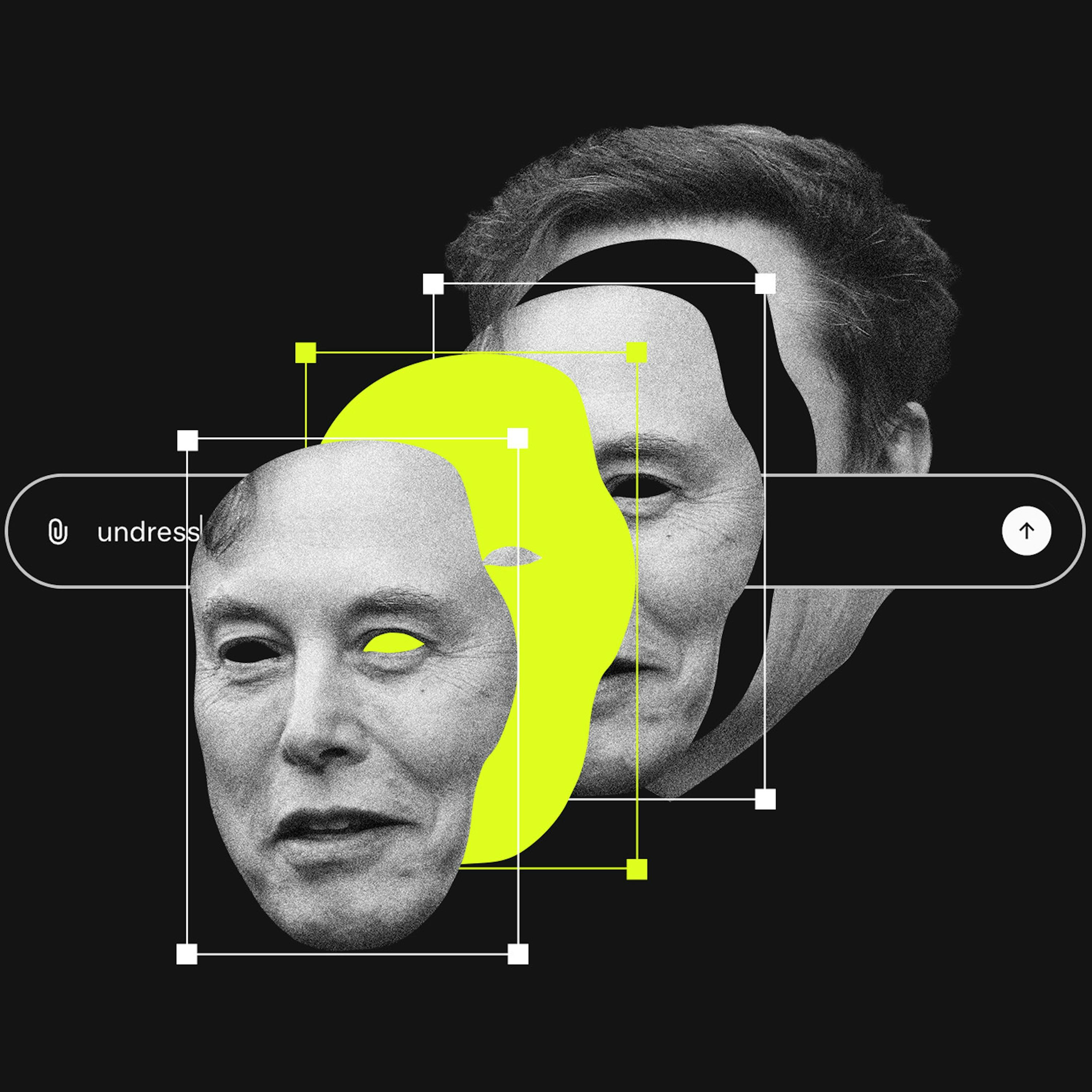

We are months away from AI that can create a media feed designed to exclusively validate a user's worldview while ignoring all contradictory information. This will intensify confirmation bias to an extreme, making rational debate impossible as individuals inhabit completely separate, self-reinforced realities with no common ground or shared facts.

The tension between left and right political ideologies is not a flaw but a feature, analogous to a "swarm of AIs" with competing interests. This dynamic creates a natural balance and equilibrium, preventing any single, potentially destructive ideology from going "off the rails" and dominating society completely.

As major platforms abdicate trust and safety responsibilities, demand grows for user-centric solutions. This fuels interest in decentralized networks and "middleware" that empower communities to set their own content standards, a move away from centralized, top-down platform moderation.

The AI debate is becoming polarized as influencers and politicians present subjective beliefs with high conviction, treating them as non-negotiable facts. This hinders balanced, logic-based conversations. It is crucial to distinguish testable beliefs from objective truths to foster productive dialogue about AI's future.

A two-step analytical method to vet information: First, distinguish objective (multi-source, verifiable) facts from subjective (opinion-based) claims. Second, assess claims on a matrix of probability and source reliability. An improbable claim from a low-reliability source, as with many conspiracy theories, should be treated as highly unlikely to be true.
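The two steps can be sketched as a small classifier plus a 2x2 lookup table. Everything here is illustrative: the `Claim` fields, the 0.5 cutoff, the "at least two independent sources" reading of multi-source, and the labels for three of the four matrix cells are assumptions. Only the low-probability, low-reliability cell ("highly unlikely") comes from the method described above.

```python
from dataclasses import dataclass
from enum import Enum


class Kind(Enum):
    OBJECTIVE = "objective"    # multi-source and verifiable
    SUBJECTIVE = "subjective"  # opinion-based or uncorroborated


@dataclass
class Claim:
    text: str
    independent_sources: int   # hypothetical: count of independent corroborating sources
    verifiable: bool           # can the claim be checked against evidence?
    probability: float         # 0..1, how plausible the claim is on its face
    source_reliability: float  # 0..1, track record of whoever is making it


def classify(claim: Claim, min_sources: int = 2) -> Kind:
    """Step 1: separate objective facts from subjective claims.

    Assumption: 'multi-source' means at least `min_sources` independent sources.
    """
    if claim.verifiable and claim.independent_sources >= min_sources:
        return Kind.OBJECTIVE
    return Kind.SUBJECTIVE


# Step 2: probability x reliability matrix, keyed by (high_probability, high_reliability).
# Only the (False, False) cell is stated in the text; the other labels are assumptions.
MATRIX = {
    (True, True): "likely",
    (True, False): "plausible, verify independently",
    (False, True): "surprising, seek corroboration",
    (False, False): "highly unlikely",
}


def assess(claim: Claim, cutoff: float = 0.5) -> str:
    """Step 2: place the claim in one cell of the 2x2 matrix."""
    high_probability = claim.probability >= cutoff
    high_reliability = claim.source_reliability >= cutoff
    return MATRIX[(high_probability, high_reliability)]


rumor = Claim("The moon landing was staged", 0, False, 0.02, 0.1)
print(classify(rumor).value, "/", assess(rumor))  # subjective / highly unlikely
```

A real assessment would treat probability and reliability as continuous judgments rather than a single cutoff; the 2x2 split simply mirrors the matrix framing in the text.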

While platforms spent years developing complex AI for content moderation, X implemented a simple transparency feature showing the country an account is based in. This immediately exposed foreign troll farms posing as domestic political actors, proving that simple, direct transparency can be more effective at combating misinformation than opaque, complex technological solutions.