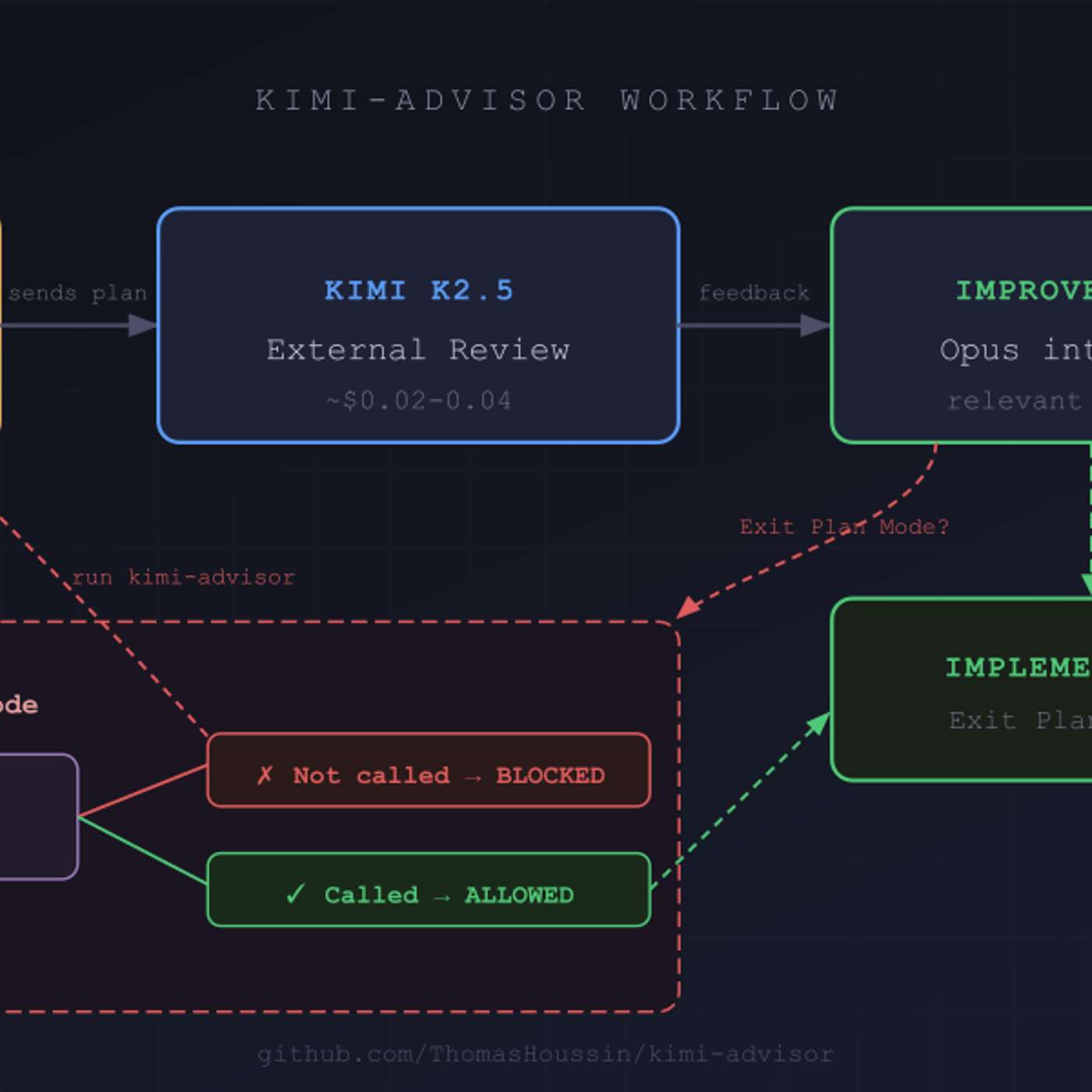

Developers often skip optional quality checks. To make AI-powered plan reviews consistent, implement a mandatory hook: a script that blocks the next step in the workflow (e.g., exiting plan mode) until it can verify that the external AI review has been completed. This builds compliance into the workflow itself, so every plan gets a quality check.
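One way such a hook could be sketched, assuming the external review step writes a small JSON "receipt" containing a SHA-256 hash of the plan it reviewed. The receipt format, the field names `plan_sha256` and `reviewer`, and the blocking exit code are all assumptions made for illustration; check your tool's hook documentation for the convention it actually uses.

```python
import hashlib
import json
import sys
from pathlib import Path
from typing import List, Tuple

EXIT_ALLOW = 0
# Assumption: the host tool treats this exit code as "block the action".
EXIT_BLOCK = 2


def plan_digest(plan_text: str) -> str:
    """Return a stable fingerprint of the plan text."""
    return hashlib.sha256(plan_text.encode("utf-8")).hexdigest()


def review_is_valid(plan_path: Path, receipt_path: Path) -> Tuple[bool, str]:
    """Check that a review receipt exists and refers to the current plan."""
    try:
        plan_text = plan_path.read_text(encoding="utf-8")
    except OSError:
        return False, f"cannot read plan file: {plan_path}"
    try:
        receipt = json.loads(receipt_path.read_text(encoding="utf-8"))
    except OSError:
        return False, "no review receipt found; run the external review first"
    except json.JSONDecodeError:
        return False, "review receipt is not valid JSON"
    if not isinstance(receipt, dict):
        return False, "review receipt must be a JSON object"
    # A receipt for an older version of the plan does not count.
    if receipt.get("plan_sha256") != plan_digest(plan_text):
        return False, "receipt does not match the current plan; re-run the review"
    if not str(receipt.get("reviewer", "")).strip():
        return False, "receipt does not name a reviewer"
    return True, "review verified"


def main(argv: List[str]) -> int:
    """Return the exit code the hook should finish with."""
    if len(argv) != 3:
        print("usage: hook.py PLAN_FILE RECEIPT_FILE", file=sys.stderr)
        return EXIT_BLOCK
    ok, reason = review_is_valid(Path(argv[1]), Path(argv[2]))
    if not ok:
        print(f"Blocked: {reason}", file=sys.stderr)
        return EXIT_BLOCK
    return EXIT_ALLOW
```

To run this as a script, end the file with `sys.exit(main(sys.argv))`. Tying the receipt to a hash of the plan is what makes the review verifiable here: editing the plan after the review invalidates the receipt, so the hook blocks until the review is repeated.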

An external AI reviewer provides more than high-level feedback; it can identify specific, critical technical flaws. In one case, a reviewer AI caught a TOCTOU (time-of-check to time-of-use) race condition, suboptimal message ordering for LLM processing, and incorrect file type classifications. The primary AI then incorporated the feedback and fixed all three.
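For readers unfamiliar with the first of those flaws, a TOCTOU bug arises when code checks a condition and then acts on it in a separate step, leaving a window in which the condition can change. The sketch below is a generic illustration, not the code from the case described; both function names are hypothetical.

```python
from typing import Optional


def read_config_racy(path: str) -> Optional[str]:
    """Check-then-use: the file can vanish or be swapped between the two steps."""
    import os

    if os.path.exists(path):  # time of check
        with open(path, encoding="utf-8") as f:  # time of use
            return f.read()
    return None


def read_config_safe(path: str) -> Optional[str]:
    """Use directly and handle failure, so there is no gap to exploit."""
    try:
        with open(path, encoding="utf-8") as f:
            return f.read()
    except FileNotFoundError:
        return None
```

Both functions behave the same when nothing else touches the file. They differ only under concurrent modification, which is why this class of bug is easy to miss in a single-pass review.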

To improve code quality, use a secondary AI model from a different provider (e.g., Moonshot AI's Kimi) to review plans generated by a primary model (e.g., Anthropic's Claude). This introduces cognitive diversity: a reviewer from a different model family is less likely to share the primary model's biases, so it is more likely to catch problems the primary model missed.
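The cross-provider rule can be enforced in code rather than left to convention. The following is a minimal sketch under stated assumptions: `Model`, `review_plan`, and the `ask` callable are hypothetical names, and `ask` stands in for whatever client call your provider's SDK actually exposes.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Model:
    provider: str                # e.g. "Anthropic" or "Moonshot AI"
    name: str                    # placeholder label, not a real model ID
    ask: Callable[[str], str]    # hypothetical: prompt in, response text out


def review_plan(plan: str, author: Model, reviewer: Model) -> str:
    """Send the plan to a reviewer, refusing reviewers from the author's provider."""
    if reviewer.provider.strip().lower() == author.provider.strip().lower():
        raise ValueError(
            "reviewer must come from a different provider than the author"
        )
    prompt = (
        "Review the following implementation plan. "
        "List concrete technical flaws, ordered by severity.\n\n" + plan
    )
    return reviewer.ask(prompt)
```

Keeping the provider call behind a plain callable means the same check works with any SDK, and the reviewer can be swapped without touching the review logic.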