When pre-training a large multimodal model, including small samples from many diverse modalities (like LiDAR or MRI data) is highly beneficial. This "tempts" the model, giving it an awareness that these data types exist and have structure. This initial exposure makes the model more adaptable for future fine-tuning on those specific domains.
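As a rough illustration of what "small samples from many diverse modalities" could look like in a data loader, here is a minimal sketch of weighted mixture sampling. The modality names, the mixture weights, and the `sample_modalities` helper are all assumptions made for this example; the source does not specify any proportions.

```python
import random
from collections import Counter

# Hypothetical mixture weights: common modalities dominate, while rare
# ones (LiDAR, MRI) receive a small but nonzero share of each batch.
MIXTURE = {"text": 0.70, "image": 0.25, "lidar": 0.03, "mri": 0.02}


def sample_modalities(mixture, n, seed=0):
    """Draw n modality labels in proportion to the mixture weights."""
    rng = random.Random(seed)
    names = list(mixture)
    weights = [mixture[name] for name in names]
    return rng.choices(names, weights=weights, k=n)


# Each label would select which dataset the next training example comes from.
batch = sample_modalities(MIXTURE, 10_000)
counts = Counter(batch)
for name in MIXTURE:
    print(f"{name}: {counts[name]}")
```

The point of the sketch is only that rare modalities appear at a low, steady rate throughout pre-training rather than being absent until fine-tuning.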

Related Insights

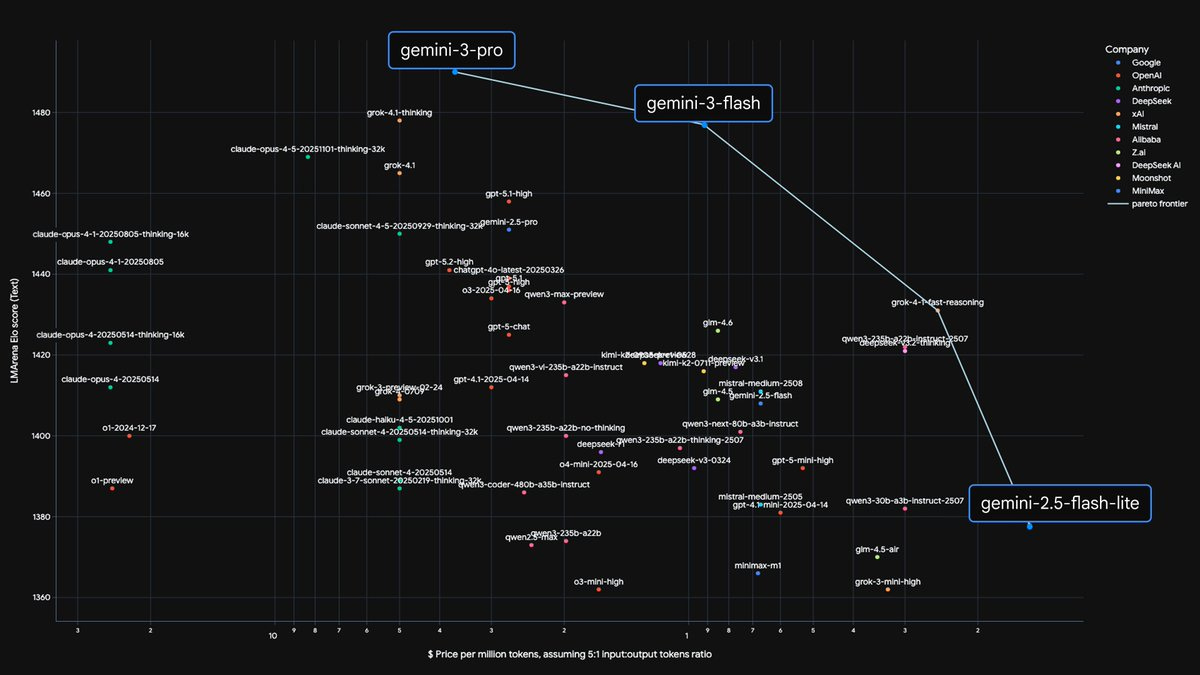

The AI industry is hitting data limits for training massive, general-purpose models. The next wave of progress will likely come from highly specialized models built for specific domains. Like DeepMind's AlphaFold, such models can achieve superhuman performance on narrow tasks.

The path to a general-purpose AI model is not to tackle the entire problem at once. A more effective strategy is to start with a highly constrained domain, like generating only Minecraft videos. Once the model works reliably in that narrow distribution, incrementally expand the training data and complexity, using each step as a foundation for the next.

Instead of building a single, monolithic AI agent that uses a vast, unstructured dataset, a more effective approach is to create multiple small, precise agents. Each agent is trained on a smaller, more controllable dataset specific to its task, which significantly reduces the risk of unpredictable interpretations and hallucinations.

For creating specific image editing capabilities with AI, a small, curated dataset of "before and after" examples yields better results than a massive, generalized collection. This strategy prioritizes data quality and relevance over sheer volume, leading to more effective model fine-tuning for niche tasks.

Early AI models advanced by scraping web text and code. The next revolution, especially in "AI for science," requires overcoming a major hurdle: consolidating and formatting the world's vast but fragmented scientific data across disciplines like chemistry and materials science for model training.

Microsoft's research found that training smaller models on high-quality, synthetic, and carefully filtered data produces better results than training larger models on unfiltered web data. Data quality and curation, not just model size, are the new drivers of performance.

The most fundamental challenge in AI today is not scale or architecture, but the fact that models generalize dramatically worse than humans. Solving this sample efficiency and robustness problem is the true key to unlocking the next level of AI capabilities and real-world impact.

Fine-tuning an AI model is most effective when you use high-signal data. The best source for this is the set of difficult examples where your system consistently fails. The processes of error analysis and evaluation naturally curate this valuable dataset, making fine-tuning a logical and powerful next step after prompt engineering.
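One way to picture how error analysis "curates" a fine-tuning set is the sketch below. It assumes evaluation results are stored as records with repeated outputs per prompt; the `EvalRecord` type, the exact-match check, and the 0.5 threshold are hypothetical choices for illustration, not a prescribed method.

```python
from dataclasses import dataclass


@dataclass
class EvalRecord:
    prompt: str
    expected: str
    outputs: list  # model outputs from repeated runs of the same prompt


def failure_rate(record):
    """Fraction of runs whose output does not match the expected answer."""
    if not record.outputs:
        return 0.0
    wrong = sum(1 for out in record.outputs
                if out.strip() != record.expected.strip())
    return wrong / len(record.outputs)


def select_hard_examples(records, min_failure_rate=0.5):
    """Keep prompts the system fails consistently, as fine-tuning pairs."""
    return [
        {"prompt": r.prompt, "completion": r.expected}
        for r in records
        if failure_rate(r) >= min_failure_rate
    ]


records = [
    EvalRecord("2+2?", "4", ["4", "4", "4"]),             # always correct
    EvalRecord("Capital of Australia?", "Canberra",
               ["Sydney", "Sydney", "Canberra"]),         # mostly wrong
]
hard = select_hard_examples(records)
print(hard)
```

In practice the match check would usually be a task-specific grader rather than string equality; the structure of the filter stays the same.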

Specialized AI models no longer require massive datasets or computational resources. Using LoRA adapters on models like FLUX.2, developers and creatives can fine-tune a model for a specific artistic style or domain with a small set of 50 to 100 images, making custom AI accessible even with limited hardware.
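The reason LoRA fits on limited hardware comes down to simple arithmetic: instead of updating a full weight matrix of shape `d_out x d_in`, it trains two low-rank factors of shapes `d_out x rank` and `rank x d_in`. The sketch below computes the difference for one layer. The layer size and rank are illustrative assumptions and are not taken from FLUX.2 or any other specific model.

```python
def lora_param_counts(d_out, d_in, rank):
    """Return (full, lora) trainable parameter counts for one weight matrix.

    full: every entry of the d_out x d_in matrix is trainable.
    lora: only the factors B (d_out x rank) and A (rank x d_in) are trainable.
    """
    full = d_out * d_in
    lora = rank * (d_out + d_in)
    return full, lora


# Illustrative layer: a 4096 x 4096 projection adapted with rank 16.
full, lora = lora_param_counts(4096, 4096, 16)
print(f"full fine-tuning: {full:,} parameters")
print(f"LoRA (rank 16):   {lora:,} parameters")
print(f"ratio:            {lora / full:.4%}")
```

For this assumed layer the adapter trains 131,072 parameters instead of 16,777,216, which is 1/128 of the full count.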

Google's strategy involves building specialized models (e.g., Veo for video) to push the frontier in a single modality. The learnings and breakthroughs from these focused efforts are then integrated back into the core, multimodal Gemini model, accelerating its overall capabilities.