Interacting with powerful coding agents requires a new skill: specifying requirements with extreme clarity. The creative process will be driven less by writing code line-by-line and more by crafting unambiguous natural language prompts. This elevates clear specification as a core competency for software engineers.

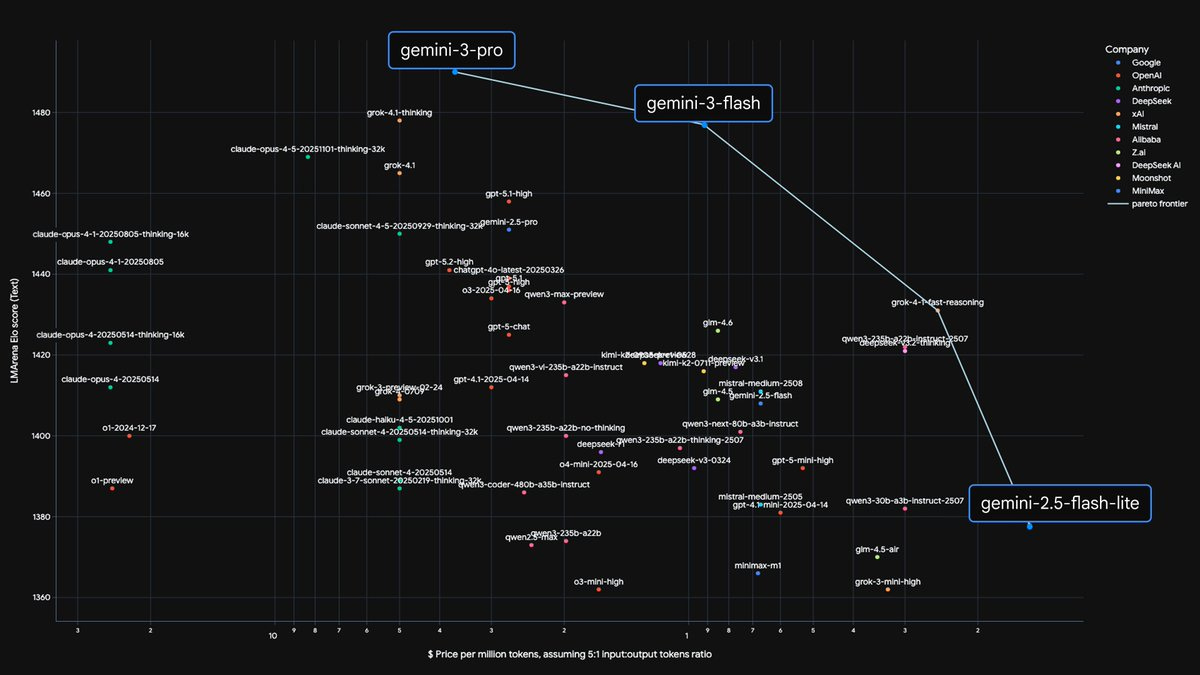

Google's strategy is to build both cutting-edge models (Pro/Ultra) and efficient ones (Flash). The key is distillation, which transfers capabilities from the large models into smaller, faster versions, so that together the model family serves use cases ranging from complex reasoning to everyday applications.
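As a rough illustration of what distillation means in practice, here is a minimal sketch of the standard soft-target loss: the student is trained to match the teacher's temperature-softened output distribution. The logits, the temperature of 2.0, and the function names are illustrative assumptions; this is not a description of how Flash models are actually trained.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: List[float],
                      student_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # teacher's soft targets
    q = softmax(student_logits, temperature)  # student's predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    # T^2 scaling keeps gradient magnitudes comparable across temperatures.
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]  # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # prefers a different class
print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

The soft targets carry more information than a single hard label: they tell the student how the teacher ranks the wrong answers too, which is part of why a smaller model can recover much of a larger one's behavior.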

The necessity of batching stems from a fundamental hardware reality: moving data is far more energy-intensive than computing with it. Moving a single parameter from on-chip SRAM to the multiplier can cost roughly 1000x more energy than the multiplication itself. Batching amortizes that cost: once a parameter has been fetched, it is reused for every example in the batch, so one expensive data movement pays for many cheap multiplications.
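The amortization argument can be made concrete with a back-of-the-envelope model. The sketch below assumes, purely for illustration, that fetching one parameter costs 1000 energy units and one multiply-accumulate costs 1 unit (mirroring the ~1000x ratio above); it ignores activation movement, memory hierarchy levels, and every other real-world cost.

```python
# Hypothetical energy units, chosen only to mirror the ~1000x ratio in the text.
FETCH_COST = 1000.0  # moving one parameter from SRAM to the multiplier
MAC_COST = 1.0       # one multiply-accumulate using that parameter


def energy_per_mac(batch_size: int) -> float:
    """Average energy per multiply when one fetched parameter serves a whole batch."""
    # The parameter is fetched once, then reused for every example in the batch.
    total = FETCH_COST + MAC_COST * batch_size
    return total / batch_size


for b in (1, 8, 64, 256, 1024):
    e = energy_per_mac(b)
    share = FETCH_COST / (FETCH_COST + MAC_COST * b)
    print(f"batch={b:5d}  energy/MAC={e:8.2f}  data-movement share={share:6.1%}")
```

At batch size 1 nearly all of the energy goes to moving the parameter; as the batch grows, the per-multiply cost falls toward the bare cost of the multiply itself.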

The Gemini project originated from a one-page memo by Jeff Dean arguing Google was fragmenting its best people, compute, and ideas across separate projects in Google Brain and DeepMind. He advocated for a unified effort to build a single powerful multimodal model, leading to the strategic merger that created Gemini.

When designing smaller models, it's inefficient to use limited parameters for memorizing facts that can be looked up. Jeff Dean advocates for focusing a model's capacity on core reasoning abilities and pairing it with a retrieval system. This makes the model more generally useful, as it can access a vast external knowledge base when needed.
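A minimal sketch of the "small model plus retrieval" pattern: instead of relying on facts memorized in the weights, the system looks up relevant passages and places them in the prompt for the model to reason over. Everything here is hypothetical: the toy corpus, the bag-of-words cosine scorer standing in for a real embedding model, and the `build_prompt` helper standing in for whatever actually calls the model.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

# Hypothetical external knowledge base; a real system would use a document store.
CORPUS = [
    "The TPU is a custom accelerator designed by Google for machine learning.",
    "Distillation transfers knowledge from a large teacher model to a smaller student.",
    "An inverted index maps each term to the documents that contain it.",
]


def tokenize(text: str) -> Counter:
    """Lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, k: int = 1) -> List[Tuple[float, str]]:
    """Rank documents by bag-of-words cosine similarity (stand-in for embeddings)."""
    q = tokenize(query)
    scored = [(cosine(q, tokenize(doc)), doc) for doc in CORPUS]
    return sorted(scored, reverse=True)[:k]


def build_prompt(question: str, k: int = 1) -> str:
    """Put retrieved passages in the context so the model reasons over them."""
    context = "\n".join(doc for _, doc in retrieve(question, k))
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


print(build_prompt("What does an inverted index map?"))
```

The design point is the division of labor: the knowledge base can grow or change without retraining, while the model's limited parameters are spent on understanding the question and using the retrieved context.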

Don't assume that a "good enough" cheap model will satisfy all future needs. Jeff Dean argues that as AI models become more capable, users' expectations and the complexity of their requests grow in tandem. This creates a perpetual need for pushing the performance frontier, as today's complex tasks become tomorrow's standard expectations.

When pre-training a large multimodal model, including small samples from many diverse modalities (like LiDAR or MRI data) is highly beneficial. This "tempts" the model, giving it an awareness that these data types exist and have structure. This initial exposure makes the model more adaptable for future fine-tuning on those specific domains.
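One way to picture "small samples from many modalities" is as a pre-training data mixture in which rare modalities get small but non-zero sampling weights. The modality names and weights below are invented for illustration and are not Gemini's actual mixture.

```python
import random
from collections import Counter

# Hypothetical mixture weights: dominant modalities get most of the budget,
# while niche modalities get a small, non-zero share so the model still sees them.
MIXTURE = {
    "text": 0.70,
    "images": 0.20,
    "audio": 0.07,
    "lidar": 0.02,
    "mri": 0.01,
}


def sample_modalities(n: int, seed: int = 0) -> Counter:
    """Draw the modality for each of n training examples according to MIXTURE."""
    rng = random.Random(seed)
    names = list(MIXTURE)
    weights = list(MIXTURE.values())
    return Counter(rng.choices(names, weights=weights, k=n))


counts = sample_modalities(100_000)
for name in MIXTURE:
    print(f"{name:>6}: {counts[name]:6d}")
```

Even at a 1% weight, a rare modality contributes a steady stream of examples over a long training run, which is enough to give the model some exposure to that data's structure without displacing the main modalities.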

In 2001, Google realized its combined server RAM could hold a full copy of its web index. Moving from disk-based to in-memory systems eliminated slow disk seeks, enabling complex queries with synonyms and semantic expansion. This fundamentally improved search quality long before LLMs became mainstream.
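A toy sketch of why in-memory serving made query expansion affordable: with the index held in RAM, looking up each extra synonym term is a cheap hash-table access rather than another disk seek. The documents, the synonym table, and the OR-style matching are hypothetical simplifications, not Google's actual index design.

```python
from collections import defaultdict
from typing import Dict, List, Set

DOCS = {
    1: "cheap flights to paris",
    2: "inexpensive airfare deals",
    3: "paris hotel reviews",
}

# Hypothetical synonym table used for query expansion.
SYNONYMS: Dict[str, List[str]] = {
    "cheap": ["inexpensive", "budget"],
    "flights": ["airfare"],
}


def build_index(docs: Dict[int, str]) -> Dict[str, Set[int]]:
    """In-memory inverted index: term -> set of doc IDs containing it."""
    index: Dict[str, Set[int]] = defaultdict(set)
    for doc_id, text in docs.items():
        for term in text.split():
            index[term].add(doc_id)
    return index


def expand(query: str) -> Set[str]:
    """Add synonyms for every query term."""
    terms = set(query.split())
    for t in list(terms):
        terms.update(SYNONYMS.get(t, []))
    return terms


def search(index: Dict[str, Set[int]], query: str) -> Set[int]:
    """Return docs matching any expanded term; each lookup is an in-RAM dict access."""
    hits: Set[int] = set()
    for term in expand(query):
        hits |= index.get(term, set())
    return hits


index = build_index(DOCS)
print(sorted(search(index, "cheap flights")))
```

Without expansion, the query would match only document 1; expansion also surfaces document 2, which shares no words with the original query. On disk, every added term would cost another seek, which is what made this kind of expansion impractical before the index moved into memory.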

Designing custom AI hardware is a long-term bet. Google's TPU team co-designs chips with ML researchers to anticipate future needs. They aim to build hardware for the models that will be prominent 2-6 years from now, sometimes embedding speculative features that could provide massive speedups if research trends evolve as predicted.

Just as neural networks replaced hand-crafted features, large generalist models are replacing narrow, task-specific ones. Jeff Dean notes the era of unified models is "really upon us." A single, large model that can generalize across domains like math and language is proving more powerful than bespoke solutions for each, a modern take on the "bitter lesson."