A key way to make consumer LLMs faster and cheaper is to cache answers to frequently asked, static questions such as "When was OpenAI founded?" This approach, similar to Google's knowledge panels, would answer a large cohort of queries instantly without engaging expensive GPU resources for every request.
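
A minimal sketch of this caching idea, assuming the model is exposed as a plain function from question to answer. The names `normalize` and `AnswerCache` are hypothetical and not taken from any real serving stack, and a production system would also need expiry and a way to decide which questions are truly static.

```python
import re
from typing import Callable, Dict


def normalize(question: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace so that
    trivially different phrasings share one cache key."""
    cleaned = re.sub(r"[^\w\s]", "", question.lower())
    return " ".join(cleaned.split())


class AnswerCache:
    """Serve repeated static questions from memory instead of the model.

    `model` is a hypothetical stand-in for an expensive GPU-backed call.
    """

    def __init__(self, model: Callable[[str], str]) -> None:
        self._model = model
        self._answers: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def ask(self, question: str) -> str:
        key = normalize(question)
        if key in self._answers:
            # Cache hit: no model call is made.
            self.hits += 1
            return self._answers[key]
        # Cache miss: pay for one model call, then remember the answer.
        self.misses += 1
        answer = self._model(question)
        self._answers[key] = answer
        return answer
```

Exact-match keys only catch near-identical phrasings; matching paraphrases would need something like embedding similarity, which this sketch deliberately leaves out.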

Related Insights

The "Bitter Lesson" is not just about using more compute, but leveraging it scalably. Current LLMs are inefficient because they only learn during a discrete training phase, not during deployment where most computation occurs. This reliance on a special, data-intensive training period is not a scalable use of computational resources.

Although on-device models are usually discussed in terms of privacy, running them locally also eliminates API latency and per-request costs. This allows near-instant, high-volume processing at no marginal API cost, a key advantage over cloud-based AI services.

OpenAI found that significant upgrades to model intelligence, particularly for complex reasoning, did not improve user engagement. Users overwhelmingly prefer faster, simpler answers over more accurate but time-consuming responses, a disconnect that benefited competitors like Google.

In 2001, Google realized its combined server RAM could hold a full copy of its web index. Moving from disk-based to in-memory systems eliminated slow disk seeks, enabling complex queries with synonyms and semantic expansion. This fundamentally improved search quality long before LLMs became mainstream.

Model architecture decisions directly impact inference performance. AI company Zyphra pre-selects its target hardware and then chooses model parameters (for example, a hidden dimension with many factors of two) to align with how GPUs split up workloads, maximizing efficiency from day one.
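
One way to read the hidden-dimension heuristic above is that the dimension should be divisible by a large power of two, so it can be halved repeatedly across devices and tiles without leftovers. The sketch below checks that property. The function names and the default tile size of 64 are illustrative assumptions, not Zyphra's actual tooling or numbers.

```python
def largest_power_of_two_factor(n: int) -> int:
    """Return the largest power of two that divides n (n must be positive)."""
    if n <= 0:
        raise ValueError("n must be a positive integer")
    # n & -n isolates the lowest set bit of n, which is that power of two.
    return n & -n


def shards_cleanly(hidden_dim: int, num_shards: int, tile: int = 64) -> bool:
    """True if hidden_dim splits evenly across num_shards and each shard
    is a whole number of tiles (tile size is an assumed example value)."""
    if hidden_dim % num_shards != 0:
        return False
    return (hidden_dim // num_shards) % tile == 0


# 4096 is itself a power of two; 5120 = 1024 * 5; 5000 = 8 * 625.
candidates = [4096, 5120, 5000]
report = {d: largest_power_of_two_factor(d) for d in candidates}
```

Under these assumptions, a dimension like 5000 divides across 8 shards but leaves each shard with 625 columns, which is not a multiple of the tile size.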

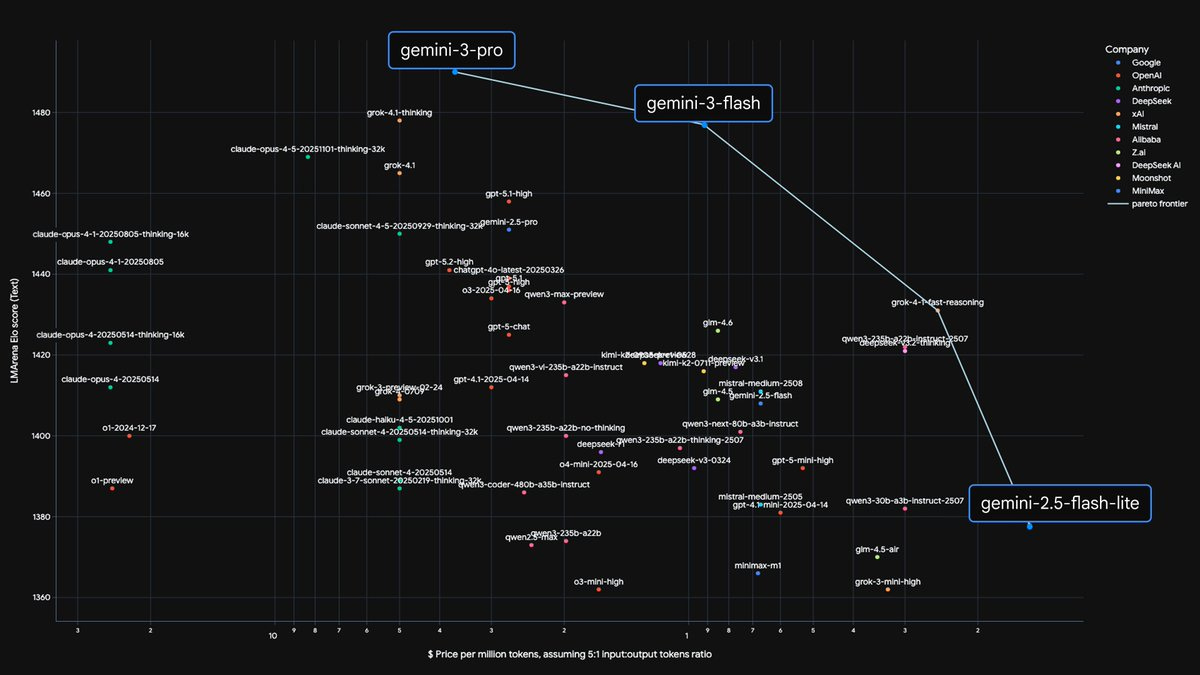

Companies like OpenAI and Anthropic are intentionally shrinking their flagship models (e.g., GPT-4o is smaller than GPT-4). The biggest constraint isn't creating more powerful models, but serving them at a speed users will tolerate. Slow models kill adoption, regardless of their intelligence.

Relying solely on premium models like Claude Opus can lead to unsustainable API costs ($1M/year projected). The solution is a hybrid approach: use powerful cloud models for complex tasks and cheaper, locally-hosted open-source models for routine operations.

The cost to achieve a specific performance benchmark dropped from $60 per million tokens with GPT-3 in 2021 to just $0.06 with Llama 3.2 3B in 2024. This dramatic cost reduction makes sophisticated AI economically viable for a wider range of enterprise applications, shifting the focus to on-premise solutions.
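
The arithmetic behind these figures can be sketched as follows. The two prices come from the text; the three-year span (2021 to 2024) and the one-billion-token workload are assumptions added for illustration.

```python
def reduction_factor(old_price: float, new_price: float) -> float:
    """How many times cheaper the new price is than the old one."""
    return old_price / new_price


def annualized_factor(total_factor: float, years: float) -> float:
    """Constant yearly factor that compounds to total_factor over `years`."""
    return total_factor ** (1.0 / years)


def workload_cost(tokens: int, price_per_million: float) -> float:
    """Dollar cost of processing `tokens` at a per-million-token price."""
    return tokens / 1_000_000 * price_per_million


GPT3_2021 = 60.00   # USD per million tokens, from the text
LLAMA_2024 = 0.06   # USD per million tokens, from the text

factor = reduction_factor(GPT3_2021, LLAMA_2024)    # roughly a 1000x drop
yearly = annualized_factor(factor, years=3)          # roughly 10x per year (assumed span)
old_bill = workload_cost(1_000_000_000, GPT3_2021)   # assumed 1B-token workload
new_bill = workload_cost(1_000_000_000, LLAMA_2024)
```

On these numbers, a workload that would have cost about $60,000 at the 2021 price costs about $60 at the 2024 price.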

An emerging rule from enterprise deployments is to use small, fine-tuned models for well-defined, domain-specific tasks where they excel. Large models should be reserved for generic, open-ended applications with unknown query types where their broad knowledge base is necessary. This hybrid approach optimizes performance and cost.
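
This rule can be sketched as a small router, assuming each model is callable as a function from prompt to text and that the caller knows the task label in advance. `TaskRouter` and the task names are hypothetical; detecting the task automatically is a separate problem not covered here.

```python
from typing import Callable, Dict, Optional

Model = Callable[[str], str]


class TaskRouter:
    """Send well-defined tasks to small fine-tuned models and everything
    else to one large general model."""

    def __init__(self, general_model: Model) -> None:
        self._general = general_model
        self._specialists: Dict[str, Model] = {}

    def register(self, task: str, model: Model) -> None:
        """Attach a small fine-tuned model to a named, well-defined task."""
        self._specialists[task] = model

    def run(self, prompt: str, task: Optional[str] = None) -> str:
        # Unknown or missing task labels fall back to the large model.
        if task is not None and task in self._specialists:
            return self._specialists[task](prompt)
        return self._general(prompt)
```

Falling back to the large model for unrecognized tasks keeps open-ended queries working while the specialists absorb the predictable, high-volume traffic.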

A cost-effective AI architecture involves using a small, local model on the user's device to pre-process requests. This local AI can condense large inputs into an efficient, smaller prompt before sending it to the expensive, powerful cloud model, optimizing resource usage.
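
A minimal sketch of this two-stage flow, assuming both models are available as plain functions. `local_condense`, `cloud_model`, and the `max_chars` budget are hypothetical placeholders; a real system would more likely budget in tokens than in characters.

```python
from typing import Callable


def answer_with_preprocessing(
    user_input: str,
    local_condense: Callable[[str], str],
    cloud_model: Callable[[str], str],
    max_chars: int = 2000,
) -> str:
    """Condense oversized inputs on-device before calling the cloud model.

    Short inputs are passed through unchanged, since condensing them
    would add local work without saving any cloud cost.
    """
    prompt = user_input
    if len(prompt) > max_chars:
        # The small local model shrinks the input into a compact prompt.
        prompt = local_condense(prompt)
    # Only the (possibly condensed) prompt reaches the expensive model.
    return cloud_model(prompt)
```

The saving depends on how well the local model preserves the details the cloud model needs, so the threshold is worth tuning per application.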

![Dylan Patel - Inside the Trillion-Dollar AI Buildout - [Invest Like the Best, EP.442] thumbnail](https://megaphone.imgix.net/podcasts/799253cc-9de9-11f0-8661-ab7b8e3cb4c1/image/d41d3a6f422989dc957ef10da7ad4551.jpg?ixlib=rails-4.3.1&max-w=3000&max-h=3000&fit=crop&auto=format,compress)