Identifying unauthorized sellers on platforms like Amazon is the easy part. Getting them removed requires building a massive, forensic-level evidence file that documents every violation. Only this kind of court-ready evidence compels platforms to take action against bad actors.

Related Insights

Prime Day encourages third-party sellers to inflate pre-sale prices to create the illusion of a deep discount. Although Amazon itself is not doing this, the practice of "fakeflation" erodes customer trust in the entire platform, turning a key marketing event into a significant brand liability.

Beyond data privacy, a key ethical responsibility for marketers using AI is ensuring content integrity. That means using platforms that keep a verifiable trail for every asset, check for originality, and offer AI-assisted verification of factual accuracy. These safeguards protect the brand and build customer trust.

A key operational use of AI at Affirm is regulatory compliance. The company deploys models to automatically scan thousands of merchant websites and ads, flagging incorrect or misleading claims about its financing products, claims for which Affirm itself is legally responsible.

Tushy finds little sales cannibalization between its DTC site and Amazon because the two channels serve different customer archetypes. Rather than pushing an "Amazon shopper" to a .com site, brands should meet customers where they are, focusing on mental and physical availability across all relevant channels.

For complex cases like "friendly fraud," traditional ground-truth labels are often missing. Stripe uses an LLM as a judge to evaluate the quality of AI-generated labels for suspicious payments. This creates a proxy for ground truth, enabling faster model iteration.

Many social media and ad tech companies benefit financially from bot activity that inflates engagement and user counts. This perverse incentive means they are unlikely to solve the bot problem themselves, creating a need for independent, verifiable trust layers like blockchain.

Unlike service platforms such as Uber, which rely on real-world networks, Amazon depends on a high-margin ad business that is existentially threatened by AI agents that bypass sponsored listings. This vulnerability explains its uniquely aggressive legal stance against Perplexity: Amazon stands to lose a massive, growing revenue stream if users stop interacting directly with its site.

In environments plagued by counterfeits, such as Nigeria's pharmaceutical market, product value isn't just about price or convenience. A core, defensible feature is guaranteed authenticity. Delivering it requires solving complex supply chain and tracking problems, which in turn builds a critical moat against competitors.

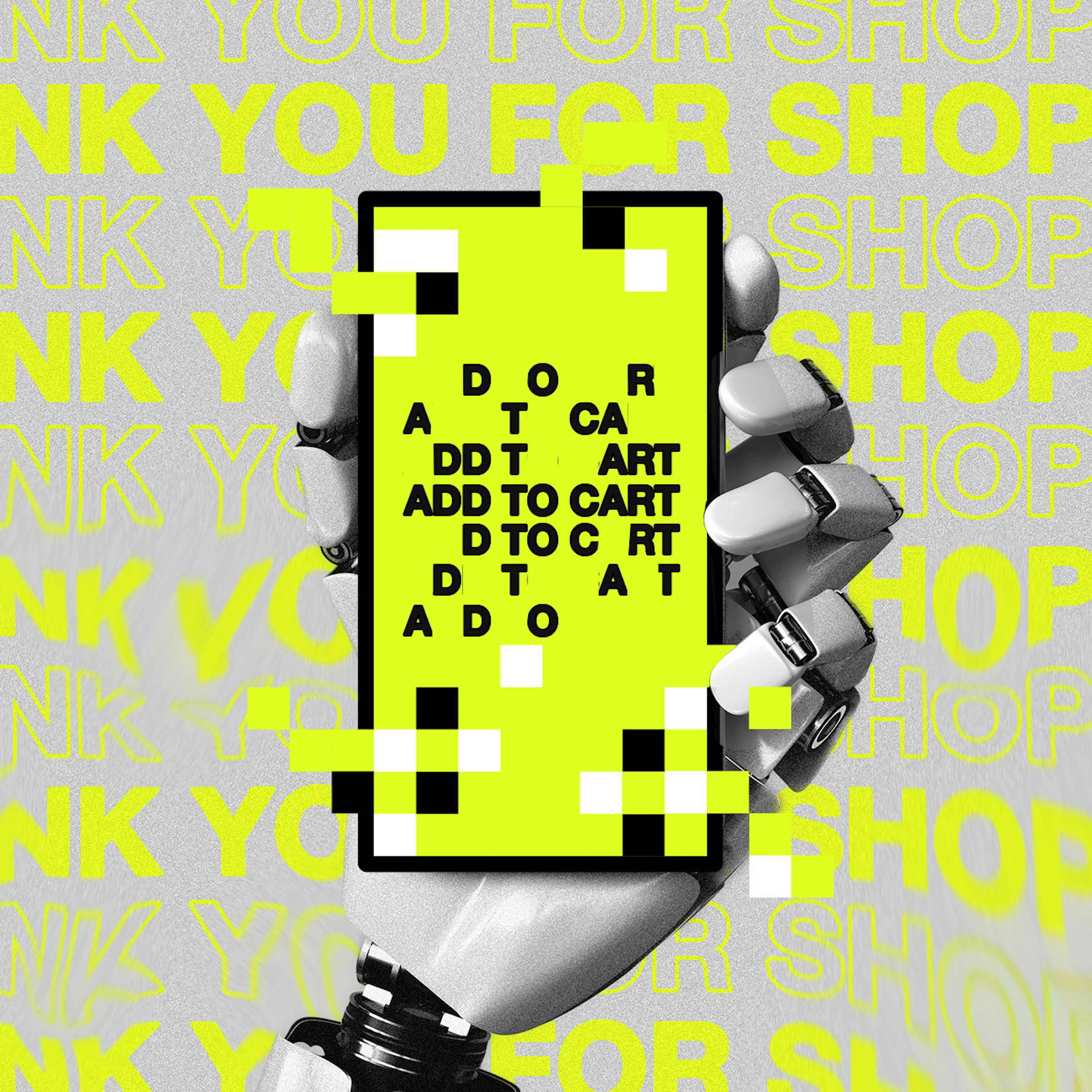

For years, businesses have focused on protecting their sites from malicious bots. That same architecture now blocks beneficial AI agents acting on behalf of consumers. Companies must rethink their technical infrastructure so it can tell these new "good bots" apart from malicious ones and welcome them for agentic commerce.

Amazon is suing Perplexity because its AI agent can autonomously log into user accounts and make purchases. This isn't just a legal spat over terms of service; it's the first major corporate conflict over AI agent-driven commerce, foreshadowing a future where brands must contend with non-human customers.