Don't assume that a "good enough" cheap model will satisfy all future needs. Jeff Dean argues that as AI models become more capable, users' expectations and the complexity of their requests grow in tandem. This creates a perpetual need to push the performance frontier, as today's complex tasks become tomorrow's standard expectations.

Related Insights

As frontier AI models reach a plateau of perceived intelligence, the key differentiator is shifting to user experience. Low-latency, reliable performance is becoming more critical than marginal gains on benchmarks, making speed the next major competitive vector for AI products like ChatGPT.

Building an AI-native product requires betting on the trajectory of model improvement, much like developers once bet on Moore's Law. Instead of designing for today's LLM constraints, assume rapid progress and build for the capabilities that will exist tomorrow. This prevents creating an application that is quickly outdated.

An AI product's job is never done because user behavior evolves. As users become more comfortable with an AI system, they naturally start pushing its boundaries with more complex queries. This requires product teams to continuously recalibrate the system to meet these new, unanticipated demands.

A paradox of rapid AI progress is the widening "expectation gap." As users become accustomed to AI's power, their expectations for its capabilities grow even faster than the technology itself. This leads to a persistent feeling of frustration, even though the tools are objectively better than they were a year ago.

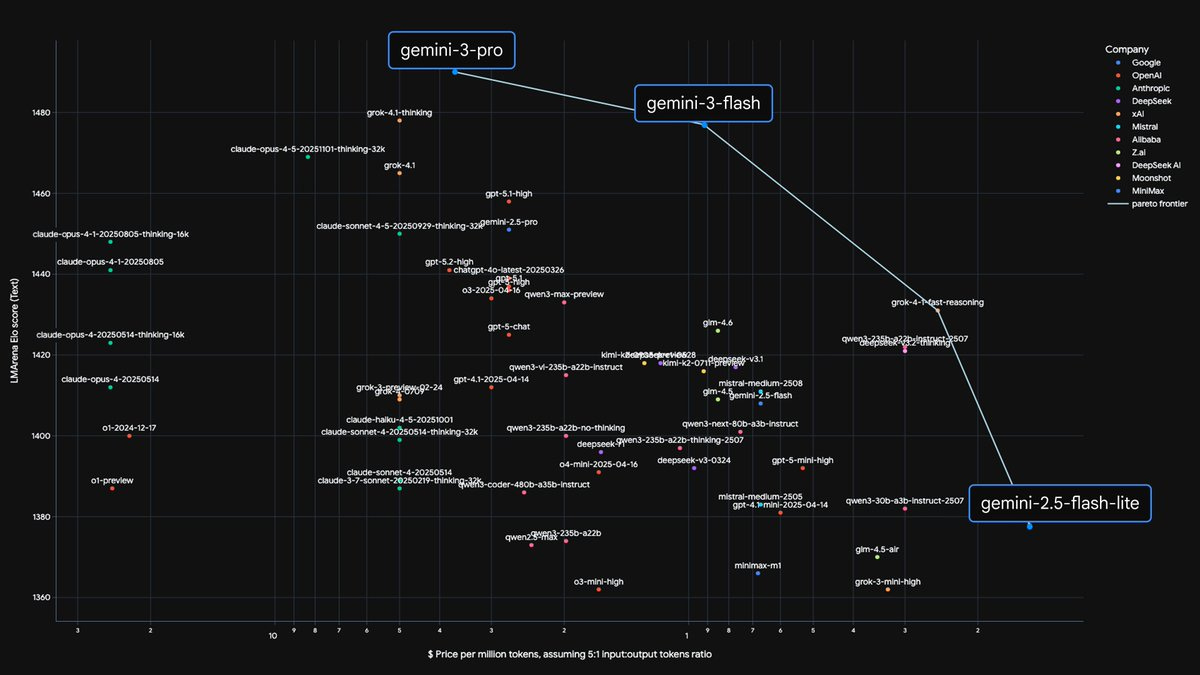

The novelty of new AI model capabilities is wearing off for consumers. The next competitive frontier is not about marginal gains in model performance but about creating superior products. The consensus is that current models are "good enough" for most applications, making product differentiation key.

When developing AI-powered tools, don't be constrained by current model limitations. Given the exponential improvement curve, design your product for the capabilities you anticipate models will have in six months. This ensures your product is perfectly timed to shine when the underlying tech catches up.

For consumer products like ChatGPT, models are already good enough for common queries. However, for complex enterprise tasks like coding, performance is far from solved. This gives model providers a durable path to sustained revenue growth through continued quality improvements aimed at professionals.

In the rapidly advancing field of AI, building products around current model limitations is a losing strategy. The most successful AI startups anticipate the trajectory of model improvements, creating experiences that seem 80% complete today but become magical once future models unlock their full potential.

The perceived limits of today's AI stem not from the models themselves but from our failure to build the right "agentic scaffold" around them. There's a "model capability overhang" where much more potential can be unlocked with better prompting, context engineering, and tool integrations.

AI models are more powerful than their current applications suggest. This "capability overhang" exists for two reasons: enterprises often deploy smaller, more efficient models that are "good enough," and they struggle with the impedance mismatch of integrating AI into legacy processes and data silos.