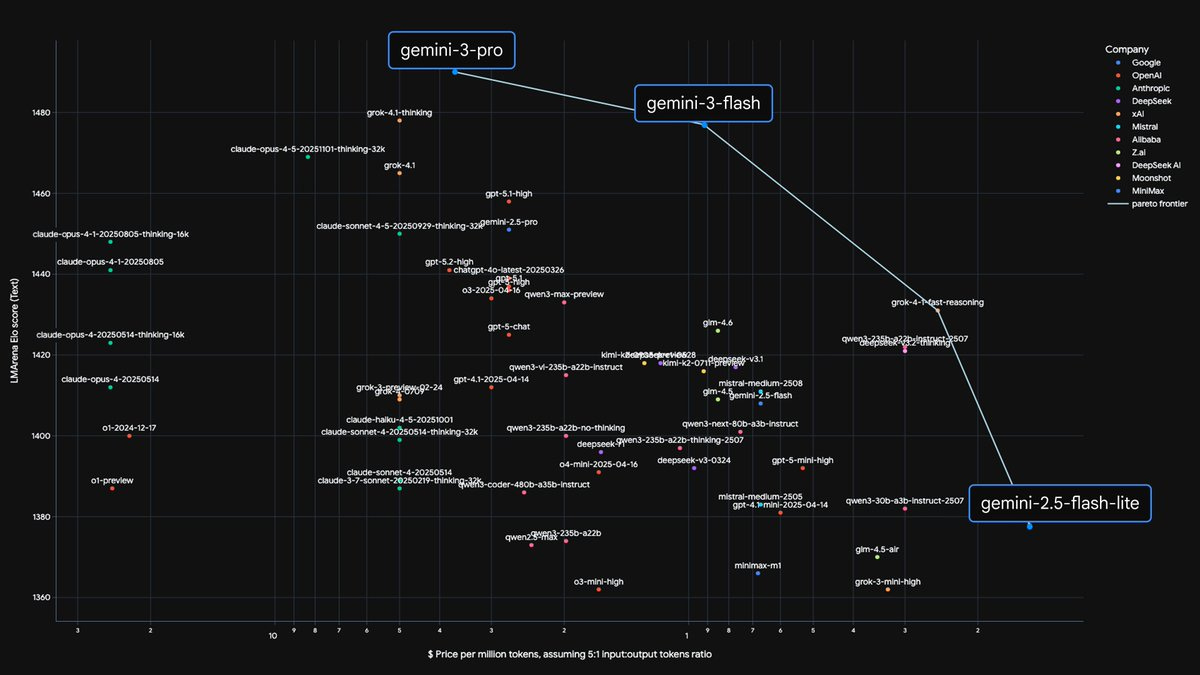

Google's strategy involves building both cutting-edge models (Pro/Ultra) and efficient ones (Flash). The key is distillation, which transfers capabilities from large models to smaller, faster versions. This lets Google serve a wide range of use cases, from complex reasoning to everyday applications.
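The distillation idea described above can be illustrated with a training loss. This is a minimal sketch of the classic soft-target formulation, in which a small "student" model learns to match the softened output distribution of a large "teacher". It is not Google's actual training recipe. The function names, the temperature of 2.0, and the 0.5 weighting are illustrative assumptions.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; subtracting the max keeps exp() stable."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    student_logits: List[float],
    teacher_logits: List[float],
    label: int,
    temperature: float = 2.0,  # illustrative assumption
    alpha: float = 0.5,        # illustrative assumption: weight on the teacher signal
) -> float:
    """Blend a soft-target KL term (teacher -> student) with hard-label cross-entropy."""
    # Soften both distributions so the student also learns the teacher's
    # relative preferences among the "wrong" answers.
    p_teacher = softmax(teacher_logits, temperature)
    p_student_soft = softmax(student_logits, temperature)
    kl = sum(
        pt * (math.log(pt) - math.log(ps))
        for pt, ps in zip(p_teacher, p_student_soft)
        if pt > 0
    )
    # Ordinary cross-entropy against the ground-truth label at temperature 1.
    p_student = softmax(student_logits, 1.0)
    ce = -math.log(p_student[label])
    # Scaling the KL term by T^2 keeps its gradient magnitude comparable to the CE term.
    return alpha * (temperature ** 2) * kl + (1 - alpha) * ce


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]  # hypothetical teacher logits for one example
    student = [2.0, 1.5, 0.5]  # hypothetical student logits for the same example
    print(distillation_loss(student, teacher, label=0))
```

In practice the teacher's logits come from a large model run in inference mode, and only the student's parameters are updated to minimize this loss. That is why the resulting smaller model is cheaper to serve.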

Related Insights

The primary threat from competitors like Google may not be a superior model but a more cost-efficient one. Google's Gemini 3 Flash offers "frontier-level intelligence" at a fraction of the cost of larger frontier models. This shifts the competitive battleground from pure performance to price-performance, potentially undermining business models built on expensive, large-scale compute.

China is gaining an efficiency edge in AI by using "distillation": training smaller, cheaper models from larger ones. This "train the trainer" approach is much faster than training from scratch and challenges the capital-intensive US strategy, suggesting that current Western foundation models are inefficient and "bloated."

Google's competitive advantage in AI is its vertical integration. By controlling the entire stack, from custom TPUs and foundation models (Gemini) to developer tools (AI Studio) and user applications (Workspace), it creates a deeply integrated, cost-effective, and convenient ecosystem that is difficult to replicate.

Google's Gemini models show that a company can recover from a late start to achieve technical parity, or even superiority, in AI. However, the comeback also shows that the real challenge is translating technological prowess into market share and user adoption, areas where Google still lags.

Google's enduring AI advantage may come from its data moat. According to Cloudflare's network data, Google's web crawlers access 3.2 times more web pages than OpenAI's. That gives Google a far larger pool of training data that competitors struggle to match, potentially securing its long-term lead.

Unlike competitors that specialize, Google is the only company operating at scale across all four key layers of the AI stack: custom silicon (TPUs), a major cloud platform (GCP), a frontier foundation model (Gemini), and massive application distribution (Search, YouTube). This vertical integration is a unique strategic advantage in the AI race.

Horowitz argues that the belief that a single, god-level foundation model would dominate has proven false. He points to successful AI applications like Cursor, which uses 13 different models. In his view, value lies in the complex orchestration and design at the application layer, not just in having the largest single model.

Google's strategy involves building specialized models (e.g., Veo for video) to push the frontier in a single modality. The learnings and breakthroughs from these focused efforts are then integrated back into the core, multimodal Gemini model, accelerating its overall capabilities.

While competitors like OpenAI rely on GPUs from NVIDIA, Google trains its frontier AI models (like Gemini) on its own custom Tensor Processing Units (TPUs). This vertical integration gives Google a significant and often overlooked strategic advantage in cost, efficiency, and long-term innovation.

While startups like OpenAI can lead with a superior model, incumbents like Google and Meta possess the ultimate moat: distribution to billions of users across multiple top-ranked apps. They can rapidly deploy "good enough" models through established channels to reclaim market share from first movers.