When designing smaller models, it's inefficient to use limited parameters for memorizing facts that can be looked up. Jeff Dean advocates for focusing a model's capacity on core reasoning abilities and pairing it with a retrieval system. This makes the model more generally useful, as it can access a vast external knowledge base when needed.
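The pairing of a small model with a retrieval system can be sketched as follows. This is a minimal illustration, not a description of any production system: the `KNOWLEDGE_BASE` list, the word-overlap scoring, and the `retrieve` and `build_prompt` helpers are all hypothetical stand-ins for a real search index and prompt format.

```python
import re

# Hypothetical in-memory knowledge base; a real system would query a search index.
KNOWLEDGE_BASE = [
    "The Eiffel Tower is located in Paris.",
    "Mount Everest is the highest mountain above sea level.",
    "Python was first released in 1991.",
]

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, top_k=1):
    """Rank documents by word overlap with the query (a toy scoring rule)."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(query, documents):
    """Put the retrieved facts in front of the question, so the model
    reasons over supplied context instead of recalling from its weights."""
    context = "\n".join(retrieve(query, documents))
    return f"Context:\n{context}\n\nQuestion: {query}"

print(build_prompt("When was Python first released?", KNOWLEDGE_BASE))
```

The facts live outside the model, so the knowledge base can grow or be corrected without retraining.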

Related Insights

LLMs learn two things from pre-training: factual knowledge and intelligent algorithms (the "cognitive core"). Karpathy argues the vast memorized knowledge is a hindrance, making models rely on memory instead of reasoning. The goal should be to strip away this knowledge to create a pure, problem-solving cognitive entity.

An LLM shouldn't do math internally any more than a human would. The most intelligent AI systems will be those that know when to call specialized, reliable tools—like a Python interpreter or a search API—instead of attempting to internalize every capability from first principles.
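As a rough sketch of delegating to a reliable tool, the snippet below routes an arithmetic request to a small, restricted calculator rather than having the model produce the digits itself. The request format (`{"tool": ..., "input": ...}`) and the `answer` dispatcher are assumptions made for illustration; real tool-calling interfaces differ by provider.

```python
import ast
import operator

# Arithmetic operators the calculator tool is willing to evaluate.
_OPERATORS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}

def calculator_tool(expression):
    """Evaluate a plain arithmetic expression without calling eval()."""
    def evaluate(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPERATORS:
            return _OPERATORS[type(node.op)](evaluate(node.left), evaluate(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -evaluate(node.operand)
        raise ValueError("unsupported expression")
    return evaluate(ast.parse(expression, mode="eval").body)

def answer(request):
    """Dispatch a hypothetical structured tool call emitted by the model."""
    if request.get("tool") == "calculator":
        return calculator_tool(request["input"])
    return None  # no tool requested: fall back to ordinary text generation

print(answer({"tool": "calculator", "input": "12345 * 6789"}))
```

The model's job here is only to decide that a calculation is needed and to phrase it; the exact result comes from the tool.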

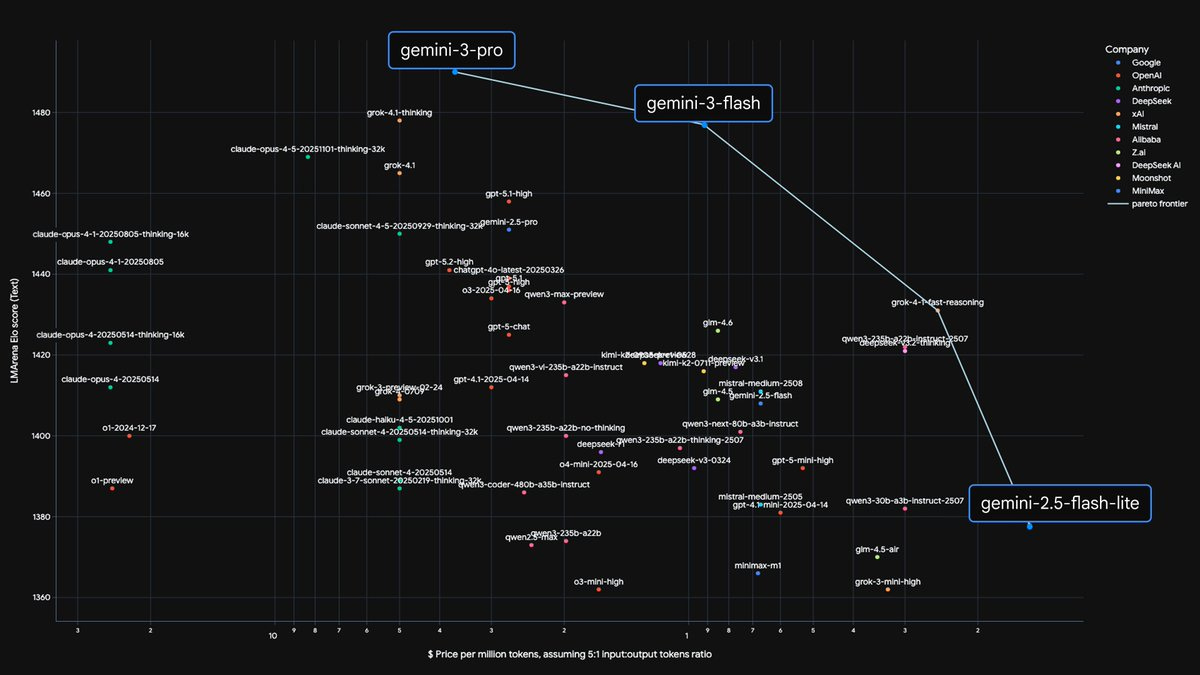

Accuracy on the "Omniscience" benchmark, which measures pure factual knowledge, correlates with a model's total parameter count more closely than any other metric does. This suggests embedded knowledge is a direct function of model size, distinct from reasoning abilities developed via training techniques.

The model uses a Mixture-of-Experts (MoE) architecture with over 200 billion parameters, but activates only a sparse subset of about 10 billion of them for any given token. This design provides the knowledge base of a massive model while keeping inference speed and cost comparable to those of much smaller models.

Performance on knowledge-intensive benchmarks correlates strongly with an MoE model's total parameter count, not its active parameter count. With leading models like Kimi K2 reportedly activating only ~3% of their parameters, this suggests there is significant room to increase sparsity and efficiency without degrading factual recall.
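To make the total-versus-active distinction concrete, here is a minimal sketch of sparse routing. It assumes top-k softmax gating, a common MoE scheme that the source does not confirm for any particular model; the router scores are made-up numbers, and `route_token` and `active_fraction` are hypothetical helper names.

```python
import math

def route_token(router_scores, top_k=2):
    """Return {expert_index: weight} for the top_k highest-scoring experts.

    Only the selected experts run for this token; the rest stay idle.
    """
    chosen = sorted(
        range(len(router_scores)),
        key=lambda i: router_scores[i],
        reverse=True,
    )[:top_k]
    # Softmax over the selected experts only, shifted for numerical stability.
    max_score = max(router_scores[i] for i in chosen)
    exps = {i: math.exp(router_scores[i] - max_score) for i in chosen}
    total = sum(exps.values())
    return {i: value / total for i, value in exps.items()}

def active_fraction(total_params, active_params):
    """Share of the model's parameters used for a single token."""
    return active_params / total_params

# Four experts, two active per token (illustrative scores).
print(route_token([0.1, 2.0, -1.0, 1.5], top_k=2))

# The figures quoted above: roughly 10B active out of 200B total.
print(f"{active_fraction(200e9, 10e9):.0%}")
```

Knowledge can be spread across all experts, while the per-token compute cost depends only on the few experts that are selected.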

The traditional lever of `temperature` for controlling model creativity has been superseded in modern reasoning models, where it's often fixed. The new critical parameter is the "thinking budget"—the amount of reasoning tokens a model can use before responding. A larger budget allows for more internal review and higher-quality outputs.

The binary distinction between "reasoning" and "non-reasoning" models is becoming obsolete. The more critical metric is now "token efficiency"—a model's ability to use more tokens only when a task's difficulty requires it. This dynamic token usage is a key differentiator for cost and performance.
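One way to picture dynamic token usage is a budget that scales with estimated task difficulty. The sketch below is purely illustrative: the difficulty score, the linear scaling rule, and the default limits are assumptions, not how any particular model allocates reasoning tokens.

```python
def thinking_budget(difficulty, min_tokens=0, max_tokens=8192):
    """Scale the reasoning-token budget linearly with difficulty in [0, 1].

    `difficulty` is a hypothetical score from some upstream estimator;
    values outside [0, 1] are clamped.
    """
    clamped = min(1.0, max(0.0, difficulty))
    return int(min_tokens + clamped * (max_tokens - min_tokens))

for score in (0.0, 0.5, 1.0):
    print(score, thinking_budget(score))
```

A token-efficient model behaves as if it applied such a rule internally, spending few tokens on easy queries and many on hard ones.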

An emerging rule from enterprise deployments is to use small, fine-tuned models for well-defined, domain-specific tasks where they excel. Large models should be reserved for generic, open-ended applications with unknown query types where their broad knowledge base is necessary. This hybrid approach optimizes performance and cost.
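The hybrid rule can be expressed as a simple router. This is a sketch under stated assumptions: the task labels and model names are hypothetical placeholders, and a real deployment would need a way to classify incoming requests first.

```python
# Hypothetical mapping from well-defined task types to small fine-tuned models.
SPECIALISTS = {
    "invoice_extraction": "small-invoice-model",
    "ticket_triage": "small-triage-model",
}

# Hypothetical large general-purpose model used for everything else.
GENERALIST = "large-general-model"

def choose_model(task_type):
    """Use a specialist when the task is known; otherwise fall back to the generalist."""
    return SPECIALISTS.get(task_type, GENERALIST)

print(choose_model("ticket_triage"))
print(choose_model("open_ended_question"))
```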

The "memory" feature in today's LLMs is a convenience that saves users from re-pasting context. It is far from human memory, which abstracts concepts and builds pattern recognition. The true unlock will be when AI develops intuitive judgment from past "experiences" and data, a much longer-term challenge.

To improve LLM reasoning, researchers feed them data that inherently contains structured logic. Training on computer code was an early breakthrough, as it teaches patterns of reasoning far beyond coding itself. Textbooks are another key source for building smaller, effective models.
