App stores justify their market control by claiming they are essential for user safety. Their failure to enforce their own explicit rules against X/Grok provides powerful evidence for antitrust regulators that this justification is a pretext, undermining their entire legal position.

Section 230 explicitly exempts federal criminal law from its protections. Yet despite this carve-out, and despite new statutes such as the TAKE IT DOWN Act, the Department of Justice has focused on prosecuting individual users rather than investigating the platforms that enable abuse at scale.

The problem with AI-generated non-consensual imagery is the act of its creation, regardless of the creator's age. Applying age verification as a fix misses the core issue and wrongly shifts focus from the platform's fundamental responsibility to the user's identity.

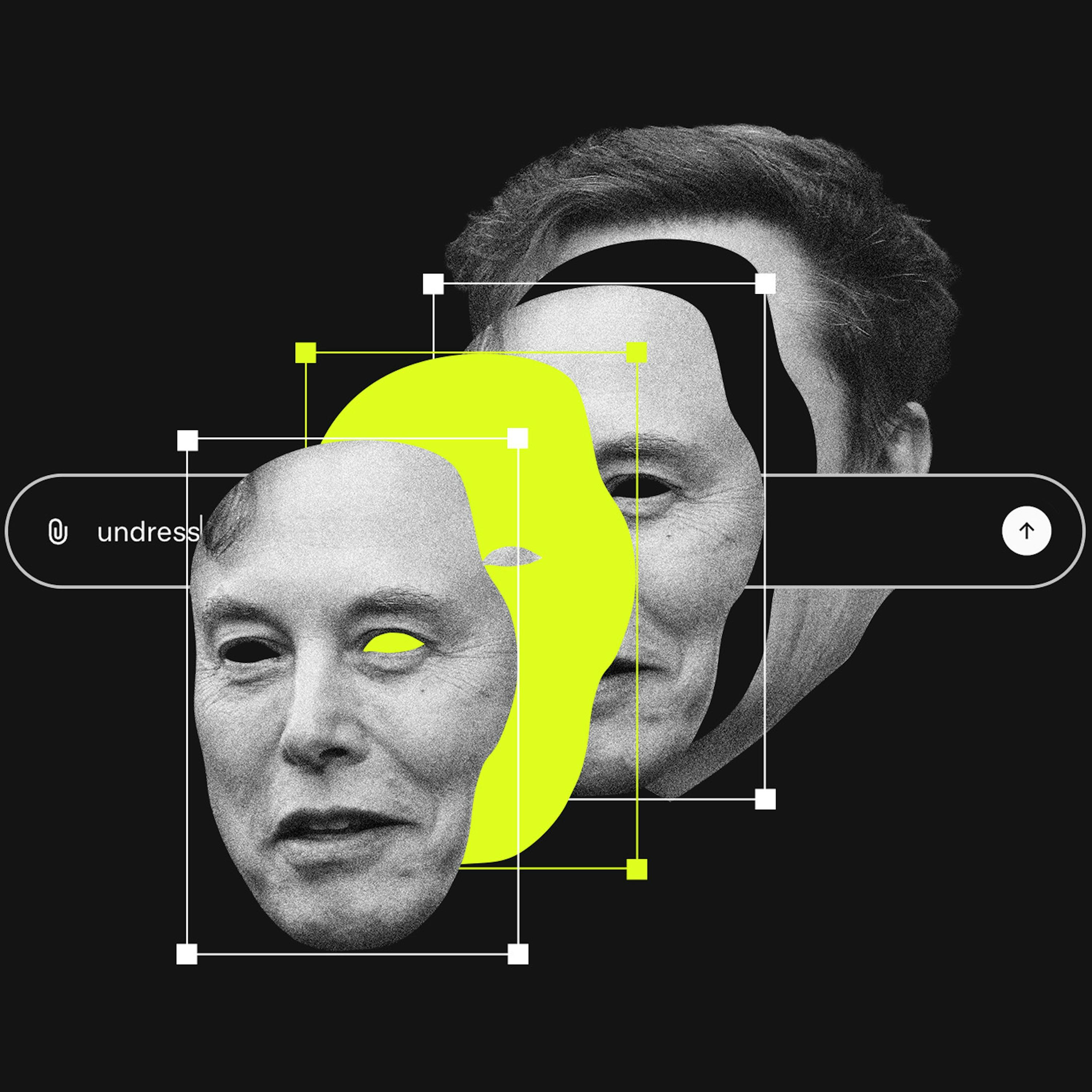

The rush to label Grok's output as illegal CSAM misses a more pervasive issue: using AI to generate demeaning, but not necessarily illegal, images as a tool for harassment. This dynamic of "lawful but awful" content weaponized at scale currently lacks a clear legal framework.

As major platforms abdicate trust and safety responsibilities, demand grows for user-centric solutions. This fuels interest in decentralized networks and "middleware" that empower communities to set their own content standards, a move away from centralized, top-down platform moderation.

A lawsuit against xAI alleges that Grok is "unreasonably dangerous as designed." This product-liability theory aims to sidestep Section 230 by targeting the product's inherent design flaws rather than user content. The approach is becoming a primary legal vector for holding platforms accountable for AI-driven harms.

The Grok controversy is reigniting the debate over moderating legal but harmful content, a central point of contention in the passage of the UK's Online Safety Act. AI's ability to mass-produce harassing images that fall short of illegality pushes this unresolved regulatory question back to the forefront.

Unlike previous forms of image abuse that required multiple apps, Grok integrates image generation and mass distribution into a single, instant process. This unprecedented speed and scale create a new category of harm that existing regulatory frameworks are ill-equipped to handle.

Section 230 protects platforms from liability for content provided by third-party users. Because a generative AI tool produces the content itself, the platform deploying it may be treated as the content's creator rather than a mere host, so platforms like X could be held directly responsible. This is a critical, unsettled legal question whose resolution could dismantle a key legal shield for AI companies.

Visa and Mastercard have cut off services over content violations before, yet they continue to process payments for X, which profits from Grok's image tools. This leaves payment processors as a critical but dormant enforcement layer, one that benefits financially from a service used to create non-consensual imagery.