Section 230 explicitly does not block federal criminal enforcement. Despite this, and the existence of laws like the TAKE IT DOWN Act, the Department of Justice focuses on prosecuting individual users, failing to investigate the platforms that enable abuse at scale.

Related Insights

The current era of exploitative digital platforms was made possible by a multi-decade failure to enforce antitrust laws. That lax enforcement allowed companies to buy rivals (e.g., Facebook buying Instagram) and engage in predatory pricing (e.g., Uber), creating monopolies that can now extract value without competitive consequences.

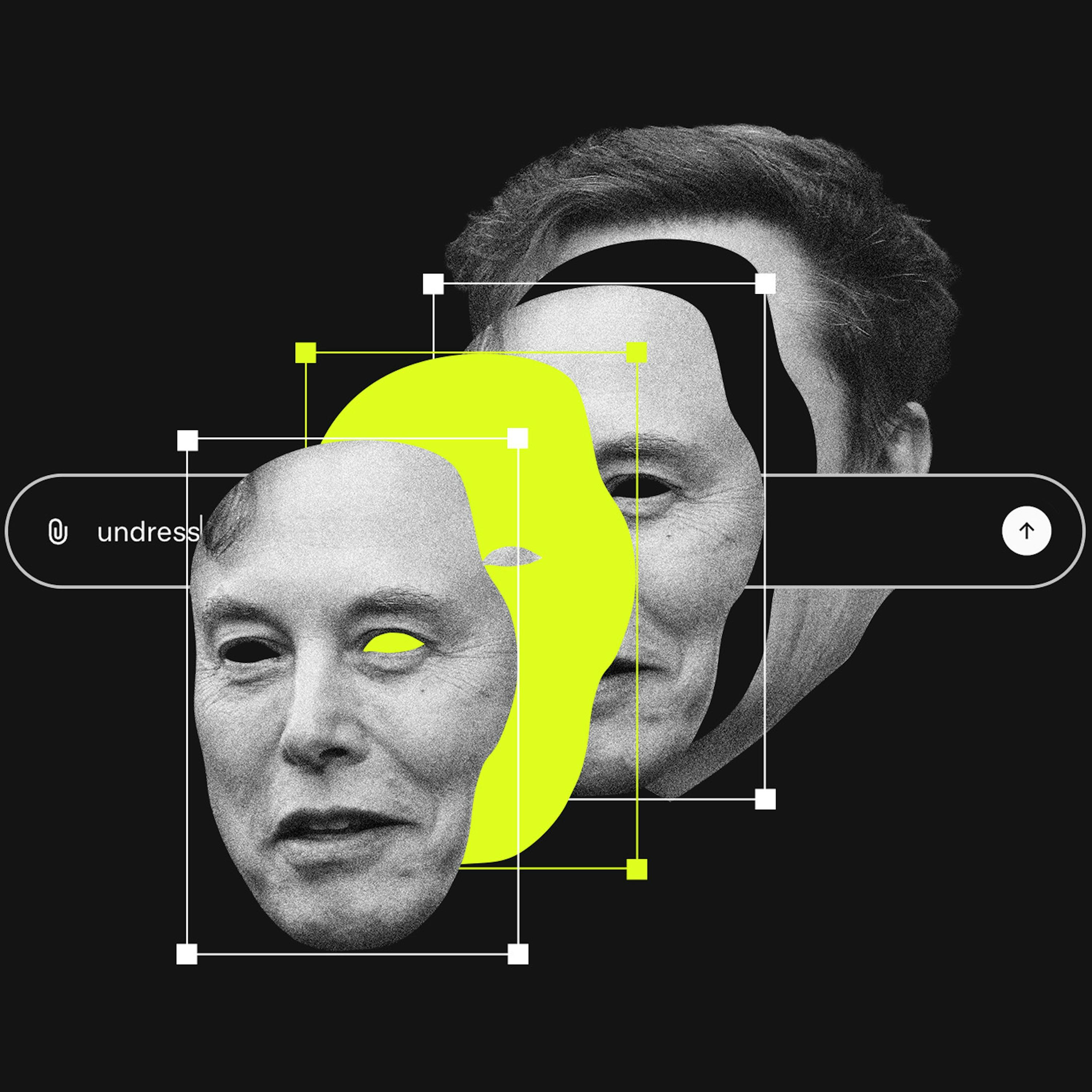

Unlike earlier forms of image-based abuse, which required one app to generate an image and others to spread it, Grok integrates image generation and mass distribution on X into a single, instant process. This unprecedented speed and scale create a new category of harm that existing regulatory frameworks are ill-equipped to handle.

The problem with social media isn't free speech itself, but algorithms that elevate misinformation for engagement. A targeted solution is to remove Section 230 liability protection *only* for content that platforms algorithmically boost, holding them accountable for their editorial choices without engaging in broad censorship.

A significant ideological inconsistency exists: political figures on the right fiercely condemn perceived federal overreach, such as the government requests to remove content revealed in the "Twitter Files," while simultaneously defending aggressive, violent federal actions by agencies like ICE. This reveals a partisan, rather than principled, opposition to government power.

Platforms grew dominant by acquiring competitors, a direct result of failed antitrust enforcement. Cory Doctorow argues that debates over intermediary liability (e.g., Section 230) are a distraction from the core issue: a decades-long drawdown of anti-monopoly law.

A lawsuit against xAI alleges that Grok is "unreasonably dangerous as designed." This product-liability framing sidesteps Section 230 by targeting the product's inherent design flaws rather than user content, and it is becoming a primary legal vector for holding platforms accountable for AI-driven harms.

As major platforms abdicate trust and safety responsibilities, demand grows for user-centric solutions. This fuels interest in decentralized networks and "middleware" that empower communities to set their own content standards, a move away from centralized, top-down platform moderation.

Laws intended to protect copyright, like the DMCA's anti-circumvention clause (Section 1201), are weaponized by platforms. They make it a felony to create software that modifies an app's behavior without the platform's permission (e.g., an ad-blocker), preventing competition and user choice.

Section 230 protects platforms from liability for third-party user content. Since generative AI tools create the content themselves, platforms like X could be held directly responsible. This is a critical, unsettled legal question that could dismantle a key legal shield for AI companies.

AI companies argue that their models' outputs are original creations to defend against copyright claims. This stance becomes a liability when the AI generates harmful material, because it positions the platform as a co-creator of that material and undermines the Section 230 "neutral platform" defense used by traditional social media.