While Visa and Mastercard have deplatformed services for content violations before, they continue to process payments for X, which profits from Grok's image tools. This makes payment processors a critical but inactive enforcement layer that benefits financially from the creation of non-consensual imagery.

Related Insights

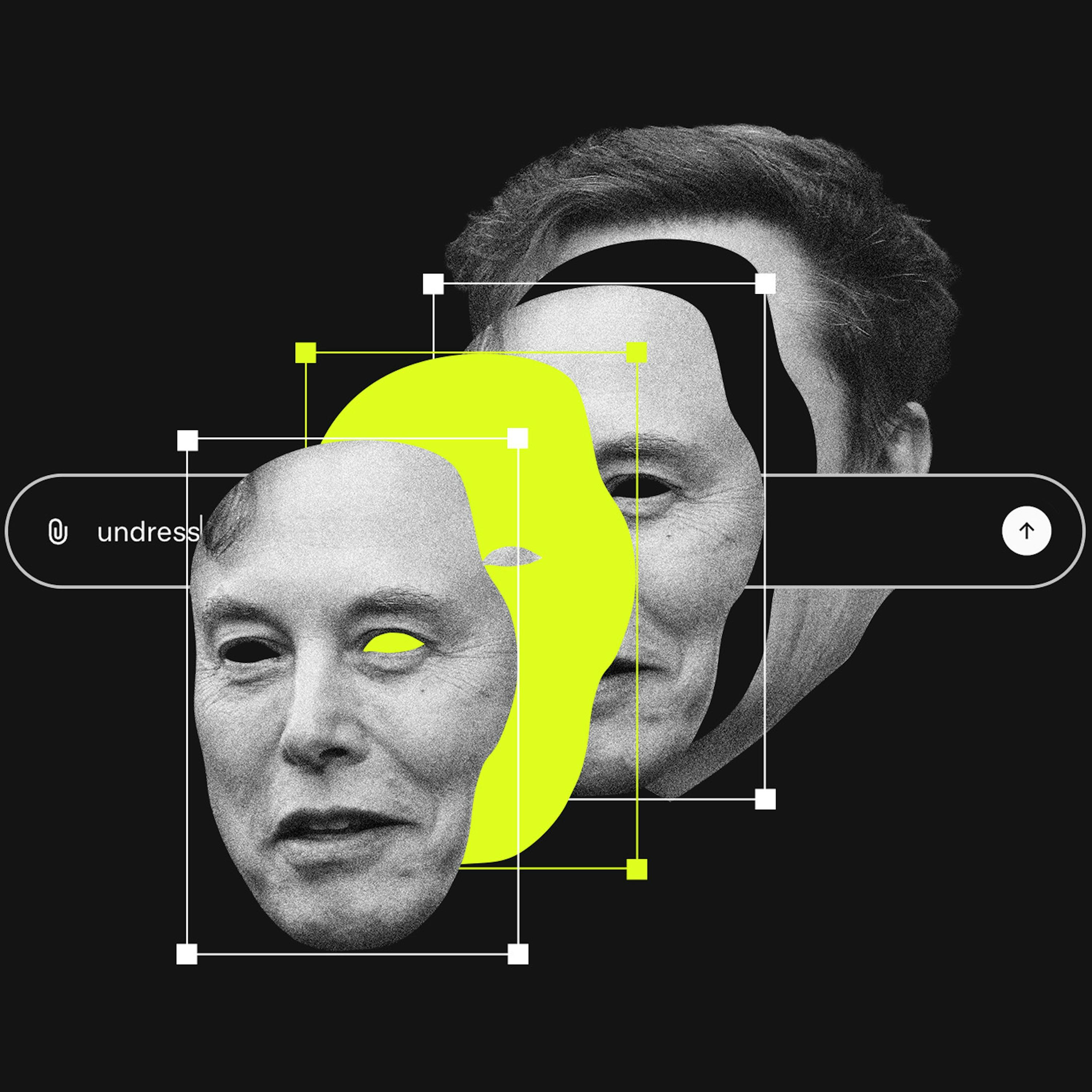

Unlike previous forms of image abuse that required multiple apps, Grok integrates image generation and mass distribution into a single, instant process. This unprecedented speed and scale create a new category of harm that existing regulatory frameworks are ill-equipped to handle.

OpenAI's decision to allow adult content for verified users is a calculated business strategy, not just a policy tweak. It's a direct move to counter-position against competitors like xAI's Grok and capture a massive, highly engaged market segment, signaling a shift towards a more permissive, Reddit-like content model.

The problem with AI-generated non-consensual imagery is the act of its creation, regardless of the creator's age. Applying age verification as a fix misses the core issue and wrongly shifts focus from the platform's fundamental responsibility to the user's identity.

Analysts suggest that OpenAI's decision to allow erotica, a move typically made by platforms playing catch-up (like xAI's Grok), indicates that paid subscription growth may be stalling. If so, the company is being pushed into a brand-damaging category it previously avoided in order to boost revenue and compete.

The rush to label Grok's output as illegal CSAM misses a more pervasive issue: using AI to generate demeaning, but not necessarily illegal, images as a tool for harassment. This dynamic of "lawful but awful" content weaponized at scale currently lacks a clear legal framework.

Rather than simply failing to police fraud, Meta perversely profits from it by charging higher rates for ads its systems suspect are fraudulent. This 'scam tax' creates a direct financial incentive to allow illicit ads, turning a blind eye into a lucrative revenue stream.

To enable agentic e-commerce while mitigating risk, major card networks are exploring how to issue credit cards directly to AI agents. These cards would have built-in limitations, such as spending caps (e.g., $200), allowing agents to execute purchases autonomously within safe financial guardrails.

Unlike the problems at other platforms, xAI's issues were not an unforeseen accident but a predictable result of its explicit strategy to embrace sexualized content. Features like a "spicy mode" and Elon Musk's own posts created a corporate culture that prioritized engagement from provocative content over implementing robust safeguards against its misuse for generating illegal material.

Section 230 protects platforms from liability for third-party user content. Since generative AI tools create the content themselves, platforms like X could be held directly responsible. This is a critical, unsettled legal question that could dismantle a key legal shield for AI companies.

Companies like OpenAI knowingly use copyrighted material, calculating that the market cap gained from rapid growth will far exceed the eventual legal settlements. This strategy prioritizes building a dominant market position by breaking the law, viewing fines as a cost of doing business.