As major platforms abdicate trust and safety responsibilities, demand grows for user-centric solutions. This fuels interest in decentralized networks and "middleware" that empower communities to set their own content standards, a move away from centralized, top-down platform moderation.

Related Insights

Platform decay isn't inevitable; it occurred because four historical checks and balances were removed. These were: robust antitrust enforcement preventing monopolies, regulation imposing penalties for bad behavior, a powerful tech workforce that could refuse unethical tasks, and technical interoperability that gave users control via third-party tools.

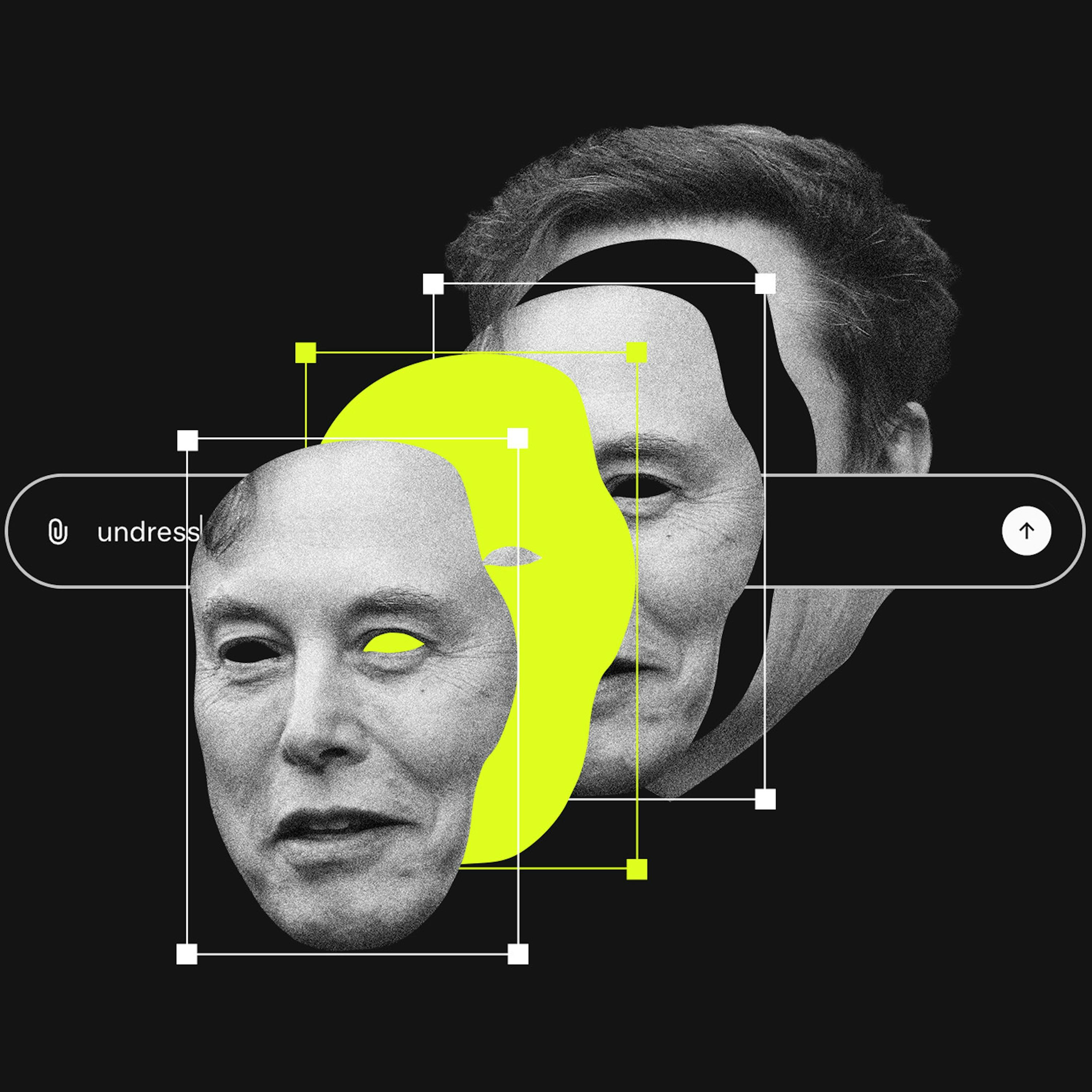

As AI-generated 'slop' floods platforms and reduces their utility, a counter-movement is brewing. This creates a market opportunity for new social apps that can guarantee human-created and verified content, appealing to users fatigued by endless AI-generated material.

Many social media and ad tech companies benefit financially from bot activity that inflates engagement and user counts. This perverse incentive means they are unlikely to solve the bot problem themselves, creating a need for independent, verifiable trust layers such as those built on blockchain.

Content moderation laws are difficult and slow to administer. A better solution is requiring platforms to provide users with a simple file of their data and social graph, allowing them to switch services easily and creating real competitive pressure.
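
To make the idea of "a simple file of their data and social graph" concrete, here is a minimal sketch of what such an export could look like. The `PortableProfile` format, its field names, and the `export_profile` / `import_profile` helpers are all hypothetical and chosen for illustration; they are not an existing standard or any platform's actual API.

```python
import json
from dataclasses import dataclass, field, asdict


@dataclass
class PortableProfile:
    """Hypothetical export format; field names are illustrative, not a standard."""
    handle: str
    display_name: str
    posts: list[str] = field(default_factory=list)
    following: list[str] = field(default_factory=list)  # outgoing edges of the social graph
    followers: list[str] = field(default_factory=list)  # incoming edges of the social graph


def export_profile(profile: PortableProfile) -> str:
    """Serialize the profile to a single JSON document the user can download."""
    return json.dumps(asdict(profile), indent=2, sort_keys=True)


def import_profile(payload: str) -> PortableProfile:
    """Rebuild the profile on another service from the exported file."""
    return PortableProfile(**json.loads(payload))


if __name__ == "__main__":
    original = PortableProfile(
        handle="@alice",
        display_name="Alice",
        posts=["Hello, world"],
        following=["@bob", "@carol"],
        followers=["@bob"],
    )
    exported = export_profile(original)
    restored = import_profile(exported)
    # The round trip should preserve both the content and the graph edges.
    print(restored == original)
```

The point of the sketch is that the file carries the social graph alongside the content: a competing service that can read it could reconnect a user with the same accounts, which is where the competitive pressure would come from.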

The metric for a successful community has shifted from high activity ("noise") to high trust. Members no longer want to sift through hundreds of discussions. They want a smaller, curated space where they can trust the expertise and intentions of the other people in the room.

The proliferation of AI agents will erode trust in mainstream social media, rendering it 'dead' for authentic connection. This will drive users toward smaller, intimate spaces where humanity is verifiable. A 'gradient of trust' may emerge, where social graphs are weighted by provable, real-world geofenced interactions, creating a new standard for online identity.

Avoid building your primary content presence on platforms like Medium or Quora. These platforms inevitably shift focus from serving users to serving advertisers and their own bottom line, ultimately degrading reach and control for creators. Use them as spokes, but always own your central content hub.

The next generation of social networks will be fundamentally different, built around the creation of functional software and AI models, not just media. The status game will shift from who has the best content to who can build the most useful or interesting tools for the community.

Historically, trust was first local (based on proximity), then institutional (placed in brands and contracts). Technology has enabled a new era of "distributed trust," in which we trust strangers through platforms like Airbnb and Uber. This fundamentally alters how reputation is built and where authority lies, moving it from top-down hierarchies to sideways networks.

While platforms spent years developing complex AI for content moderation, X implemented a simple transparency feature showing a user's country of origin. This immediately exposed foreign troll farms posing as domestic political actors, proving that simple, direct transparency can be more effective at combating misinformation than opaque, complex technological solutions.