Section 230 protects platforms from liability for third-party user content. Since generative AI tools create the content themselves, platforms like X could be held directly responsible. This is a critical, unsettled legal question that could dismantle a key legal shield for AI companies.

Related Insights

Unlike platforms such as YouTube, which merely host user-uploaded content, new generative AI platforms are directly involved in creating the content themselves. This fundamental shift from distributor to creator introduces a new level of brand and moral responsibility for the platform's output.

The problem with social media isn't free speech itself, but algorithms that elevate misinformation for engagement. A targeted solution is to remove Section 230 liability protection *only* for content that platforms algorithmically boost, holding them accountable for their editorial choices without engaging in broad censorship.

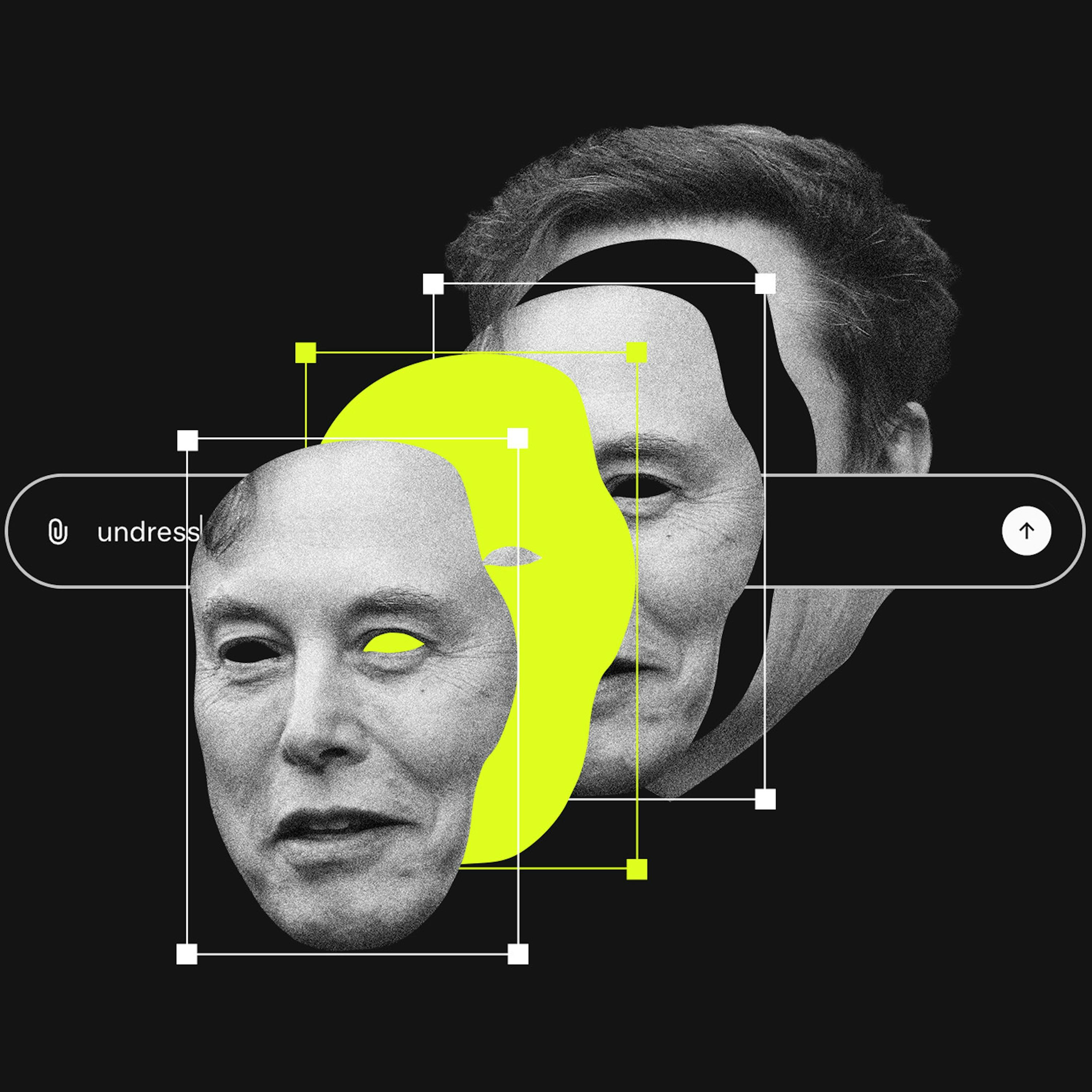

A lawsuit against xAI alleges Grok is "unreasonably dangerous as designed." This product-liability framing sidesteps Section 230 by targeting the product's inherent flaws rather than user content. The approach is becoming a primary legal vector for holding platforms accountable for AI-driven harms.

The core issue with Grok generating abusive material wasn't the creation of a new capability, but its seamless integration into X. This made a previously niche, high-effort malicious activity effortlessly available to millions of users on a major social media platform, dramatically scaling the potential for harm.

The legality of using copyrighted material in AI tools hinges on non-commercial, individual use. If a user uploads protected IP to a tool for personal projects, liability rests with the user, not the toolmaker, much as a scissors manufacturer isn't liable when someone infringes copyright by making a collage.

When an AI tool generates copyrighted material, don't assume the technology provider bears sole legal responsibility. The user who prompted the creation is also exposed to liability. As legal precedent lags, users must rely on their own ethical principles to avoid infringement.

AI companies argue their models' outputs are original creations to defend against copyright claims. This stance becomes a liability when the AI generates harmful material, as it positions the platform as a co-creator, undermining the Section 230 "neutral platform" defense used by traditional social media.

The core legal battle is a referendum on "fair use" for the AI era. If AI summaries are deemed "transformative" (a new work), it's a win for AI platforms. If they're "derivative" (a repackaging), it could force widespread content licensing deals.

While an AI model itself may not be infringing, its output could be. If you use AI-generated content for your business, you could face lawsuits from creators whose copyrighted material was used for training. Their legal argument is that your output is a "derivative work" of their original, protected content.

When AI Overviews aggregate and present information, the platform (Google) becomes the publisher, inheriting blame for inaccuracies. This is a fundamental shift from traditional search, where the source website was held responsible. This increases reputational and legal risk for AI-powered information curators.