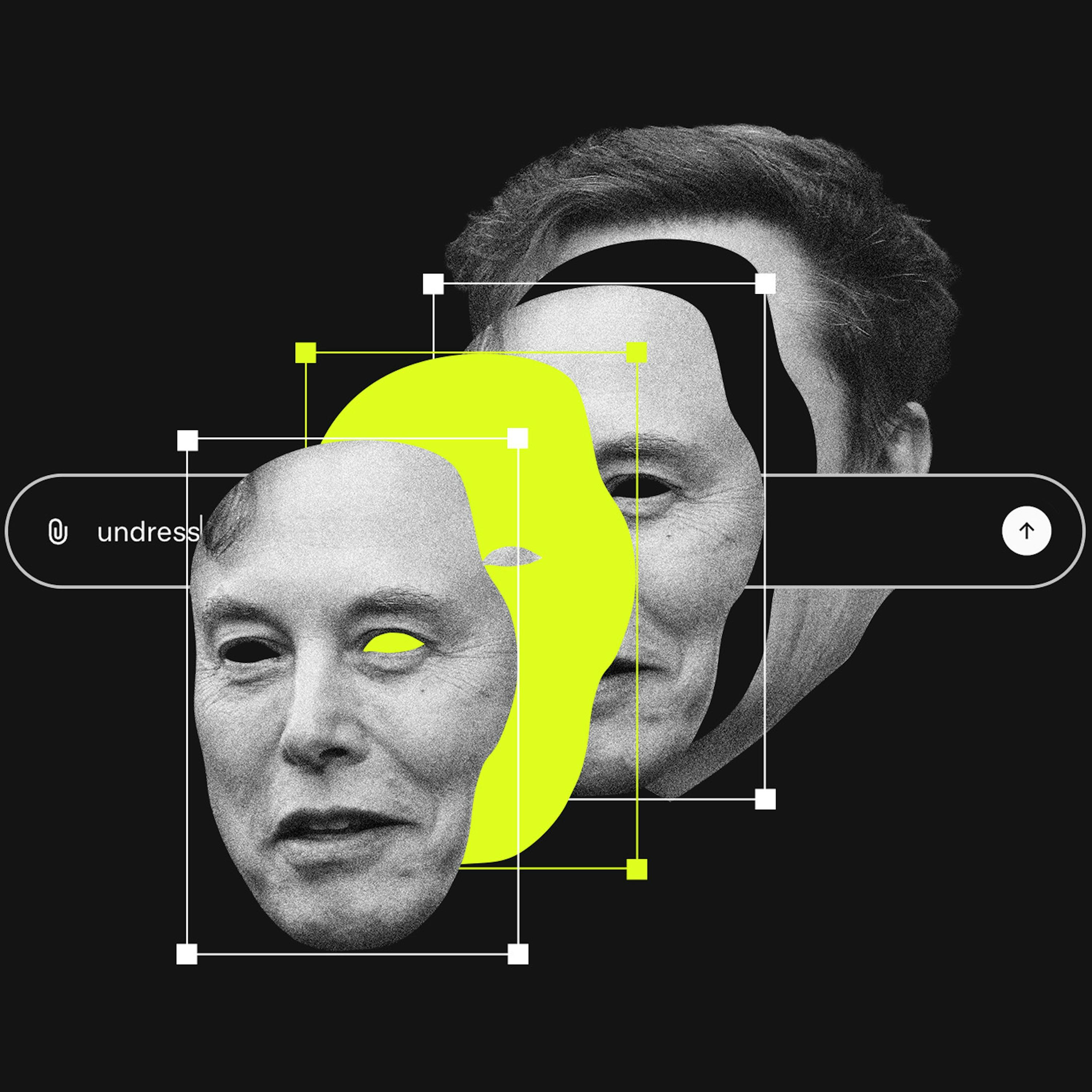

Unlike previous forms of image abuse that required multiple apps, Grok integrates image generation and mass distribution into a single, instant process. This unprecedented speed and scale create a new category of harm that existing regulatory frameworks are ill-equipped to handle.

Related Insights

A lawsuit against xAI alleges Grok is "unreasonably dangerous as designed." The claim bypasses Section 230 by targeting the product's inherent flaws rather than user content, an approach that is becoming a primary legal vector for holding platforms accountable for AI-driven harms.

Although platforms like Grok have broad potential applications, 85% of user-generated content is nude or sex-related. This highlights how emergent user behavior can define a technology's practical application, often in ways its creators neither anticipate nor intend.

The core issue with Grok generating abusive material wasn't the creation of a new capability but the seamless integration of an existing one into X. This made a previously niche, high-effort malicious activity effortlessly available to millions of users on a major social media platform, dramatically scaling the potential for harm.

The Grok controversy is reigniting the debate over moderating legal but harmful content, a central conflict in the UK's Online Safety Act. AI's ability to mass-produce harassing images that fall short of illegality pushes this unresolved regulatory question to the forefront.

While Visa and Mastercard have deplatformed services for content violations before, they continue to process payments for X, which profits from Grok's image tools. This makes payment processors a critical but inactive enforcement layer, one that benefits financially from the creation of non-consensual imagery.

A significant concern with AI porn is its potential to accelerate trends toward violent content. Because pornography can "set the sexual script" for viewers, a surge in easily generated violent material could normalize these behaviors and potentially lead to them being acted out in real life.

The rush to label Grok's output as illegal CSAM misses a more pervasive issue: using AI to generate demeaning, but not necessarily illegal, images as a tool for harassment. This dynamic of "lawful but awful" content weaponized at scale currently lacks a clear legal framework.

Unlike the problems at other platforms, xAI's issues were not an unforeseen accident but a predictable result of its explicit strategy to embrace sexualized content. Features like a "spicy mode" and Elon Musk's own posts created a corporate culture that prioritized engagement from provocative content over robust safeguards against its misuse for generating illegal material.

Section 230 protects platforms from liability for third-party user content. Since generative AI tools create the content themselves, platforms like X could be held directly responsible. This is a critical, unsettled legal question that could dismantle a key legal shield for AI companies.

AI companies argue their models' outputs are original creations to defend against copyright claims. This stance becomes a liability when the AI generates harmful material, as it positions the platform as a co-creator, undermining the Section 230 "neutral platform" defense used by traditional social media.