The system offers two tokenizer options: 25 Hz for high-detail audio and 12 Hz for faster generation. This practical approach acknowledges that different applications have different needs, prioritizing either computational efficiency or acoustic fidelity rather than forcing a one-size-fits-all solution.
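To make the trade-off concrete, here is a minimal sketch of how the two rates change sequence length. It assumes one token per frame, which the insight above does not state, and `token_count` is a hypothetical helper, not part of the system described.

```python
def token_count(duration_s: float, frame_rate_hz: float) -> int:
    """Tokens needed for a clip, assuming one token per tokenizer frame."""
    return round(duration_s * frame_rate_hz)


# Compare the two tokenizer rates on a 10-second clip.
for rate_hz in (25, 12):
    print(f"{rate_hz} Hz -> {token_count(10.0, rate_hz)} tokens")
# 25 Hz -> 250 tokens
# 12 Hz -> 120 tokens
```

Under that assumption, the 12 Hz option roughly halves the number of tokens the model has to generate for the same audio, which is where the speed gain would come from.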

Related Insights

Instead of debating AI's creative limits, The New Yorker pragmatically adopted it to solve a production bottleneck. AI-generated voiceovers make written pieces available for listening "well nigh immediately," expanding reach to audio-first consumers without compromising the human-led creative process of the articles themselves.

Voice-to-voice AI models promise more natural, low-latency conversations by processing audio directly. However, they are currently impractical for many high-stakes enterprise applications because their hallucination rate can be eight times higher than that of text-based systems.

While users can read text faster than they can listen, the Hux team chose audio as their primary medium. Reading requires a user's full attention, whereas audio is a passive medium that can be consumed concurrently with other activities like commuting or cooking, integrating more seamlessly into daily life.

By converting audio into discrete tokens, the system allows a large language model (LLM) to generate speech just as it generates text. This simplifies the architecture by reusing existing model capabilities, avoiding the need for an entirely separate speech synthesis system.
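One common way to let a single model handle both modalities is to place audio codes after the text vocabulary in a shared ID space. The sketch below illustrates that idea only; the vocabulary sizes and function names are hypothetical, and the source does not say the system works this way.

```python
from typing import List

TEXT_VOCAB_SIZE = 32_000      # hypothetical text vocabulary size
AUDIO_CODEBOOK_SIZE = 1_024   # hypothetical number of discrete audio codes


def audio_to_llm_ids(codes: List[int]) -> List[int]:
    """Shift audio codes past the text vocabulary so the IDs never collide."""
    for code in codes:
        if not 0 <= code < AUDIO_CODEBOOK_SIZE:
            raise ValueError(f"audio code out of range: {code}")
    return [TEXT_VOCAB_SIZE + code for code in codes]


def llm_ids_to_audio(ids: List[int]) -> List[int]:
    """Recover audio codes from model output, ignoring text-token IDs."""
    return [i - TEXT_VOCAB_SIZE for i in ids if i >= TEXT_VOCAB_SIZE]


codes = [0, 17, 1023]
ids = audio_to_llm_ids(codes)
print(ids)                    # [32000, 32017, 33023]
print(llm_ids_to_audio(ids))  # [0, 17, 1023]
```

With this layout the model's output layer simply grows by the number of audio codes, and a separate decoder turns the recovered codes back into a waveform.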

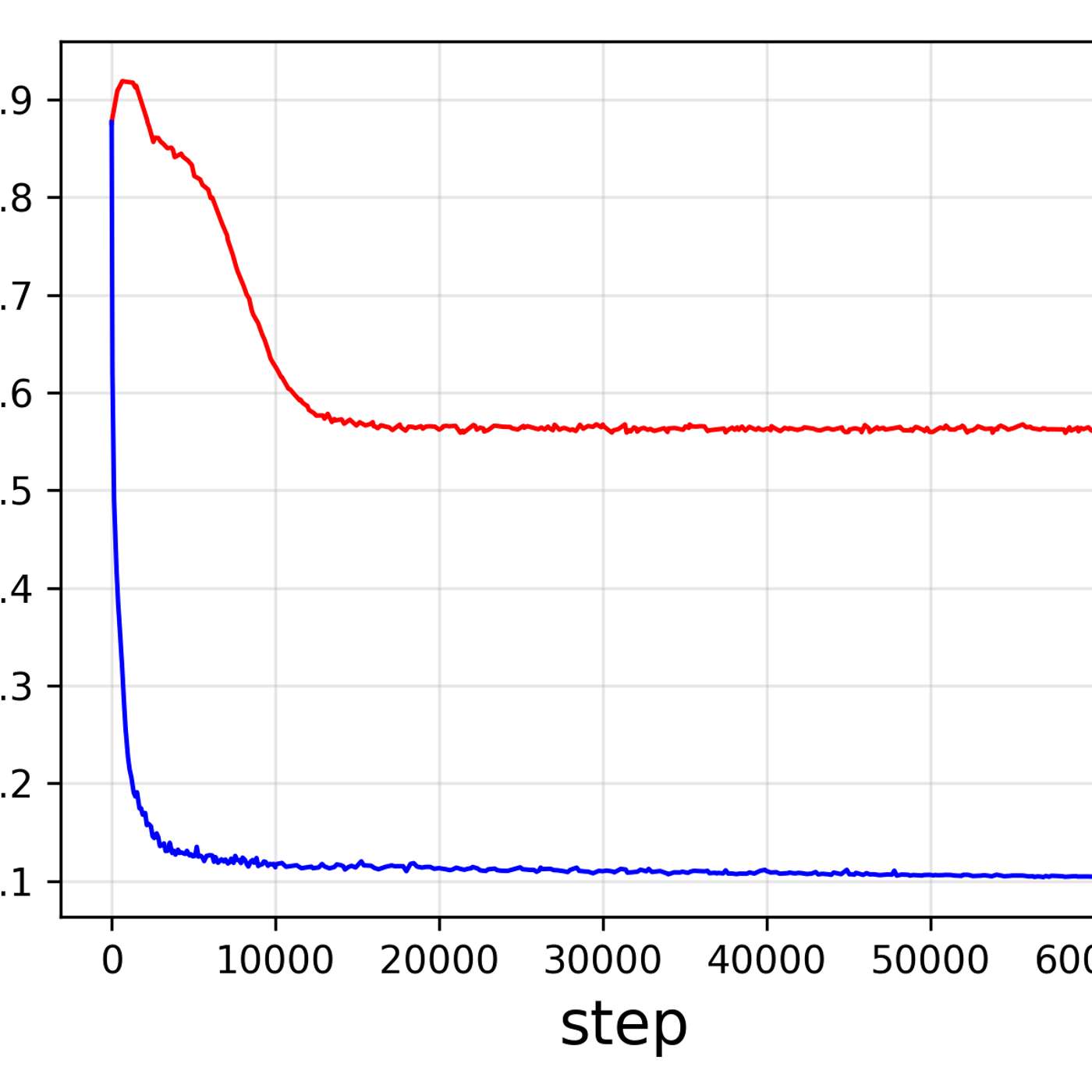

A unified tokenizer, while efficient, may not be optimal for both understanding and generation tasks. The ideal data representation for one task might differ from the other, potentially creating a performance bottleneck that specialized models would avoid.

Model architecture decisions directly impact inference performance. AI company Zyphra pre-selects target hardware and then chooses model parameters, such as a hidden dimension divisible by a large power of two, to align with how GPUs split up workloads, maximizing efficiency from day one.
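One way to read the hidden-dimension advice is as a check on how large a power of two divides a candidate size. The sketch below shows that check; the helper name and the candidate sizes are illustrative assumptions, not Zyphra's actual tooling or model dimensions.

```python
def largest_power_of_two_factor(n: int) -> int:
    """Return the largest power of two that divides n (n must be positive)."""
    if n <= 0:
        raise ValueError("n must be positive")
    # n & -n isolates the lowest set bit, which is that power of two.
    return n & -n


# Hypothetical candidate hidden dimensions.
for hidden_dim in (4096, 5120, 5000):
    print(hidden_dim, largest_power_of_two_factor(hidden_dim))
# 4096 4096
# 5120 1024
# 5000 8
```

A dimension like 5000 divides evenly only into very small power-of-two tiles, so it is more likely to leave hardware partially idle than 4096 or 5120 would.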

The primary driver for fine-tuning isn't cost but necessity. When applications like real-time voice demand low latency, developers are forced to use smaller models. These models often lack the quality needed for specific tasks, making fine-tuning a necessary step to achieve production-level performance.

The technical report introduces an innovative token-based architecture but lacks crucial validation. It omits comparative quality metrics, latency measurements, and human evaluation scores, leaving practitioners unable to assess its real-world performance against existing systems.

The binary distinction between "reasoning" and "non-reasoning" models is becoming obsolete. The more critical metric is now "token efficiency": a model's ability to use more tokens only when a task's difficulty requires it. This dynamic token usage is a key differentiator for cost and performance.

To analyze video cost-effectively, Tim McLear uses a cheap, fast model to generate captions for individual frames sampled every five seconds. He then packages all these low-level descriptions and the audio transcript and sends them to a powerful reasoning model. This model's job is to synthesize all the data into a high-level summary of the video.
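The two-stage pipeline described above can be sketched as follows. The two model calls are passed in as plain callables because the source does not name the models or APIs used; `caption_frame`, `summarize`, and the prompt wording are all hypothetical.

```python
from typing import Callable, List


def sample_times(duration_s: float, interval_s: float = 5.0) -> List[float]:
    """Timestamps (in seconds) at which a frame is sampled."""
    times: List[float] = []
    t = 0.0
    while t < duration_s:
        times.append(t)
        t += interval_s
    return times


def summarize_video(
    duration_s: float,
    transcript: str,
    caption_frame: Callable[[float], str],  # cheap, fast model (hypothetical)
    summarize: Callable[[str], str],        # powerful reasoning model (hypothetical)
    interval_s: float = 5.0,
) -> str:
    """Caption sampled frames cheaply, then ask one strong model for a summary."""
    captions = [
        f"[{t:.0f}s] {caption_frame(t)}"
        for t in sample_times(duration_s, interval_s)
    ]
    prompt = (
        "Frame captions:\n" + "\n".join(captions)
        + "\n\nAudio transcript:\n" + transcript
        + "\n\nSummarize the video."
    )
    return summarize(prompt)


# Usage with stand-in functions in place of real model calls.
result = summarize_video(
    duration_s=12.0,
    transcript="hello",
    caption_frame=lambda t: "a person at a desk",
    summarize=lambda prompt: prompt,  # echo the prompt to inspect it
)
print(result)
```

The expensive model is called once per video rather than once per frame, which is where the cost saving comes from.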
