By converting audio into discrete tokens, the system allows a large language model (LLM) to generate speech the same way it generates text. This simplifies the architecture by reusing the model's existing capabilities, avoiding the need for an entirely separate speech synthesis system.
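One way to picture this is a shared vocabulary in which audio codes sit after the text token IDs, so a single model can emit either kind. The sketch below is illustrative only: the vocabulary sizes, the offset scheme, and the function names are assumptions, not details from the system described.

```python
from typing import List, Tuple

# Hypothetical sizes, chosen only for illustration.
TEXT_VOCAB_SIZE = 1000      # text token IDs occupy 0..999
AUDIO_CODEBOOK_SIZE = 256   # audio codes occupy 1000..1255


def audio_code_to_token(code: int) -> int:
    """Map an audio codebook index into the shared vocabulary."""
    if not 0 <= code < AUDIO_CODEBOOK_SIZE:
        raise ValueError(f"audio code out of range: {code}")
    return TEXT_VOCAB_SIZE + code


def split_stream(tokens: List[int]) -> List[Tuple[str, List[int]]]:
    """Group a mixed token stream into consecutive text and audio segments.

    Audio segments are returned as codebook indices (offset removed), ready
    to hand to a separate audio decoder.
    """
    segments: List[Tuple[str, List[int]]] = []
    for tok in tokens:
        is_audio = tok >= TEXT_VOCAB_SIZE
        kind = "audio" if is_audio else "text"
        value = tok - TEXT_VOCAB_SIZE if is_audio else tok
        if segments and segments[-1][0] == kind:
            segments[-1][1].append(value)
        else:
            segments.append((kind, [value]))
    return segments
```

Under these assumptions the language model itself needs no speech-specific machinery: it only predicts the next ID, and a downstream decoder turns the audio segments back into a waveform.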

Related Insights

Voice-to-voice AI models promise more natural, low-latency conversations by processing audio directly. However, they are currently impractical for many high-stakes enterprise applications because their hallucination rate can be eight times higher than that of text-based systems.

While companies readily use models that process images, audio, and text inputs, the practical application of generating multimodal outputs (like video or complex graphics) remains rare in business. The primary output is still text or structured data, with synthesized speech being the main exception.

With the release of OpenAI's new video generation model, Sora 2, a surprising inversion has occurred. The generated video is so realistic that the accompanying AI-generated audio is now the more noticeable and identifiable artificial component, signaling a new frontier in multimedia synthesis.

A unified tokenizer, while efficient, may not be optimal for both understanding and generation tasks. The ideal data representation for one task might differ from the other, potentially creating a performance bottleneck that specialized models would avoid.

The technical report introduces an innovative token-based architecture but lacks crucial validation. It omits comparative quality metrics, latency measurements, and human evaluation scores, leaving practitioners unable to assess its real-world performance against existing systems.

Traditional video models process an entire clip at once, which introduces delays. Decart's Mirage model is autoregressive: it predicts only the next frame, based on the input stream and previously generated frames. This LLM-like approach is what enables its real-time, low-latency performance.
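The next-frame loop described above can be sketched in a few lines. This is a toy illustration, not the actual model: `predict_next` stands in for a learned network, frames are plain lists of floats, and the bounded history window is an assumption.

```python
from collections import deque
from typing import Callable, Iterable, Iterator, List

Frame = List[float]
Predictor = Callable[[Frame, List[Frame]], Frame]


def stream_frames(
    inputs: Iterable[Frame],
    predict_next: Predictor,
    context_len: int = 4,
) -> Iterator[Frame]:
    """Yield one output frame per input frame, as soon as it is available.

    Each prediction sees the current input frame plus a bounded window of
    previously generated frames, never the whole clip.
    """
    history: deque = deque(maxlen=context_len)
    for frame_in in inputs:
        frame_out = predict_next(frame_in, list(history))
        history.append(frame_out)
        yield frame_out


def toy_predictor(frame_in: Frame, history: List[Frame]) -> Frame:
    """Stand-in for a model: blend the input with the last generated frame."""
    if not history:
        return list(frame_in)
    prev = history[-1]
    return [0.5 * a + 0.5 * b for a, b in zip(frame_in, prev)]
```

Because the generator yields after every input frame, latency is bounded by the cost of one prediction rather than by the length of the clip.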

DSPy introduces a higher-level abstraction for programming LLMs, analogous to the jump from Assembly to C. It lets developers define program logic and intent, which is then "compiled" into optimal prompts, ensuring portability and maintainability across different models.

A common objection to voice AI is that it sounds robotic. However, current tools can clone voices, replicate human intonation and cadence, and even use slang. The speaker claims that 97% of people outside the AI industry cannot tell the difference, making it a viable front-line tool for customer interaction.

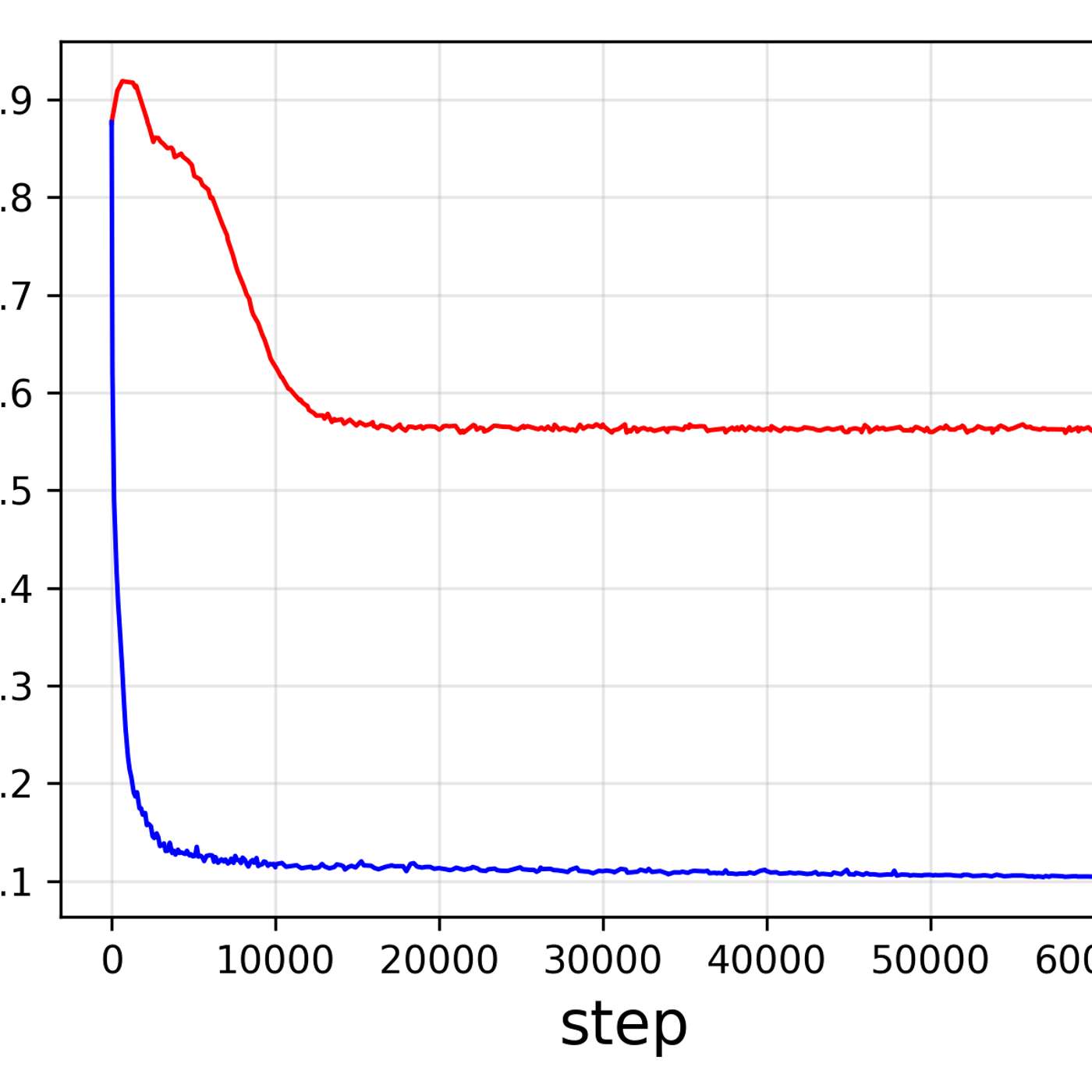

The system offers two tokenizer options: 25 Hz for high-detail audio and 12 Hz for faster generation. This practical approach acknowledges that different applications have different needs, prioritizing either computational efficiency or acoustic fidelity rather than forcing a one-size-fits-all solution.
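To make the trade-off concrete, the arithmetic below compares how many tokens each rate produces for the same clip. It assumes one token per frame (a single codebook), which the source does not state; a multi-codebook tokenizer would multiply the counts accordingly.

```python
import math


def tokens_for(duration_s: float, frame_rate_hz: float, codebooks: int = 1) -> int:
    """Number of audio tokens for a clip, assuming `codebooks` tokens per frame."""
    if duration_s < 0 or frame_rate_hz <= 0 or codebooks < 1:
        raise ValueError("duration must be >= 0, rate > 0, codebooks >= 1")
    return math.ceil(duration_s * frame_rate_hz) * codebooks


# Ten seconds of speech at each tokenizer rate.
high_detail = tokens_for(10, 25)   # 250 tokens
fast = tokens_for(10, 12)          # 120 tokens
```

Since an autoregressive model generates one token per step, the 12 Hz option needs roughly half as many generation steps for the same audio duration, at the cost of a coarser representation.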

Alexa's architecture is model-agnostic, drawing on over 70 different models. This lets the Alexa team use the best tool for any given task and focus on the customer's goal rather than on the underlying model brand, which is where most competitors put their attention.
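A model-agnostic design of this kind is often built around a routing layer that maps a task to whichever model handles it best. The sketch below is a generic illustration, not Alexa's implementation: the `ModelRouter` class, the task names, and the handlers are all hypothetical.

```python
from typing import Callable, Dict

Handler = Callable[[str], str]


class ModelRouter:
    """Dispatch each request to the handler registered for its task type."""

    def __init__(self, default: Handler) -> None:
        self._routes: Dict[str, Handler] = {}
        self._default = default

    def register(self, task: str, handler: Handler) -> None:
        """Associate a task type with the model (handler) that should serve it."""
        self._routes[task] = handler

    def handle(self, task: str, request: str) -> str:
        """Route the request, falling back to the default handler."""
        handler = self._routes.get(task, self._default)
        return handler(request)


# Hypothetical handlers standing in for calls to different models.
router = ModelRouter(default=lambda req: f"general:{req}")
router.register("timer", lambda req: f"timer:{req}")
```

Callers only name the task, so a handler can be swapped for a different model without changing any calling code.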
