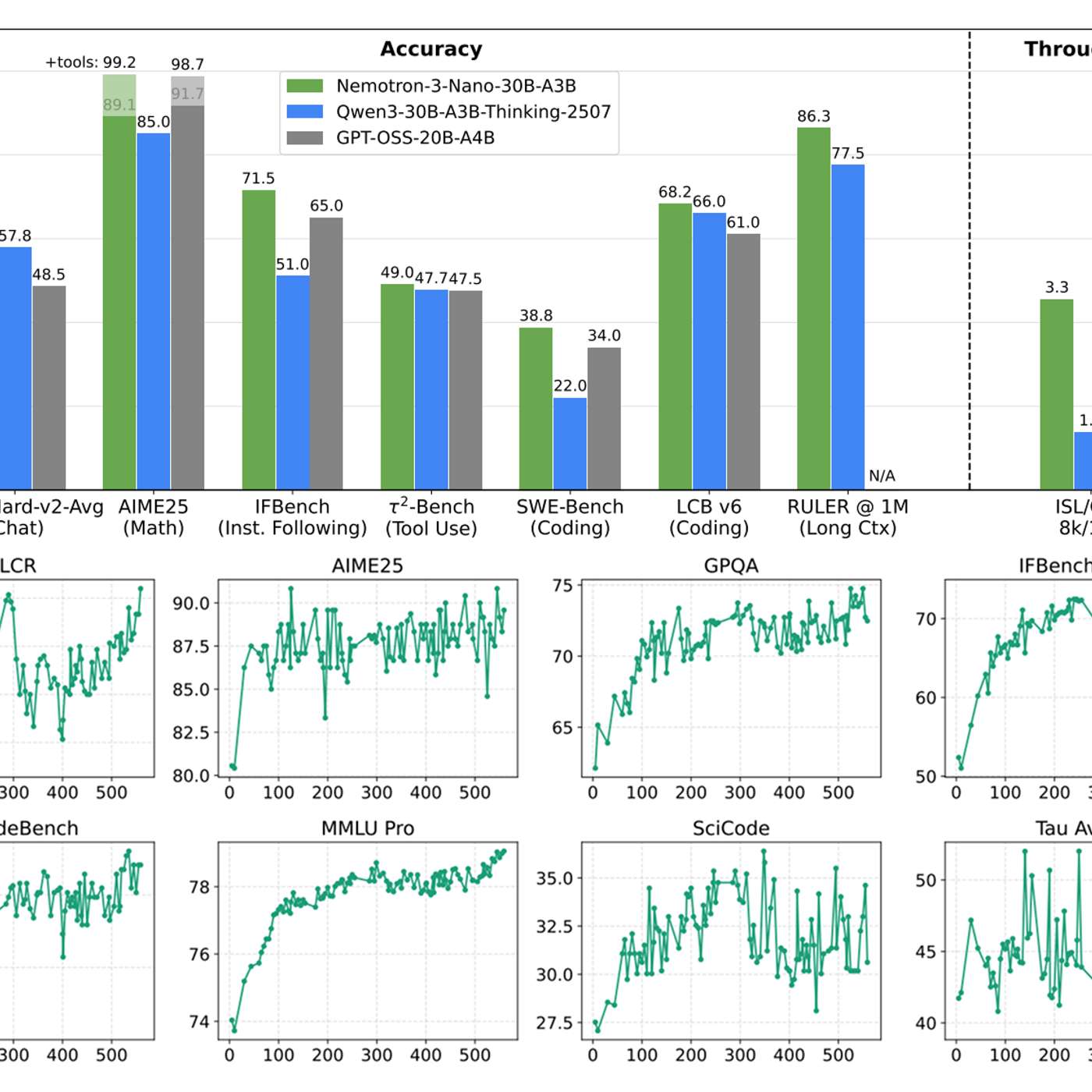

Standard Retrieval-Augmented Generation (RAG) systems often fail because they treat complex documents as pure text, missing crucial context within charts, tables, and layouts. The solution is to use vision language models for embedding and re-ranking, making visual and structural elements directly retrievable and improving accuracy.

Related Insights

For enterprise AI, standard RAG struggles with granular permissions and relationship-based questions. Atlassian's "teamwork graph" maps entities like teams, tasks, and documents, along with the relationships between them. This allows it to answer complex queries like "What did my team do last week?", a task where simple vector search would fail because it just returns the top-matching documents.
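To illustrate why a relationship-based question needs more than similarity search, here is a minimal sketch of a graph-style lookup. It is not Atlassian's implementation: the `MEMBERSHIP`, `ACTIVITY`, and `VIEWERS` structures, all names in them, and the `team_activity` function are hypothetical and exist only for this example.

```python
from datetime import date

# Hypothetical toy graph: who belongs to which team.
MEMBERSHIP = {
    "team-search": {"alice", "bob"},
    "team-billing": {"carol"},
}

# Hypothetical activity edges: actor -> verb -> item, with a date.
ACTIVITY = [
    {"actor": "bob", "verb": "completed", "item": "TASK-1", "day": date(2024, 5, 8)},
    {"actor": "bob", "verb": "edited", "item": "DOC-9", "day": date(2024, 5, 9)},
    {"actor": "carol", "verb": "completed", "item": "TASK-2", "day": date(2024, 5, 8)},
    {"actor": "alice", "verb": "edited", "item": "DOC-3", "day": date(2024, 4, 30)},
]

# Hypothetical permissions: which users may see each item.
VIEWERS = {
    "TASK-1": {"alice", "bob"},
    "DOC-9": {"bob"},
    "TASK-2": {"carol"},
    "DOC-3": {"alice"},
}


def team_activity(user, week_start, week_end):
    """Answer "what did my team do in this window?" by walking relationships."""
    # Step 1: resolve "my team" through membership edges.
    teams = [team for team, members in MEMBERSHIP.items() if user in members]
    teammates = set().union(*(MEMBERSHIP[team] for team in teams))
    # Step 2: follow activity edges, filtering by date and by permissions.
    return [
        (event["actor"], event["verb"], event["item"])
        for event in ACTIVITY
        if event["actor"] in teammates
        and week_start <= event["day"] <= week_end
        and user in VIEWERS.get(event["item"], set())
    ]


result = team_activity("alice", date(2024, 5, 6), date(2024, 5, 12))
```

In this toy data, the query resolves the asker's team first, then filters activity by time window and by what the asker is allowed to see. None of those three steps is expressible as a nearest-neighbor lookup over document embeddings.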

To move beyond keyword search in their media archive, Tim McLear's system generates two vector embeddings for each asset: one from the image thumbnail and another from its AI-generated text description. Fusing these enables a powerful semantic search that understands visual similarity and conceptual relationships, not just exact text matches.
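The source does not say how the two embeddings are fused, so the following is only one plausible approach: a weighted sum of cosine similarities (late fusion). It assumes the query, image, and description vectors share one embedding space; the `fused_score` and `search` functions, the `image_weight` parameter, and the sample vectors are all hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def fused_score(query_vec, image_vec, text_vec, image_weight=0.5):
    """Blend visual similarity and description similarity into one score."""
    return (image_weight * cosine(query_vec, image_vec)
            + (1 - image_weight) * cosine(query_vec, text_vec))


def search(query_vec, assets, top_k=3, image_weight=0.5):
    """Rank assets by the fused score and return the top asset ids."""
    scored = [
        (fused_score(query_vec, a["image_vec"], a["text_vec"], image_weight), a["id"])
        for a in assets
    ]
    scored.sort(reverse=True)
    return [asset_id for _, asset_id in scored[:top_k]]


# Toy 2-dimensional vectors standing in for real embeddings.
assets = [
    {"id": "sunset.jpg", "image_vec": [1.0, 0.0], "text_vec": [0.9, 0.1]},
    {"id": "city.jpg", "image_vec": [0.0, 1.0], "text_vec": [0.1, 0.9]},
]
top = search([1.0, 0.0], assets, top_k=1)
```

Adjusting `image_weight` shifts the ranking toward visual similarity or toward the conceptual content of the description.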

The vast majority of enterprise information has long been trapped in formats like PDFs and other unstructured documents, leaving it largely unusable. AI, through techniques like RAG and automated structure extraction, is unlocking this data for the first time, making it queryable and enabling new large-scale analysis.

Current LLMs abstract language into discrete tokens, losing rich information like font, layout, and spatial arrangement. A "pixel maximalist" view argues that processing visual representations of text (as humans do) is a less lossy, more general approach that captures the physical manifestation of language in the world.

Retrieval-Augmented Generation (RAG) uses vector search to find documents relevant to a user's query. This retrieved context is then fed to a Large Language Model (LLM), which is directed to base its response on the provided data, significantly reducing the risk of "hallucinations."
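The retrieve-then-generate flow can be sketched in a few lines. This is a minimal illustration: the toy three-dimensional vectors stand in for real embeddings, the `retrieve` and `build_prompt` helpers are hypothetical, and the final call to an LLM is left out.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query_vec, index, top_k=2):
    """Vector search: return the top_k documents most similar to the query."""
    ranked = sorted(index, key=lambda d: cosine(query_vec, d["vec"]), reverse=True)
    return ranked[:top_k]


def build_prompt(question, docs):
    """Place retrieved text in the prompt and tell the model to stay within it."""
    context = "\n".join(f"- {d['text']}" for d in docs)
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Toy index; in practice the vectors come from an embedding model.
index = [
    {"text": "Refunds are processed within 5 business days.", "vec": [0.9, 0.1, 0.0]},
    {"text": "Our office is closed on public holidays.", "vec": [0.0, 0.2, 0.9]},
    {"text": "Refund requests require an order number.", "vec": [0.8, 0.3, 0.1]},
]
query_vec = [1.0, 0.2, 0.0]  # assumed embedding of the question
prompt = build_prompt("How long do refunds take?", retrieve(query_vec, index))
```

The resulting `prompt` contains only the two refund-related passages, which is the grounding that reduces the room for invented answers.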

Teams often agonize over which vector database to use for their Retrieval-Augmented Generation (RAG) system. However, the most significant performance gains come from superior data preparation, such as optimizing chunking strategies, adding contextual metadata, and rewriting documents into a Q&A format.
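As a rough sketch of two of these preparation steps, the code below splits text into overlapping word-based chunks and prefixes each chunk with contextual metadata. The chunk sizes, the `chunk_words` and `prepare` helpers, and the "title > section" prefix format are assumptions chosen for illustration, not recommended settings.

```python
def chunk_words(text, size=50, overlap=10):
    """Split text into chunks of `size` words, each sharing `overlap` words
    with the previous chunk so sentences cut at a boundary keep some context."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be non-negative and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def prepare(doc_title, section, text, size=50, overlap=10):
    """Attach contextual metadata to every chunk before it is embedded."""
    return [
        {
            # The prefix lets an isolated chunk still say where it came from.
            "text": f"{doc_title} > {section}: {chunk}",
            "title": doc_title,
            "section": section,
            "position": i,
        }
        for i, chunk in enumerate(chunk_words(text, size, overlap))
    ]


records = prepare("Handbook", "Leave", "a b c d e f g h", size=4, overlap=2)
```

Because each record carries its title and section, a retrieved chunk remains interpretable on its own, independent of which vector database stores it.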

Image models like Google's Nano Banana Pro can now connect to live search to ground their output in real-world facts. This breakthrough allows them to generate dense, text-heavy infographics with coherent, accurate information, a task previously impossible for image models, which notoriously struggled with rendering readable text.

Current multimodal models shoehorn visual data into a 1D text-based sequence. True spatial intelligence is different. It requires a native 3D/4D representation to understand a world governed by physics, not just human-generated language. This is a foundational architectural shift, not an extension of LLMs.

New image models like Google's Nano Banana Pro can transform lengthy articles and research papers into detailed whiteboard diagrams. This represents a powerful new form of information compression, moving beyond simple text summarization to a complete modality shift for easier comprehension and knowledge transfer.

Classic RAG involves a single data retrieval step. Its evolution, "agentic retrieval," allows an AI to perform a series of conditional fetches from different sources (APIs, databases). This enables the handling of complex queries where each step informs the next, mimicking a research process.
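The difference from a single retrieval step can be sketched as a loop in which each fetch is chosen from what earlier fetches returned. Everything here is hypothetical: the three lookup functions stand in for real APIs or databases, and `next_step` is a hand-written stand-in for the planning decision an AI model would make.

```python
def lookup_order(state):
    """Hypothetical orders API."""
    orders = {"A100": {"customer_id": "C7", "status": "delayed"}}
    return {"order": orders.get(state["order_id"])}


def lookup_customer(state):
    """Hypothetical customer database, keyed by the id found in the order."""
    customers = {"C7": {"name": "Dana", "tier": "gold"}}
    return {"customer": customers.get(state["order"]["customer_id"])}


def lookup_policy(state):
    """Hypothetical policy store, keyed by the tier found on the customer."""
    policies = {"gold": "Delayed gold-tier orders get free expedited shipping."}
    return {"policy": policies.get(state["customer"]["tier"])}


def next_step(state):
    """Stand-in for the model's planning: pick the next fetch from what is known."""
    if "order" not in state:
        return lookup_order
    if state["order"] is None:
        return None  # nothing found, so no follow-up fetch makes sense
    if state["order"]["status"] == "delayed" and "customer" not in state:
        return lookup_customer
    if state.get("customer") and "policy" not in state:
        return lookup_policy
    return None


def agentic_retrieve(order_id, max_steps=5):
    """Run conditional fetches until the planner stops or the step budget ends."""
    state = {"order_id": order_id}
    trace = []
    for _ in range(max_steps):
        step = next_step(state)
        if step is None:
            break
        state.update(step(state))
        trace.append(step.__name__)
    return state, trace


state, trace = agentic_retrieve("A100")
```

The `max_steps` cap is a deliberate design choice in this sketch: a loop driven by model decisions needs a budget so it cannot fetch indefinitely.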