While government regulation might seem simpler, Susan Wojcicki suggests it would be too slow to address rapidly evolving threats like new COVID-19 conspiracies. She argues that a private company can make more detailed, fine-grained policy decisions much faster than a legislative body could, framing self-regulation as a matter of speed and specificity.

Related Insights

YouTube's CEO justifies stricter past policies by citing the extreme uncertainty of early 2020 (e.g., 5G tower conspiracies). He implies that moderation is not static but adapts to the societal context. Today's more open policies reflect the world's changed understanding, which suggests the shift is driven by timing and circumstances rather than ideology.

YouTube's content rules change weekly without warning. A sudden demonetization or age-restriction can cripple an episode's reach after it's published, highlighting the significant platform risk creators face when distribution is controlled by a third party with unclear policies.

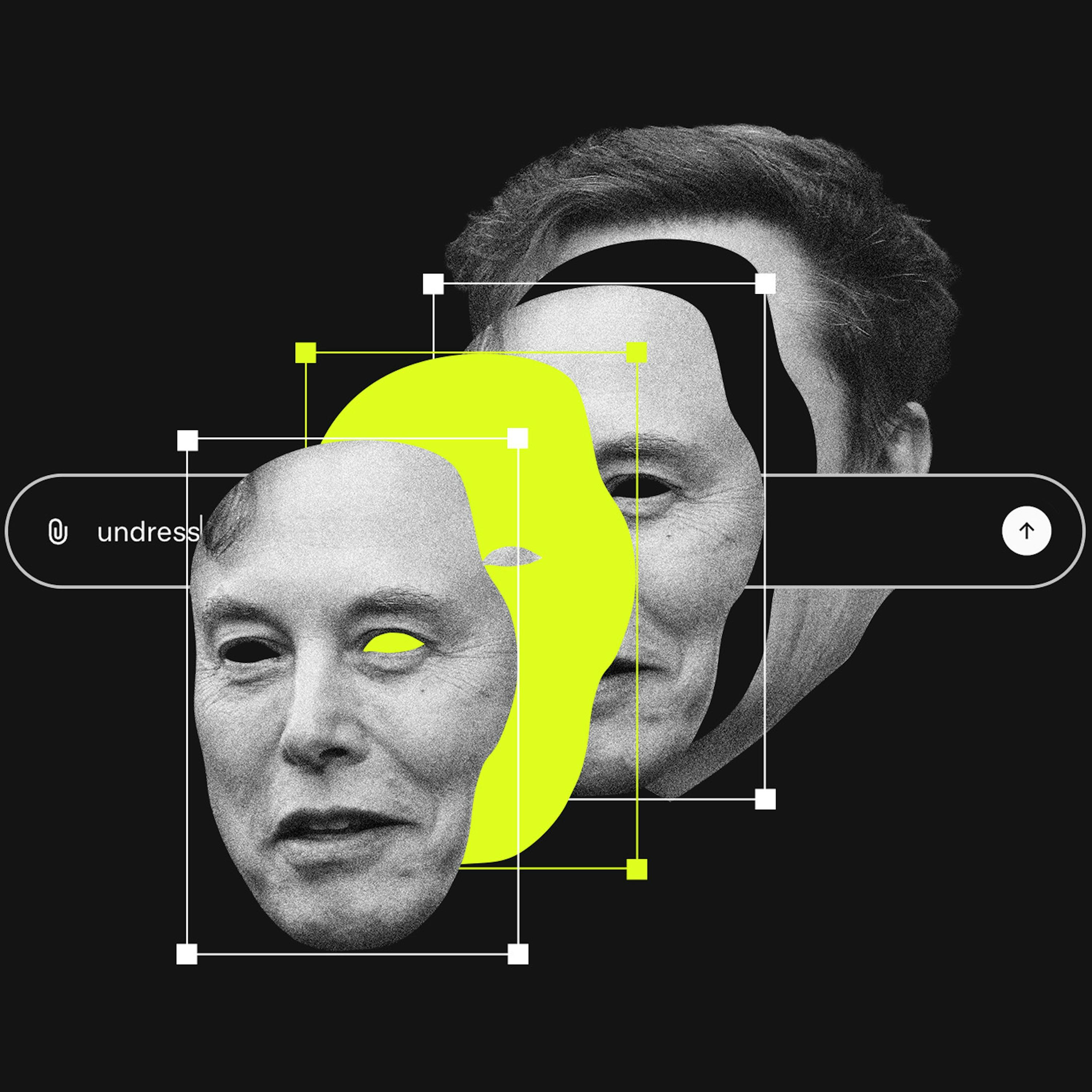

The problem with social media isn't free speech itself, but algorithms that elevate misinformation for engagement. A targeted solution is to remove Section 230 liability protection *only* for content that platforms algorithmically boost, holding them accountable for their editorial choices without engaging in broad censorship.

When asked about censorship in the same context as Twitter and Facebook, CEO Neal Mohan deliberately reframes YouTube's identity. He asserts that YouTube has more in common with streaming platforms than with social media feeds. This is a strategic move to distance the brand from social media's controversies and align it with the entertainment industry.

Effective content moderation is more than just removing violative videos. YouTube employs a "grayscale" approach. For borderline content, it removes the two primary incentives for creators: revenue (by demonetizing the content) and audience growth (by excluding it from recommendations). This strategy aims to make harmful content unviable on the platform.

Like companies in the financial sector, tech companies are increasingly pressured to act as a de facto arm of the government, particularly on issues like censorship. This has led to a power struggle, with some tech leaders now publicly pre-committing to resist future government requests.

As major platforms abdicate trust and safety responsibilities, demand grows for user-centric solutions. This fuels interest in decentralized networks and "middleware" that empower communities to set their own content standards, a move away from centralized, top-down platform moderation.

Tyler Cowen's experience actively moderating his "Marginal Revolution" blog has made him more tolerant of large tech platforms removing content. Having seen firsthand that curation is necessary to improve discourse, he views platform moderation not as censorship but as a private owner's prerogative to maintain quality.

The existence of internal teams like Anthropic's "Societal Impacts Team" serves a dual purpose. Beyond their stated mission, they function as a strategic tool for AI companies to demonstrate self-regulation, thereby creating a political argument that stringent government oversight is unnecessary.

While both the Biden administration's pressure on YouTube and Trump's threats against ABC are anti-free speech, the former is more insidious. Surreptitious, behind-the-scenes censorship is harder to identify and fight publicly, making it a greater threat to open discourse than loud, transparent attacks that can be openly condemned.