The C2PA standard's effectiveness depends on participation across the entire ecosystem, from capture (cameras) to distribution (platforms). When major players such as Apple and X refuse to join, they leave fatal gaps in that chain: content cannot be verified end to end, the system as a whole becomes ineffective, and the network effect it needs never takes hold.

Related Insights

Major tech companies publicly champion their support for the C2PA standard to appear proactive about the deepfake problem. However, this support is often superficial, serving as a "meritless badge" or PR move while they avoid the hard work of robust implementation and ecosystem-wide collaboration.

A novel framework rates the tech giants like films, based on their content policies: Apple is PG (no adult content on iOS), Microsoft is G (professional focus), Google is PG-13 (YouTube content), and Amazon is NC-17 (Kindle erotica). The ratings make each company's distinct brand position on sensitive content easy to compare.

The promise of the smart home has failed, leading to a "big regression" in which technology complicates simple tasks. The cause is a flood of mid-tier, proprietary devices that lack polish and interoperability. The market is a barbell: the middle tier is where the experience breaks down, and only a fully integrated ecosystem can deliver a superior experience.

Dominant tech platforms lack any market incentive to open their ecosystems. Tim Berners-Lee argues that because market forces alone have failed, government intervention is the only viable way to mandate interoperability and break down digital walled gardens.

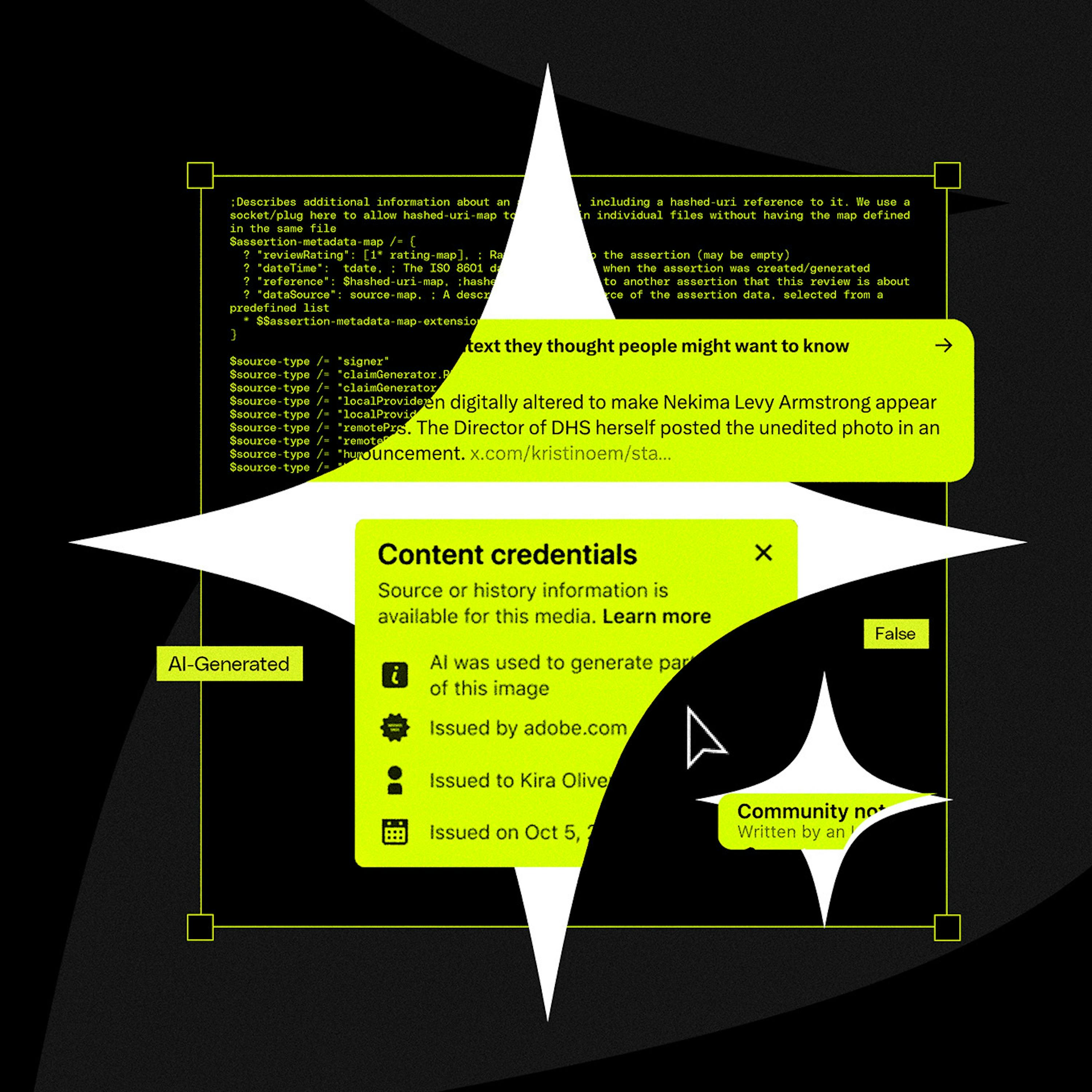

A critical failure point for C2PA is that social media platforms themselves can inadvertently strip the crucial metadata during the routine processing (such as resizing and re-encoding) that their image and video pipelines apply to uploads. This technical flaw breaks the chain of provenance before the content is ever displayed to users.
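The sketch below illustrates the mechanism in Python under simplifying assumptions. In JPEG files, C2PA manifests are carried in APP11 marker segments, so a processing step that rebuilds the file from only the segments it needs to decode pixels silently discards them. The byte string is a toy built for illustration (dummy tables, a placeholder payload instead of a real JUMBF manifest, not a decodable image), and `strip_metadata` is a hypothetical stand-in for a platform pipeline, not any specific platform's code.

```python
import struct

SOI = b"\xff\xd8"        # start-of-image marker (no length field)
EOI = b"\xff\xd9"        # end-of-image marker
SOS = 0xDA               # start-of-scan: compressed pixel data follows
APP11 = 0xEB             # segment type that carries C2PA manifests in JPEG
DECODE_ESSENTIAL = {0xDB, 0xC0, 0xC4}  # DQT, SOF0, DHT: needed to decode pixels


def make_segment(marker: int, payload: bytes) -> bytes:
    """Build a marker segment: 0xFF, marker byte, 2-byte big-endian length
    (which counts itself but not the marker), then the payload."""
    return bytes([0xFF, marker]) + struct.pack(">H", len(payload) + 2) + payload


def split_segments(data: bytes):
    """Split a JPEG into header segments and the tail starting at SOS."""
    if not data.startswith(SOI):
        raise ValueError("not a JPEG: missing SOI marker")
    pos, segments = 2, []
    while pos < len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = data[pos + 1]
        if marker == SOS:
            return segments, data[pos:]  # scan data is opaque to this sketch
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        end = pos + 2 + length
        segments.append((marker, data[pos:end]))
        pos = end
    return segments, b""


def strip_metadata(data: bytes) -> bytes:
    """Hypothetical pipeline step: keep only decode-essential segments."""
    segments, tail = split_segments(data)
    kept = b"".join(seg for marker, seg in segments if marker in DECODE_ESSENTIAL)
    return SOI + kept + tail


def has_c2pa_segment(data: bytes) -> bool:
    segments, _tail = split_segments(data)
    return any(marker == APP11 for marker, _seg in segments)


# Toy file: dummy tables and a placeholder APP11 payload, not a decodable image.
original = (
    SOI
    + make_segment(0xE0, b"JFIF\x00")                # APP0 header
    + make_segment(APP11, b"placeholder manifest")   # where C2PA data would sit
    + make_segment(0xDB, bytes(65))                  # DQT
    + make_segment(0xC0, bytes(15))                  # SOF0
    + make_segment(SOS, bytes(10)) + b"\x01\x02\x03" + EOI
)

print(has_c2pa_segment(original))                  # True
print(has_c2pa_segment(strip_metadata(original)))  # False
```

Real pipelines often go further, decoding to pixels and re-encoding from scratch, which drops every metadata segment unless the platform deliberately carries provenance across. Because re-encoding also changes the bytes, the original manifest's hash would no longer match, so the platform would need to add a new manifest of its own.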

Politician Alex Bores argues that expecting humans to spot increasingly sophisticated deepfakes is a losing battle. The real solution, in his view, is a universal metadata standard (like C2PA) that cryptographically proves whether content is real or AI-generated, so that unverified content becomes inherently suspect, much as an insecure HTTP website is today.
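A minimal Python sketch of the idea, with a loud assumption: C2PA signs manifests with certificate-backed public-key signatures, whereas this sketch substitutes a shared-secret HMAC from the standard library so it runs without third-party packages. The names `sign`, `verify`, `SIGNING_KEY`, and the `generator` field are hypothetical. It shows the three outcomes the HTTP analogy relies on: intact signed content verifies, altered content fails, and content with no claim at all is simply unverified.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import Optional

# ASSUMPTION: a shared-secret HMAC stands in for C2PA's certificate-based
# public-key signatures so that this sketch uses only the standard library.
SIGNING_KEY = b"hypothetical-signing-key"


@dataclass
class Asset:
    content: bytes
    claim: Optional[dict] = None  # None models content with no provenance data


def _mac(content_hash: str, generator: str) -> str:
    message = f"{content_hash}|{generator}".encode()
    return hmac.new(SIGNING_KEY, message, hashlib.sha256).hexdigest()


def sign(content: bytes, generator: str) -> Asset:
    """Bind the content's hash and a declared generator under one signature."""
    content_hash = hashlib.sha256(content).hexdigest()
    claim = {"hash": content_hash, "generator": generator,
             "signature": _mac(content_hash, generator)}
    return Asset(content, claim)


def verify(asset: Asset) -> str:
    if asset.claim is None:
        return "unverified"  # like plain HTTP: nothing proven either way
    claim = asset.claim
    if not hmac.compare_digest(_mac(claim["hash"], claim["generator"]),
                               claim["signature"]):
        return "tampered"    # the claim itself was altered
    if hashlib.sha256(asset.content).hexdigest() != claim["hash"]:
        return "tampered"    # the content no longer matches the signed hash
    return "verified: " + claim["generator"]


photo = sign(b"raw sensor bytes", "camera")
fake = sign(b"generated pixels", "ai-model")
edited = Asset(b"raw sensor bytes, retouched", photo.claim)
stripped = Asset(photo.content)  # same bytes, provenance data lost

for name, asset in [("photo", photo), ("fake", fake),
                    ("edited", edited), ("stripped", stripped)]:
    print(name, "->", verify(asset))
```

Note that `verify` reports whatever generator the signer declared: the cryptography proves the claim is intact and bound to the content, not that the declaration is honest.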

Unlike in the early iPhone era, developers are hesitant to build for new hardware such as the Apple Vision Pro without a proven audience. They now expect platform creators to de-risk development by first demonstrating a large user base, which shifts the burden of building the market entirely onto the hardware maker.

While camera brands like Sony and Nikon support C2PA on new models, the standard's adoption is crippled by the inability to update firmware on millions of existing professional cameras. This means the vast majority of photos taken will lack provenance data for years, undermining the entire system.

C2PA was designed to track a file's provenance (creation, edits), not specifically to detect AI. This fundamental mismatch in purpose is why it's an ineffective solution for the current deepfake crisis, as it wasn't built to be a simple binary validator of reality.
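To make the distinction concrete, here is a hypothetical Python model of a provenance chain in which each manifest records one action and links to the manifest of the asset it was derived from. The action labels, tool names, and the `involves_ai` helper are illustrative assumptions, not C2PA's actual data model. The chain answers "what happened to this file"; turning that history into a yes/no AI verdict requires the verifier to decide which tools count, and a file with no manifest yields no answer at all.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional, Set


@dataclass
class Manifest:
    action: str                          # illustrative label, e.g. "cropped"
    tool: str                            # device or software that acted
    content_hash: str                    # binds the manifest to the resulting bytes
    parent: Optional["Manifest"] = None  # manifest of the ingredient asset


def record(content: bytes, action: str, tool: str,
           parent: Optional[Manifest] = None) -> Manifest:
    return Manifest(action, tool, hashlib.sha256(content).hexdigest(), parent)


def history(manifest: Optional[Manifest]) -> list:
    """Walk back to the original capture and return steps oldest-first."""
    steps = []
    while manifest is not None:
        steps.append(f"{manifest.action} by {manifest.tool}")
        manifest = manifest.parent
    return steps[::-1]


def involves_ai(manifest: Optional[Manifest], ai_tools: Set[str]) -> Optional[bool]:
    """A yes/no verdict is the verifier's interpretation of the history:
    the verifier chooses ai_tools, and no manifest means no answer (None)."""
    if manifest is None:
        return None
    while manifest is not None:
        if manifest.tool in ai_tools:
            return True
        manifest = manifest.parent
    return False


shot = record(b"pixels v1", "created", "camera")
crop = record(b"pixels v2", "cropped", "photo-editor", parent=shot)
fill = record(b"pixels v3", "edited", "generative-fill", parent=crop)

print(history(fill))
# ['created by camera', 'cropped by photo-editor', 'edited by generative-fill']
print(involves_ai(fill, {"generative-fill"}), involves_ai(crop, {"generative-fill"}))
# True False
print(involves_ai(None, {"generative-fill"}))  # None
```

A verifier that counts only fully synthetic images as AI would leave `generative-fill` out of `ai_tools` and get a different verdict from the same chain, which is the gap between provenance tracking and AI detection described above.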

Companies like Google (YouTube) and Meta (Instagram) face a fundamental conflict: they invest billions in AI while also running the platforms that would display AI labels. Aggressively labeling AI content would devalue their own technology investments, which gives them a powerful incentive to implement labeling slowly and ineffectively.