While camera brands like Sony and Nikon support C2PA on new models, the standard's adoption is crippled by the inability to update firmware on millions of existing professional cameras. This means the vast majority of photos taken will lack provenance data for years, undermining the entire system.

Related Insights

As AI makes it easy to fake video and audio, blockchain's immutable and decentralized ledger offers a solution. Creators can 'mint' their original content, creating a verifiable record of authenticity that nobody, not even governments or corporations, can alter.

Major tech companies publicly champion their support for the C2PA standard to appear proactive about the deepfake problem. However, this support is often superficial, serving as a "meritless badge" or PR move while they avoid the hard work of robust implementation and ecosystem-wide collaboration.

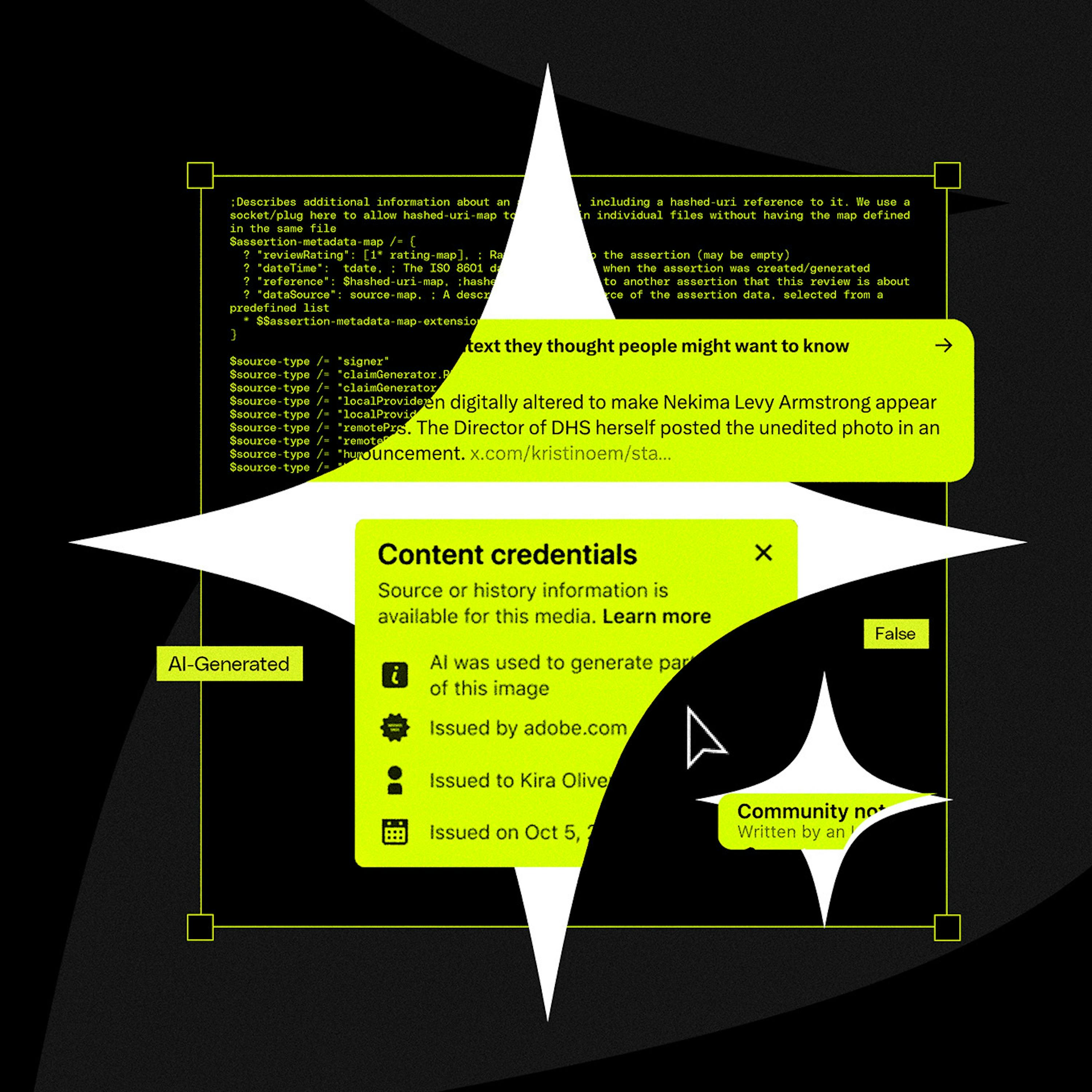

The C2PA standard's effectiveness depends on a complete ecosystem of participation, from capture (cameras) to distribution (platforms). The refusal of major players like Apple and X to join creates fatal gaps, rendering the entire system ineffective and preventing a network effect.

A critical failure point for C2PA is that social media platforms themselves can inadvertently strip the crucial metadata during their standard image and video processing pipelines. This technical flaw breaks the chain of provenance before the content is even displayed to users.

Politician Alex Boris argues that expecting humans to spot increasingly sophisticated deepfakes is a losing battle. The real solution is a universal metadata standard (like C2PA) that cryptographically proves whether content is real or AI-generated, making unverified content inherently suspect, much like an unsecured HTTP website today.
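The "unverified content is suspect by default" idea can be illustrated with a minimal sketch. It is not the C2PA format: real C2PA manifests are signed with certificate-based signatures, while this example substitutes a shared-secret HMAC so it runs on the Python standard library alone. The names `SIGNING_KEY`, `make_manifest`, and `verify` are hypothetical.

```python
import hashlib
import hmac
import json
from typing import Optional

# Hypothetical demo key. Real C2PA signing uses certificates, not a shared secret.
SIGNING_KEY = b"demo-key-not-for-production"


def make_manifest(content: bytes, generator: str) -> dict:
    """Bind a claim about the content to its hash, then sign the claim."""
    claim = {
        "content_sha256": hashlib.sha256(content).hexdigest(),
        "generator": generator,  # e.g. "camera" or "ai-model"
    }
    payload = json.dumps(claim, sort_keys=True).encode()
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify(content: bytes, manifest: Optional[dict]) -> str:
    """Return 'verified', 'tampered', or 'unverified' (no manifest at all)."""
    if manifest is None:
        return "unverified"  # treated as suspect by default
    payload = json.dumps(manifest["claim"], sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return "tampered"  # the claim itself was altered
    if hashlib.sha256(content).hexdigest() != manifest["claim"]["content_sha256"]:
        return "tampered"  # the content no longer matches the signed hash
    return "verified"


photo = b"raw image bytes"
manifest = make_manifest(photo, "camera")
print(verify(photo, manifest))
print(verify(photo + b"edit", manifest))
print(verify(photo, None))
```

The three calls cover the cases a viewer would need to distinguish: content with an intact manifest, content changed after signing, and content that arrives with no manifest at all.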

When building data platforms for industries with legacy hardware like automotive, the real work is data normalization. Different product lines use inconsistent signal names and units (e.g., speed as MPH vs. radians/sec), requiring a complex 'decoder' layer to create usable, standardized data.
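A 'decoder' layer of this kind might look roughly like the sketch below, which maps each product line's raw signal name and unit onto one canonical signal. Every identifier here is hypothetical (the product lines, signal names, and `WHEEL_RADIUS_M` are invented for illustration), and converting an angular speed to a linear one assumes a known wheel radius.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

MPH_TO_MPS = 0.44704   # exact: 1 mph = 0.44704 m/s
WHEEL_RADIUS_M = 0.33  # assumed wheel radius, needed to turn rad/s into m/s


@dataclass(frozen=True)
class SignalRule:
    canonical_name: str
    to_canonical: Callable[[float], float]


# Keyed by (product line, raw signal name); all entries are made-up examples.
DECODER: Dict[Tuple[str, str], SignalRule] = {
    ("line_a", "VehSpd_MPH"): SignalRule(
        "vehicle_speed_mps", lambda v: v * MPH_TO_MPS
    ),
    ("line_b", "whl_spd_rad_s"): SignalRule(
        "vehicle_speed_mps", lambda v: v * WHEEL_RADIUS_M
    ),
}


def decode(product_line: str, raw_name: str, value: float) -> Tuple[str, float]:
    """Translate one raw reading into (canonical name, value in canonical units)."""
    rule = DECODER.get((product_line, raw_name))
    if rule is None:
        # Fail loudly so unmapped signals are noticed instead of silently dropped.
        raise KeyError(f"no decoder rule for {product_line}/{raw_name}")
    return rule.canonical_name, rule.to_canonical(value)


print(decode("line_a", "VehSpd_MPH", 60.0))
print(decode("line_b", "whl_spd_rad_s", 80.0))
```

Keeping the rules in a lookup table rather than in branching code means a new product line is added as data, which is usually where most of the normalization effort ends up.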

While Over-the-Air (OTA) updates seem to make a hardware product's software flexible, the initial OS version that enables those updates is unchangeable once flashed onto units at the factory. This creates an early, critical point of commitment for any features included in that first boot-up experience.

Instead of storing AI-generated descriptions in a separate database, Tim McLear's "Flip Flop" app embeds metadata directly into each image file's EXIF data. This makes each file a self-contained record where rich context travels with the image, accessible by any system or person, regardless of access to the original database.

Ring's founder argues that seemingly permanent hardware choices, like communication protocols, are not truly "one-way doors." By offloading intelligence to the cloud, even legacy hardware can be continuously upgraded with new features like AI, mitigating the risk of being stuck on an outdated standard.

C2PA was designed to track a file's provenance (creation, edits), not specifically to detect AI. This fundamental mismatch in purpose is why it's an ineffective solution for the current deepfake crisis, as it wasn't built to be a simple binary validator of reality.