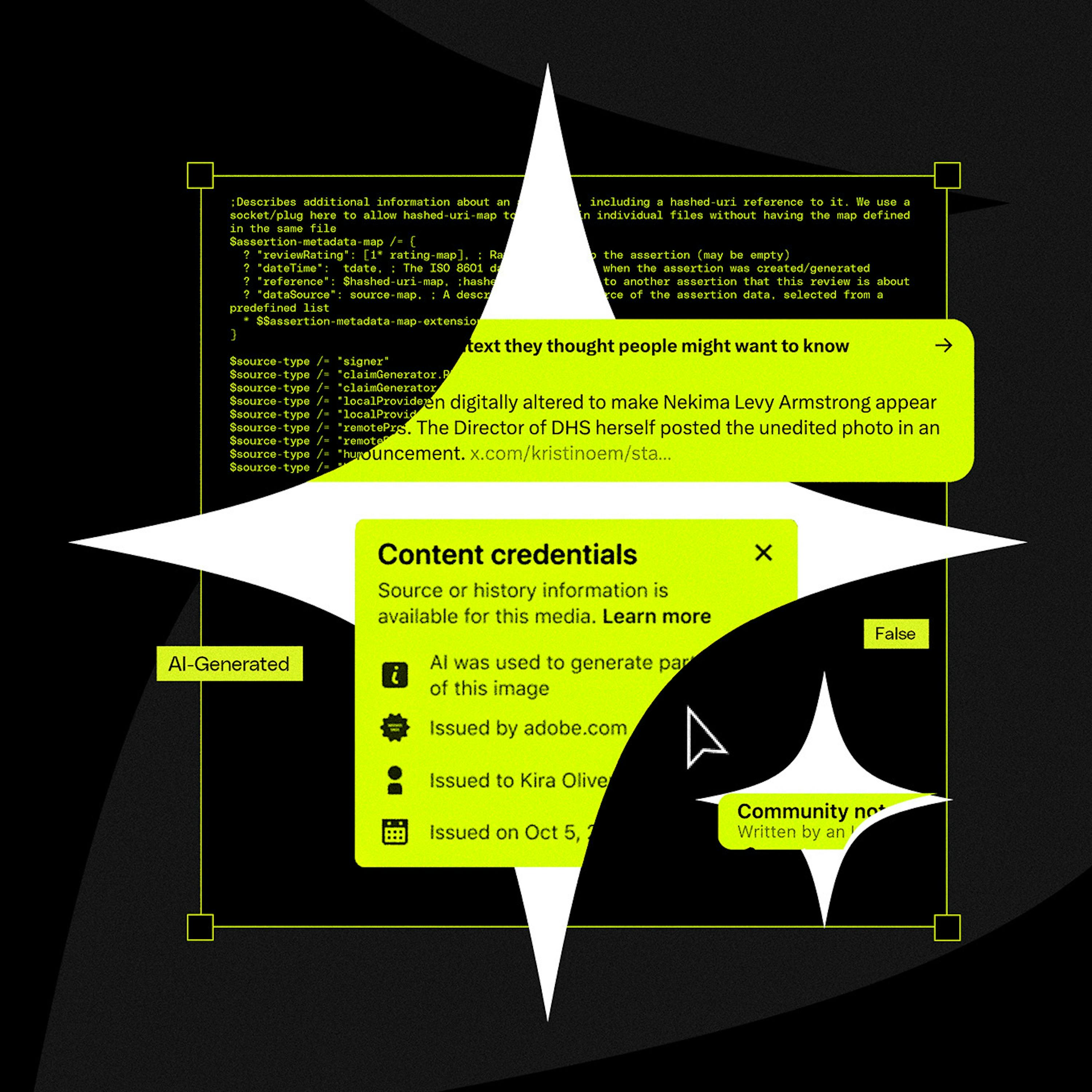

C2PA was designed to record a file's provenance (how it was created and edited), not to detect AI-generated content. That mismatch in purpose is why it falls short as a fix for the current deepfake crisis: it was never built to be a simple binary validator of what is real.

Related Insights

Building reliable AI detectors is an endless arms race against ever-improving generative models, some of which are literally trained to beat a detector: in a GAN, the generator learns by fooling a discriminator network. A better approach is to use algorithmic feeds to filter out low-quality "slop" based on user behavior, regardless of whether it was made by AI.

Adam Mosseri’s public statement that we can no longer assume photos or videos are real marks a pivotal shift. He suggests moving from a default of trust to a default of skepticism, effectively admitting platforms have lost the war on deepfakes and placing the burden of verification on users.

Major tech companies publicly tout their support for the C2PA standard to appear proactive about deepfakes. In practice this support is often superficial, serving as a "meritless badge" or PR move while they avoid the hard work of robust implementation and ecosystem-wide collaboration.

The C2PA standard's effectiveness depends on a complete ecosystem of participation, from capture (cameras) to distribution (platforms). The refusal of major players like Apple and X to join creates fatal gaps, rendering the entire system ineffective and preventing a network effect.

A critical failure point for C2PA is that social media platforms themselves can inadvertently strip the crucial metadata during their standard image and video processing pipelines. This technical flaw breaks the chain of provenance before the content is even displayed to users.
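
To make this failure concrete, here is a minimal sketch assuming a JPEG upload. C2PA stores its manifest in JPEG files inside APP11 marker segments, so a pipeline that rebuilds each file keeping only a basic header and the pixel data silently drops it. The function and helper names are hypothetical, and the APP11 payload is a placeholder rather than a real manifest. Real pipelines usually decode and re-encode the pixels as well, which would break the manifest's hash binding to the content even if the segment survived.

```python
import struct

SOI = b"\xff\xd8"  # start-of-image marker
EOI = b"\xff\xd9"  # end-of-image marker
SOS = 0xDA  # start-of-scan: entropy-coded pixel data follows


def strip_app_segments(jpeg: bytes, keep: frozenset = frozenset({0xE0})) -> bytes:
    """Copy a JPEG, dropping APPn segments (0xE0-0xEF) whose marker is not in `keep`."""
    if not jpeg.startswith(SOI):
        raise ValueError("not a JPEG: missing SOI marker")
    out = bytearray(SOI)
    i = 2
    while i < len(jpeg):
        if jpeg[i] != 0xFF:
            raise ValueError(f"expected a marker at offset {i}")
        marker = jpeg[i + 1]
        if marker == SOS:
            # Everything from SOS on is scan data plus EOI; keep it byte-for-byte.
            out += jpeg[i:]
            break
        # Segment length is big-endian and counts its own two bytes.
        (length,) = struct.unpack(">H", jpeg[i + 2 : i + 4])
        end = i + 2 + length
        if not (0xE0 <= marker <= 0xEF) or marker in keep:
            out += jpeg[i:end]
        i = end
    return bytes(out)


def segment(marker: int, payload: bytes) -> bytes:
    """Build one marker segment (hypothetical helper for the demo)."""
    return bytes([0xFF, marker]) + struct.pack(">H", len(payload) + 2) + payload


# A synthetic file: JFIF header, an APP11 segment standing in for a C2PA
# manifest (placeholder payload, not real JUMBF), then scan data.
upload = (
    SOI
    + segment(0xE0, b"JFIF\x00")
    + segment(0xEB, b"fake-c2pa-manifest")
    + segment(SOS, b"\x00")
    + b"\x12\x34"
    + EOI
)
served = strip_app_segments(upload)
print(b"c2pa" in upload, b"c2pa" in served)  # True False
```

The image still displays exactly as before, which is why this kind of loss goes unnoticed: nothing visible changes, only the provenance record disappears.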

Politician Alex Bores argues that expecting humans to spot increasingly sophisticated deepfakes is a losing battle. The real solution, in his view, is a universal metadata standard (like C2PA) that cryptographically proves whether content is real or AI-generated, making unverified content inherently suspect, much as a plain HTTP website is treated as insecure today.
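
A minimal sketch of the mechanism this argument relies on, assuming a toy manifest format: a signed claim is bound to a hash of the content, so any change to the bytes or to the claim fails verification, and content with no manifest lands in its own "unverified" bucket. The manifest layout and function names are hypothetical, and HMAC stands in for the certificate-based public-key signatures C2PA actually uses. A valid signature shows who made the claim and that the bytes are unchanged, not that the claim itself is true.

```python
import hashlib
import hmac
import json
from typing import Optional

# Hypothetical key. Real C2PA signs with certificate-backed public-key
# signatures; HMAC is a symmetric stand-in so the sketch runs on the stdlib.
SIGNING_KEY = b"demo-key-not-for-production"


def _sign(payload: dict) -> str:
    body = json.dumps(payload, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, body, hashlib.sha256).hexdigest()


def make_manifest(content: bytes, claim: dict) -> dict:
    """Bind a claim (capture device, AI-generated flag, ...) to the content's hash."""
    payload = {"content_sha256": hashlib.sha256(content).hexdigest(), "claim": claim}
    return {"payload": payload, "sig": _sign(payload)}


def check(content: bytes, manifest: Optional[dict]) -> str:
    if manifest is None:
        return "unverified"  # no proof either way, like a plain HTTP page
    if not hmac.compare_digest(_sign(manifest["payload"]), manifest["sig"]):
        return "bad signature"  # the claim was edited after signing
    if manifest["payload"]["content_sha256"] != hashlib.sha256(content).hexdigest():
        return "content altered"  # the bytes no longer match the signed hash
    return "verified"


photo = b"raw sensor bytes"
manifest = make_manifest(photo, {"device": "camera", "ai_generated": False})
print(check(photo, manifest))         # verified
print(check(photo + b"!", manifest))  # content altered
print(check(photo, None))             # unverified
```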

While camera brands like Sony and Nikon support C2PA on new models, the standard's adoption is crippled by the inability to update firmware on millions of existing professional cameras. This means the vast majority of photos taken will lack provenance data for years, undermining the entire system.

The rise of convincing AI-generated deepfakes will soon make video and audio evidence unreliable. The solution will be the blockchain, a decentralized, unalterable ledger. Content will be "minted" on-chain to provide a verifiable, timestamped record of authenticity that no single entity can control or manipulate.
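
The "minting" idea reduces to a hash chain: each record stores the content's hash, a timestamp, and the previous record's hash, so rewriting any earlier entry breaks the links that follow it. This is a minimal single-process sketch with hypothetical names. It omits the distributed consensus that keeps a real blockchain out of any one party's control, and, like any such record, it proves when a hash was registered, not that the content is authentic.

```python
import hashlib
import json
import time
from typing import Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(record: dict) -> str:
    """Hash every field except the record's own hash."""
    body = {k: v for k, v in record.items() if k != "hash"}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class Ledger:
    def __init__(self) -> None:
        self.blocks: list = []

    def mint(self, content: bytes, timestamp: Optional[float] = None) -> dict:
        record = {
            "content_sha256": hashlib.sha256(content).hexdigest(),
            "timestamp": time.time() if timestamp is None else timestamp,
            "prev": self.blocks[-1]["hash"] if self.blocks else GENESIS,
        }
        record["hash"] = _digest(record)
        self.blocks.append(record)
        return record

    def verify_chain(self) -> bool:
        """Recompute every hash and check each record points at its predecessor."""
        prev = GENESIS
        for block in self.blocks:
            if block["prev"] != prev or block["hash"] != _digest(block):
                return False
            prev = block["hash"]
        return True

    def was_minted(self, content: bytes) -> bool:
        digest = hashlib.sha256(content).hexdigest()
        return any(b["content_sha256"] == digest for b in self.blocks)


ledger = Ledger()
ledger.mint(b"press-conference.mp4 bytes", timestamp=1_700_000_000.0)
ledger.mint(b"follow-up interview bytes", timestamp=1_700_000_600.0)
print(ledger.verify_chain())                     # True
ledger.blocks[0]["timestamp"] = 1_600_000_000.0  # backdate the first record
print(ledger.verify_chain())                     # False
```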

Cryptographically signing media doesn't solve deepfakes because the vulnerability shifts to the user. Attackers use phishing tactics with nearly identical public keys or domains (a "Sybil problem") to trick human perception. The core issue is human error, not a lack of a technical solution.
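
The attack described here needs no cryptographic break. A minimal sketch, assuming a verification UI that shows only a shortened key fingerprint: the attacker grinds through throwaway keys until one matches the visible characters. A human comparing at a glance then sees a match, while a full comparison does not. The keys are placeholder byte strings rather than real key pairs, and the helper names are hypothetical. The display is cut to four hex characters so the search finishes quickly; each extra character multiplies the attacker's work by 16 without changing the shape of the attack.

```python
import hashlib


def fingerprint(public_key: bytes) -> str:
    """Full SHA-256 fingerprint of a (stand-in) public key."""
    return hashlib.sha256(public_key).hexdigest()


def short_form(fp: str, n: int = 2) -> str:
    """What a cramped UI might show: the first and last n hex characters."""
    return f"{fp[:n]}...{fp[-n:]}"


def grind_lookalike(target_key: bytes, n: int = 2) -> bytes:
    """Try candidate keys until one's short form matches the target's.

    Matching 2n hex characters takes about 16**(2n) tries on average.
    """
    want = short_form(fingerprint(target_key), n)
    counter = 0
    while True:
        candidate = b"attacker-key-%d" % counter
        if short_form(fingerprint(candidate), n) == want:
            return candidate
        counter += 1


legit = b"newsroom-signing-key"
fake = grind_lookalike(legit)
print(short_form(fingerprint(legit)) == short_form(fingerprint(fake)))  # True
print(fingerprint(legit) == fingerprint(fake))                          # False
```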

Attempts to label "AI content" fail because AI is integrated into countless basic editing tools, not just generative ones. It's impossible to draw a clear line for what constitutes an "AI edit," leading to creator frustration and rendering binary labels meaningless and confusing for users.