Laws intended to protect copyright, like the DMCA's anti-circumvention clause (Section 1201), are weaponized by platforms. They can make it a felony to create software that modifies an app's behavior (e.g., an ad-blocker), which shuts out competitors and removes user choice.

Related Insights

Businesses become critically dependent on platforms that supply even a modest share of their revenue (e.g., 20%). This 'monopsony power' creates stronger lock-in than user network effects do, because losing that customer base can bankrupt the business.

Platforms grew dominant by acquiring competitors, a direct result of failed antitrust enforcement. Cory Doctorow argues debates over intermediary liability (e.g., Section 230) are a distraction from the core issue: a decades-long drawdown of anti-monopoly law.

A company's monopoly power can be measured not just by its pricing power, but by the 'noneconomic costs' it imposes on society. Dominant platforms can ignore negative externalities, like their product's impact on teen mental health, because their market position insulates them from accountability and user churn.

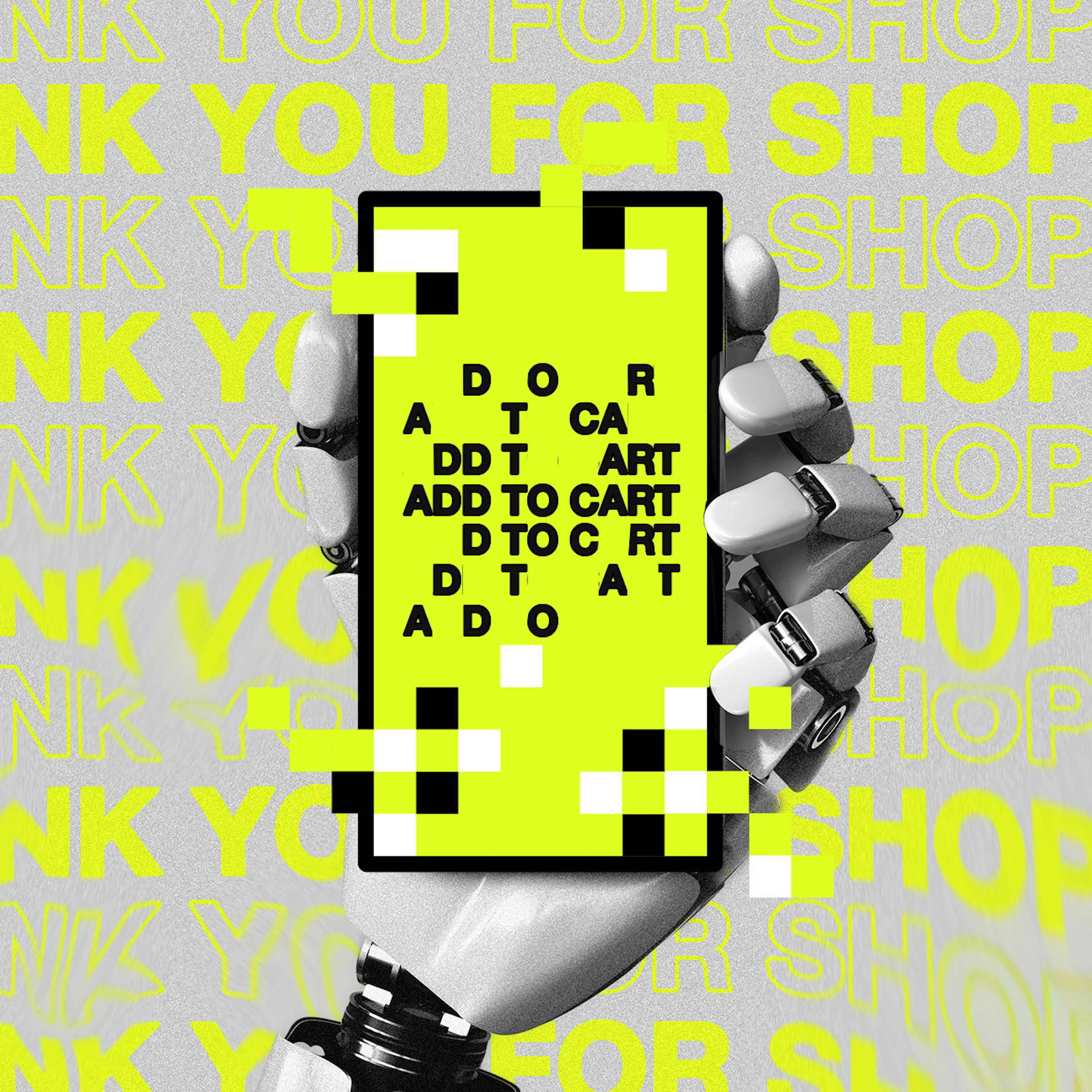

Platforms first attract users with good service, then lock them in. Next, they worsen the user experience to benefit business customers. Finally, they squeeze business customers, extracting all value for shareholders, leaving behind a dysfunctional service.

Many laws were written before technological shifts like the smartphone or AI. Companies like Uber and OpenAI found massive opportunities by operating in legal gray areas where old regulations no longer made sense and their service provided immense consumer value.

Recent security breaches (e.g., Gainsight/Drift on Salesforce) signal a shift. As AI agents access more data, incumbents can leverage security concerns to block third-party apps and promote their own integrated solutions, effectively using security as a competitive weapon.

Unlike service platforms like Uber that rely on real-world networks, Amazon's high-margin ad business is existentially threatened by AI agents that bypass sponsored listings. This vulnerability explains its uniquely aggressive legal stance against Perplexity, as it stands to lose a massive, growing revenue stream if users stop interacting directly with its site.

Laws like California's SB 243, which allows lawsuits over "emotional harm" from chatbots, create an impossible compliance maze for startups. This fragmented regulation, while well-intentioned, benefits incumbents who can afford massive legal teams, stifling innovation and competition from smaller players.

OpenAI's platform strategy, which centralizes app distribution through ChatGPT, mirrors Apple's iOS model. This creates a 'walled garden' that could follow Cory Doctorow's 'enshittification' pattern: first benefiting users, then locking them in, and finally exploiting them once they cannot easily leave the ecosystem.