An AI model trained on public legal documents performed well. However, when applied to real customer contracts, used with the customers' consent, its accuracy dropped by 15 percentage points. The drop shows how wide the performance gap can be between clean, public training data and complex, private enterprise data.

Related Insights

While public benchmarks show general model improvement, they are almost orthogonal to enterprise adoption. Enterprises don't care about general capabilities; they need near-perfect precision on highly specific, internal workflows. This requires extensive fine-tuning and validation, not chasing leaderboard scores.

There's a significant gap between AI performance in simulated benchmarks and in the real world. Despite scoring highly on evaluations, AIs in real deployments make "silly mistakes that no human would ever dream of doing," suggesting that current benchmarks don't capture the messiness and unpredictability of reality.

Public internet data has been largely exhausted for training AI models. The real competitive advantage and source for next-generation, specialized AI will be the vast, untapped reservoirs of proprietary data locked inside corporations, like R&D data from pharmaceutical or semiconductor companies.

A key competitive advantage for AI companies lies in capturing proprietary outcomes data by owning a customer's end-to-end workflow. This data, such as which legal cases are won or lost, is not publicly available. It creates a powerful feedback loop where the AI gets smarter at predicting valuable outcomes, a moat that general models cannot replicate.

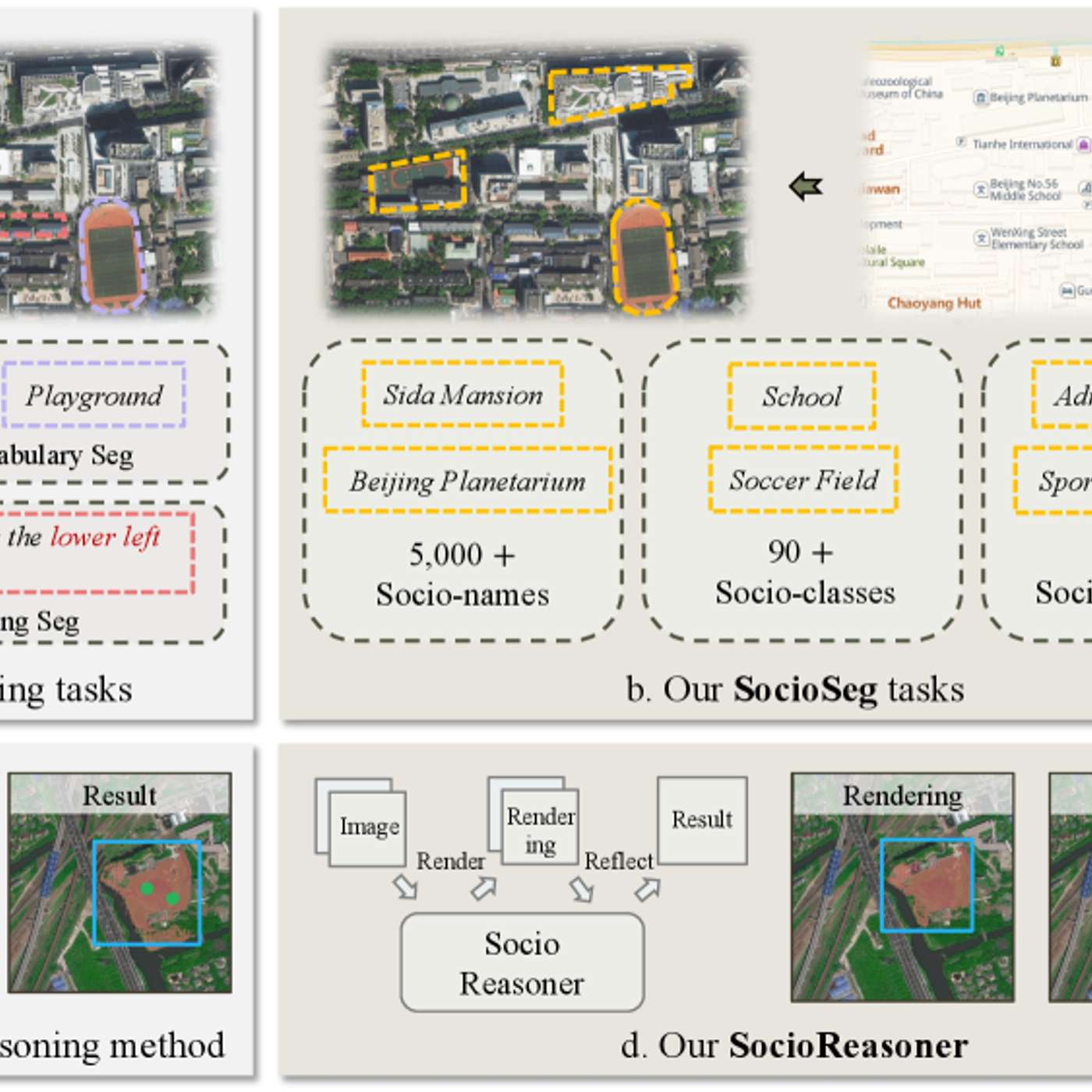

The most significant gap in AI research is its focus on academic evaluations instead of tasks customers value, like medical diagnosis or legal drafting. The solution is using real-world experts to define benchmarks that measure performance on economically relevant work.

The researchers' failure case analysis is highlighted as a key contribution. Understanding why the model fails, whether due to ambiguous data or unusual inputs, gives practitioners a realistic scope of application and a clear roadmap for improvement, which is more useful to them than high scores alone.

Off-the-shelf AI models can only go so far. The true bottleneck for enterprise adoption is "digitizing judgment": capturing the unique, context-specific expertise of a company's own employees. A document's meaning can change entirely from one company to another, so the labeling has to be done internally.

Despite strong benchmark scores, top Chinese AI models (from ZAI, Kimi, DeepSeek) are "nowhere close" to US models like Claude or Gemini on complex, real-world vision tasks, such as accurately reading a messy scanned document. This suggests benchmarks don't capture a significant real-world performance gap.

Standardized AI benchmarks are saturated and becoming less relevant for real-world use cases. The true measure of a model's improvement is now found in custom, internal evaluations (evals) created by application-layer companies. For example, measured progress on a legal AI tool's own evals is a more meaningful indicator than a generic test score.

The CEO contrasts general-purpose AI with their "courtroom-grade" solution, built on a proprietary, authoritative data set of 160 billion documents. This ensures outputs are grounded in actual case law and verifiable, addressing the core weaknesses of consumer models for professional use.