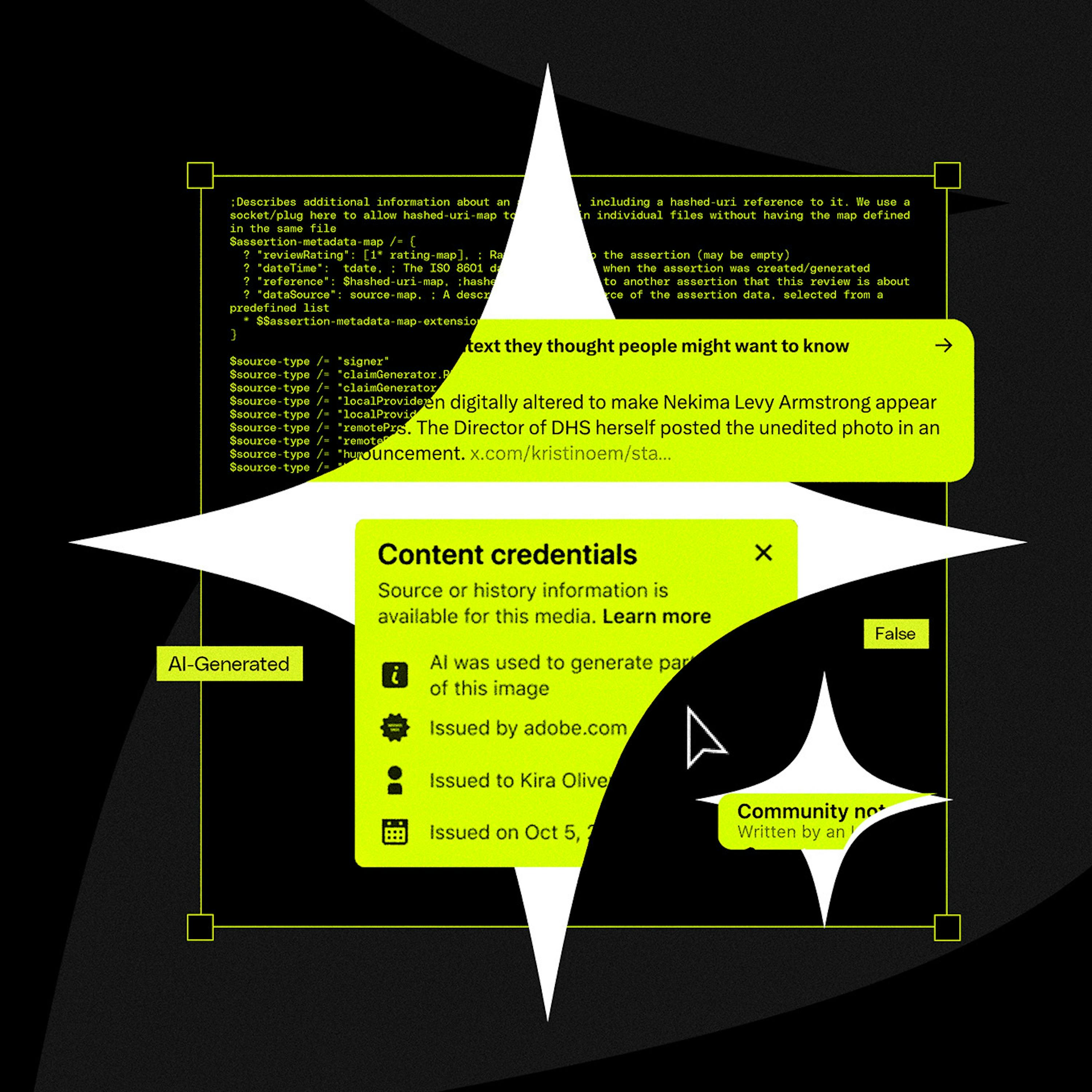

Attempts to label "AI content" fail because AI is built into countless basic editing tools, not just generative ones; features like noise reduction, background removal, or auto-enhance may all rely on machine-learning models. It is impossible to draw a clear line for what counts as an "AI edit," which frustrates creators and makes binary labels meaningless and confusing for users.

Related Insights

Creating reliable AI detectors is an endless arms race against ever-improving generative models. Some architectures, like GANs, even train against a built-in detector (the discriminator), so the generator learns to evade detection by design. A better approach is to use algorithmic feeds that filter out low-quality "slop" based on user behavior, regardless of whether a human or a machine made it.
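A minimal sketch of the behavior-based filtering idea, in Python. Everything here is a hypothetical illustration, not any platform's real ranking system: the `Post` fields, the `quality_score` weights, and the `threshold` and `min_impressions` cutoffs are assumptions chosen for readability. What it shows is that the filter scores posts only on how users respond to them and never asks whether a post was AI-generated.

```python
from dataclasses import dataclass


@dataclass
class Post:
    # Hypothetical per-post engagement counters; note there is no "is_ai" field.
    post_id: str
    impressions: int   # times the post was shown in a feed
    completions: int   # views that watched or read to the end
    quick_skips: int   # users who scrolled past almost immediately
    reports: int       # users who flagged it as spam or low quality


def quality_score(post: Post) -> float:
    """Illustrative score built only from user behavior (weights are assumptions)."""
    if post.impressions == 0:
        return 0.0
    completion_rate = post.completions / post.impressions
    skip_rate = post.quick_skips / post.impressions
    report_rate = post.reports / post.impressions
    # Reports are rare but strong negative signals, so they get a large weight.
    return completion_rate - 0.5 * skip_rate - 5.0 * report_rate


def filter_feed(posts: list[Post], threshold: float = 0.1,
                min_impressions: int = 100) -> list[Post]:
    """Keep posts that score at or above the threshold, preserving feed order."""
    kept = []
    for post in posts:
        if post.impressions < min_impressions:
            kept.append(post)  # too new to judge; let it collect signal first
        elif quality_score(post) >= threshold:
            kept.append(post)
    return kept


posts = [
    Post("engaging", impressions=1000, completions=600, quick_skips=200, reports=0),
    Post("slop", impressions=1000, completions=50, quick_skips=900, reports=20),
    Post("brand-new", impressions=10, completions=1, quick_skips=9, reports=0),
]
print([p.post_id for p in filter_feed(posts)])  # ['engaging', 'brand-new']
```

Posts with too few impressions pass through so they can gather signal before being judged; a real system would learn its weights and thresholds from data rather than hard-coding them.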

The legal question of AI authorship has a historical parallel. Early photographs were deemed copyrightable because of the photographer's judgment in composition, posing, and lighting (as the U.S. Supreme Court held in Burrow-Giles Lithographic Co. v. Sarony, 1884). By the same logic, works made with AI can be copyrighted when a human provides detailed prompts, makes revisions, and exercises significant creative judgment. The AI is the tool, not the author.

The definition of "AI slop" is evolving from obviously fake images to a flood of perfectly polished, generic, and boring content. As AI makes flattering imagery cheap to produce, authentic, unpolished, and even unflattering content becomes more valuable for creators trying to stand out on platforms like Instagram.

While AI tools excel at generating initial drafts of code or designs, they are poor at editing them. Making a specific, targeted change (for example, adjusting one element without the tool altering everything else) is often so difficult that creators discard the AI output and start over; editing is where the "magic" breaks down.

As AI makes creating complex visuals trivial, audiences will become skeptical of content like surrealist photos or polished B-roll. They will increasingly assume it is AI-generated rather than the result of human skill, leading to lower trust and engagement.

Don't accept the false choice between AI generation and professional editing tools. The best workflows integrate both, allowing for high-level generation and fine-grained manual adjustments without giving up critical creative control.

The "AI-generated" label carries negative connotations: cheap, effortless, and lacking human creativity. This perception devalues the final product in the eyes of consumers and creators, which gives platforms little incentive to implement labels that would anger their user base and advertisers.

Because AI can generate content in seconds, that content is perceived as low-effort. This undercuts the "labor illusion," the tendency for visible effort to signal quality. In one study, labeling a poster "AI-powered" instead of "hand-drawn" reduced purchase intent by 61%. Brands using AI should therefore shift the narrative to the effort that went into building the system.

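C2PA (the Coalition for Content Provenance and Authenticity's standard) was designed to record a file's provenance, meaning how it was created and edited, not specifically to detect AI. This mismatch in purpose is why it is an ineffective answer to the current deepfake crisis: it was never built to be a simple binary validator of reality, and a file that carries no provenance data proves nothing either way.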
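To illustrate the mismatch, here is a simplified Python model of a provenance record as an ordered list of creation and editing steps. It is a hypothetical stand-in, not the actual C2PA data model: real C2PA manifests are cryptographically signed and bound to the file's contents, and the `Action` fields, the tool names, and the two labeling policies below are assumptions for illustration. The sketch shows that a provenance history answers "what happened to this file?", while a yes/no "is it AI?" verdict depends on a policy the record does not supply, and a missing record yields no verdict at all.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Action:
    tool: str      # hypothetical name of the device or app that performed the step
    action: str    # e.g. "captured", "denoised", "cropped", "generated"
    uses_ml: bool  # whether this step relied on a machine-learning model


@dataclass
class ProvenanceRecord:
    # Simplified stand-in for a provenance manifest: NOT the real C2PA schema,
    # and with no signatures or content hashes.
    actions: list[Action] = field(default_factory=list)


def describe(record: Optional[ProvenanceRecord]) -> str:
    """What provenance is good at: narrating how the file was made."""
    if record is None:
        # Metadata stripped or never attached: no information either way.
        return "unknown origin"
    return " -> ".join(f"{a.action} ({a.tool})" for a in record.actions)


def is_ai(record: Optional[ProvenanceRecord], policy: str) -> Optional[bool]:
    """A binary verdict needs a policy that the record itself does not contain."""
    if record is None:
        return None  # absence of provenance proves nothing either way
    if policy == "any_ml_step":
        return any(a.uses_ml for a in record.actions)
    if policy == "generated_only":
        return any(a.action == "generated" for a in record.actions)
    raise ValueError(f"unknown policy: {policy}")


photo = ProvenanceRecord([
    Action("PhoneCamera", "captured", uses_ml=False),
    Action("PhotoEditor", "denoised", uses_ml=True),
    Action("PhotoEditor", "cropped", uses_ml=False),
])
print(describe(photo))
# captured (PhoneCamera) -> denoised (PhotoEditor) -> cropped (PhotoEditor)
print(is_ai(photo, "any_ml_step"), is_ai(photo, "generated_only"), is_ai(None, "any_ml_step"))
# True False None
```

The same edit history counts as "AI" under one policy and "not AI" under the other, which is exactly the ambiguity a binary label has to paper over.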

The current debate focuses on labeling AI-generated content. However, as AI content floods the internet and becomes the default, the more efficient system will be to label the smaller, scarcer category: authentic, human-created content.