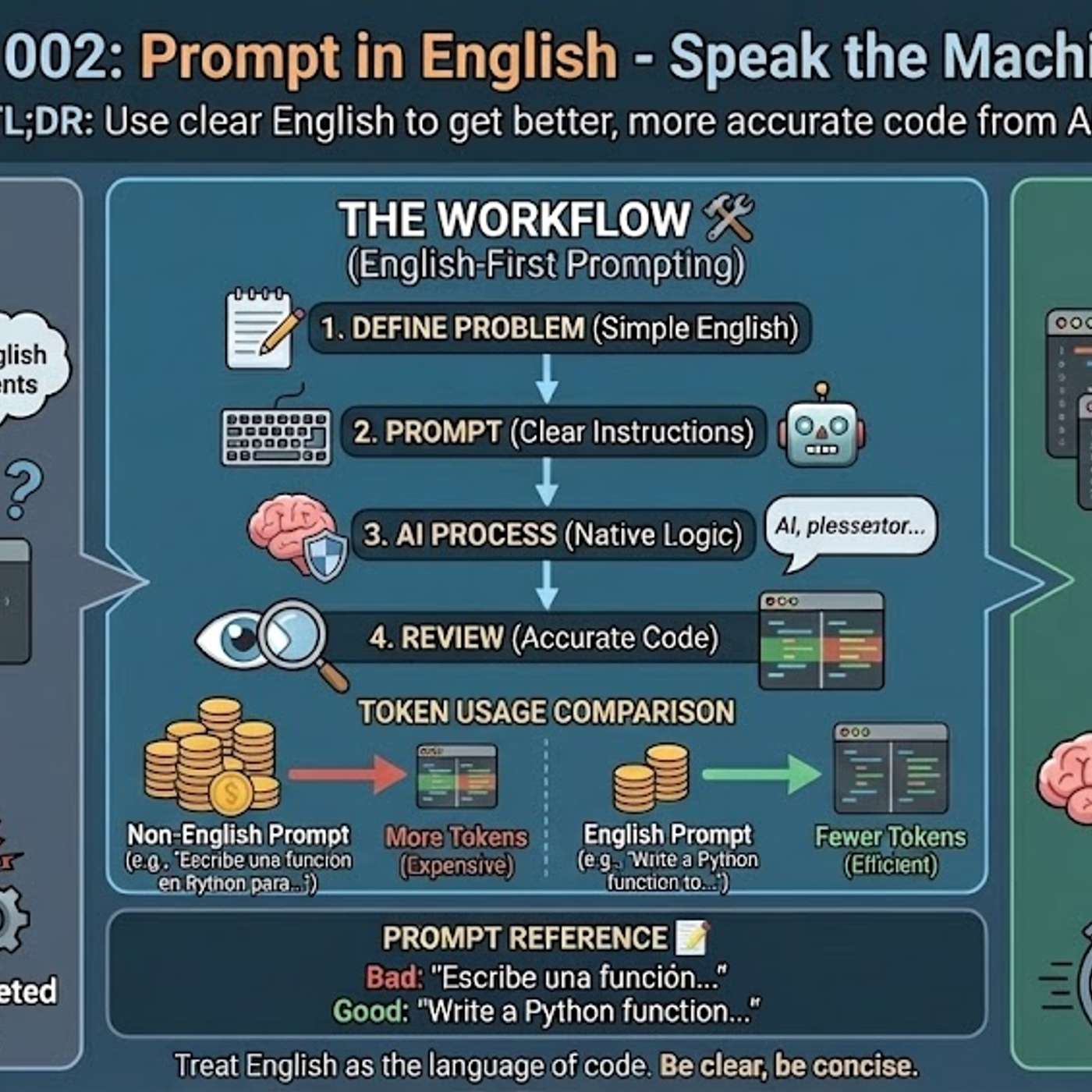

Using languages other than English for technical prompts is inefficient because it forces the AI to perform an intermediate translation. This translation step consumes valuable tokens from the context window, leaving less capacity for detailed instructions and increasing the risk of misinterpretation, which results in weaker solutions.

Related Insights

People struggle with AI prompts because the model lacks background on their goals and progress. The solution is "context engineering": creating an environment where the AI continuously accumulates user-specific information, materials, and intent, reducing the need for constant prompt tweaking.

Before delegating a complex task, use a simple prompt to have a context-aware system generate a more detailed and effective prompt. This "prompt-for-a-prompt" workflow adds necessary detail and structure, significantly improving the agent's success rate and saving rework.
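
As a rough illustration, the workflow can be pictured as two model calls: the first turns a short request into a detailed prompt, and the second hands that prompt to the agent. This is a minimal sketch, not any tool's actual API. `build_meta_prompt`, `delegate`, and the `ask_model` callable are hypothetical names introduced here, and the wording of the meta-prompt is only an assumption about what "detail and structure" might include.

```python
from typing import Callable, List


def build_meta_prompt(task: str, context_notes: List[str]) -> str:
    """Wrap a short task description in a request for a detailed prompt."""
    notes = "\n".join(f"- {note}" for note in context_notes)
    return (
        "Write a detailed, structured prompt for a coding agent.\n"
        f"Task: {task}\n"
        "Known context:\n"
        f"{notes}\n"
        "Include goals, constraints, and acceptance criteria."
    )


def delegate(task: str, context_notes: List[str],
             ask_model: Callable[[str], str]) -> str:
    """Two-step delegation; ask_model is a hypothetical stand-in for a model call."""
    # Step 1: a simple prompt asks the model to write the detailed prompt.
    detailed_prompt = ask_model(build_meta_prompt(task, context_notes))
    # Step 2: the generated prompt, not the original one-liner, goes to the agent.
    return ask_model(detailed_prompt)
```

In practice `ask_model` would wrap whichever client a given tool provides, and the two steps could use different models.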

Many AI tools expose the model's reasoning before it generates an answer. Reading this internal monologue is a powerful debugging technique. It reveals how the AI is interpreting your instructions, allowing you to quickly identify misunderstandings and improve the clarity of your prompts for better results.

Even models with million-token context windows suffer from "context rot" when overloaded with information. Performance degrades as the model struggles to find the signal in the noise. Effective context engineering requires precision, packing the window with only the exact data needed.
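
To make "only the exact data needed" concrete, here is a minimal sketch of packing a context under a budget. It rests on two loud simplifications that are assumptions of this example, not claims from the source: relevance is approximated by word overlap with the query, and size is counted in whitespace-separated words rather than real tokens. `pack_context` is a hypothetical helper name.

```python
from typing import List


def pack_context(query: str, snippets: List[str], budget_words: int) -> List[str]:
    """Keep the snippets most related to the query, within a word budget."""
    query_words = set(query.lower().split())

    def overlap(snippet: str) -> int:
        # Assumed relevance score: number of distinct words shared with the query.
        return len(query_words & set(snippet.lower().split()))

    selected = []
    used = 0
    for snippet in sorted(snippets, key=overlap, reverse=True):
        if overlap(snippet) == 0:
            break  # everything after this point shares nothing with the query
        cost = len(snippet.split())  # word count as a crude stand-in for tokens
        if used + cost <= budget_words:
            selected.append(snippet)
            used += cost
    return selected
```

A real system would likely swap in a proper tokenizer and a stronger relevance measure; the point of the sketch is only that unrelated material is dropped even when there is room for it.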

Long, continuous AI chat threads degrade output quality as the context window fills up, making it harder for the model to recall early details. To maintain high-quality results, treat each discrete feature or task as a new chat, ensuring the agent has a clean, focused context for each job.
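
One way to apply this when scripting against a chat model is to keep a separate message history per task rather than one growing thread. The sketch below is illustrative only: `TaskChats` is a hypothetical class, and the `role`/`content` message shape is an assumption modeled on a common chat convention, not on a specific tool.

```python
from typing import Dict, List

Message = Dict[str, str]


class TaskChats:
    """Keep one independent message history per task."""

    def __init__(self, system_prompt: str) -> None:
        self.system_prompt = system_prompt
        self.histories: Dict[str, List[Message]] = {}

    def start(self, task_name: str) -> List[Message]:
        """Begin (or reset) a task with a clean history."""
        history = [{"role": "system", "content": self.system_prompt}]
        self.histories[task_name] = history
        return history

    def add_user_message(self, task_name: str, text: str) -> List[Message]:
        """Append to the task's own history, creating it on first use."""
        if task_name not in self.histories:
            self.start(task_name)
        history = self.histories[task_name]
        history.append({"role": "user", "content": text})
        return history
```

Each history returned here is what would be sent to the model for that task, so messages from one feature never occupy the context of another.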

Poland's AI lab discovered that safety and security measures implemented in models primarily trained and secured for English are much easier to circumvent using Polish prompts. This highlights a critical vulnerability in global AI models and necessitates local, language-specific safety training and red-teaming to create robust safeguards.

Technical terms like "callback" often lack a precise one-to-one translation in other languages. When a non-English prompt is used, the AI may misinterpret these crucial terms, leading it to misunderstand the user's intent, waste context tokens trying to disambiguate the instruction, and ultimately generate incorrect or suboptimal code.

The binary distinction between "reasoning" and "non-reasoning" models is becoming obsolete. The more critical metric is now "token efficiency": a model's ability to use more tokens only when a task's difficulty requires it. This dynamic token usage is a key differentiator for cost and performance.

The primary reason AI models generate better code from English prompts is the composition of their training data. Over 90% of the data in AI training sets, along with most technical libraries and documentation, is in English. This means the models' core reasoning pathways for code-related tasks are fundamentally optimized for English.

Poland's AI lead observes that frontier models like Anthropic's Claude are degrading in their Polish language and cultural abilities. As developers focus on lucrative use cases like coding, they trade off performance in less common languages, creating a major reliability risk for businesses in non-Anglophone regions that depend on these APIs.