The current chaos of online misinformation isn't just a tech outcome; it was legally enabled. Section 230, passed as part of the 1996 Telecommunications Act, shielded both users and platforms from liability for third-party content, effectively removing the libel exposure that governed traditional media and creating a legal free-for-all.

Related Insights

The problem with social media isn't free speech itself, but algorithms that elevate misinformation for engagement. A targeted solution is to remove Section 230 liability protection *only* for content that platforms algorithmically boost, holding them accountable for their editorial choices without engaging in broad censorship.

A US diplomat argues that laws like the EU's DSA and the UK's Online Safety Act create a chilling effect. By imposing vague obligations backed by massive fines, they push risk-averse corporations to censor content excessively, leading to ridiculous outcomes like parliamentary speeches being blocked.

Platforms grew dominant by acquiring competitors, a direct result of failed antitrust enforcement. Cory Doctorow argues that debates over intermediary liability (e.g., Section 230) are a distraction from the core issue: a decades-long drawdown of anti-monopoly law.

A coming battle will focus on 'malinformation'—facts that are true but inconvenient to established power structures. Expect coordinated international efforts to pressure social media platforms into censoring this content at key chokepoints.

Andreessen pinpoints a post-2015 'gravity inversion' where journalists, once defenders of free speech, began aggressively demanding more content censorship from tech platforms like Facebook. This marked a fundamental, hostile shift in the media landscape.

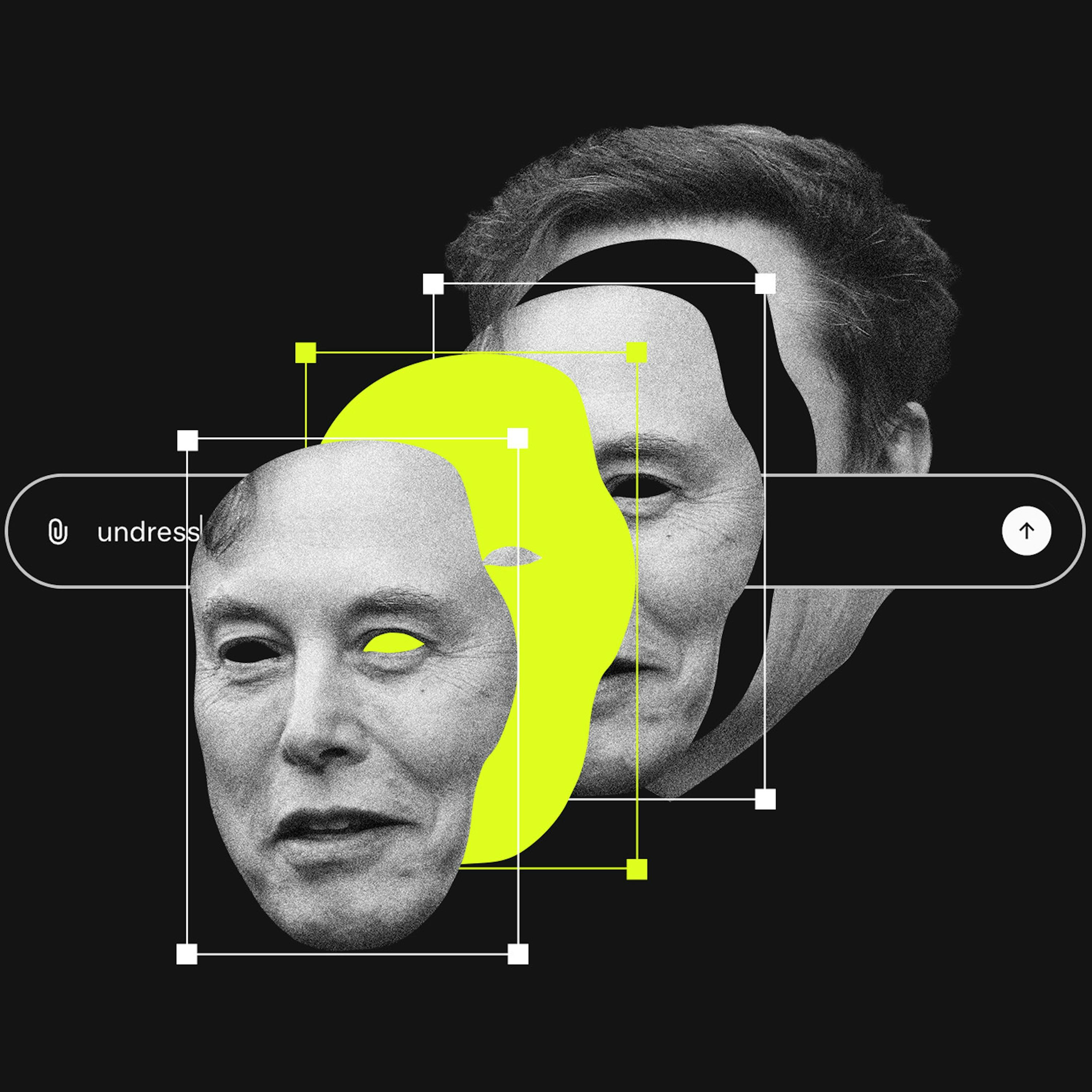

Section 230 protects platforms from liability for third-party user content. Since generative AI tools create the content themselves, platforms like X could be held directly responsible. This is a critical, unsettled legal question that could dismantle a key legal shield for AI companies.

AI companies argue their models' outputs are original creations to defend against copyright claims. This stance becomes a liability when the AI generates harmful material, as it positions the platform as a co-creator, undermining the Section 230 "neutral platform" defense used by traditional social media.

A 1994 law discouraging shareholder lawsuits created a sense of diminished risk for executives and accountants. This regulatory shift fostered a permissive climate where misleading financial reports and accounting fraud could flourish with fewer perceived legal consequences, directly contributing to the bubble.

The landmark trial against Meta and YouTube is framed as the start of a 20-to-30-year societal correction against social media's negative effects. This mirrors historical battles against Big Tobacco and pharmaceutical companies, suggesting that a long and costly legal fight for big tech is just beginning.

The era of limited information sources allowed for a controlled, shared narrative. The current media landscape, with its volume and velocity of information, fractures consensus and erodes trust, making it nearly impossible for society to move forward in lockstep.