Claims by AI companies that their technology won't be used for direct harm are unenforceable in military contracts. Militaries and nation-states do not follow commercial terms of service; the procurement process gives the government complete control over how the technology is ultimately deployed.

Related Insights

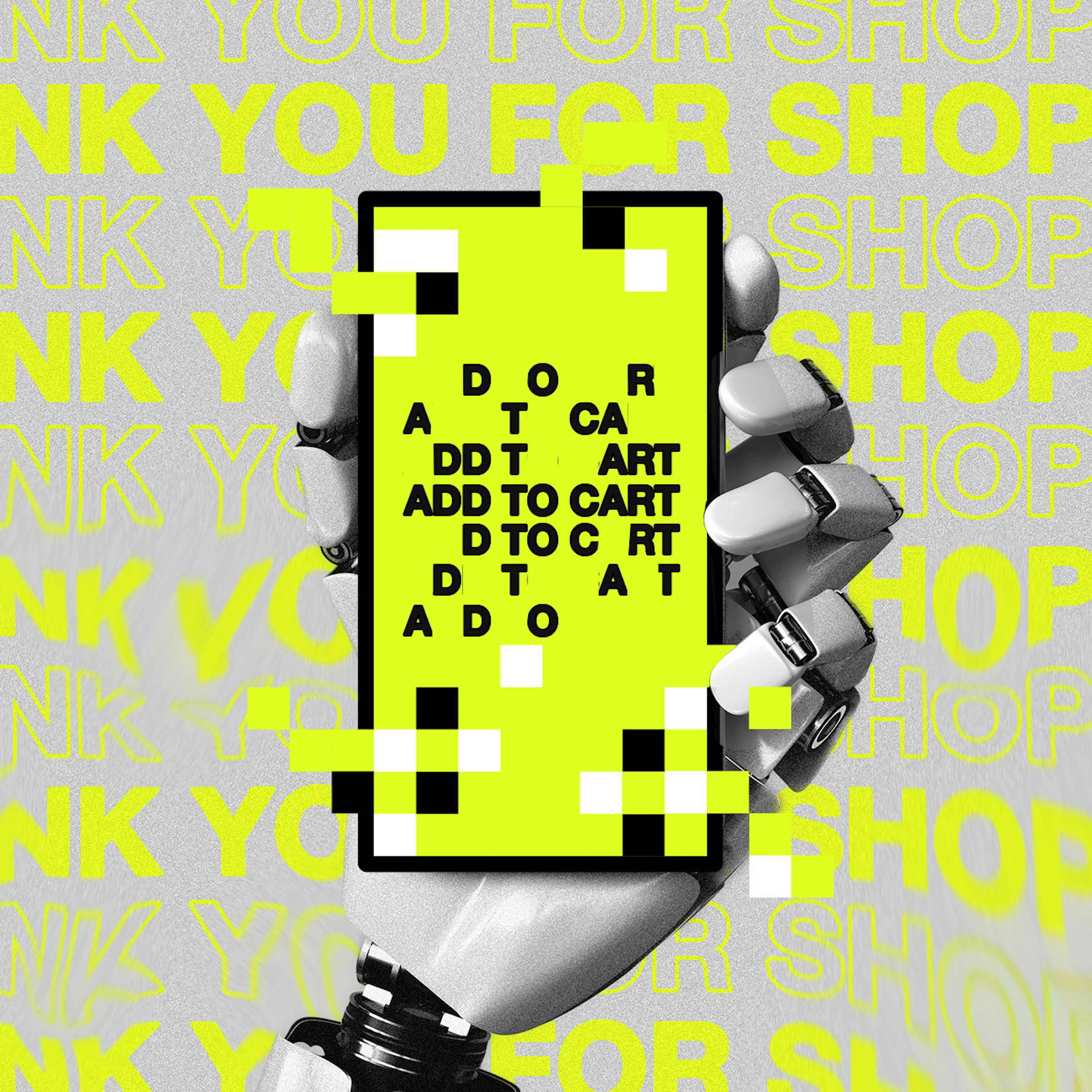

Perplexity's legal defense against Amazon's lawsuit reframes its AI agent not as a scraper bot, but as a direct extension of the user. By arguing "software is becoming labor," it claims the agent inherits the user's permissions to access websites. This novel legal argument fundamentally challenges the enforceability of current terms of service in the age of AI.

Leading AI companies, facing high operational costs and a lack of profitability, are turning to lucrative government and military contracts. These contracts provide a stable revenue stream and de-risk the companies' portfolios through government subsidies, despite their previous ethical stances against military use.

The government's procurement process often defaults to bidding out projects to established players like Lockheed Martin, even if a startup presents a breakthrough. Success requires navigating this bureaucratic reality, not just superior engineering.

The AI systems used for mass censorship were not created for social media. They began as military and intelligence projects (DARPA, CIA, NSA) to track terrorists and foreign threats, and were redirected toward domestic political narratives after the 2016 election.

Tech companies often use government and military contracts as a proving ground to refine complex technologies. This gives military personnel early access to tools, as happened with Palantir a decade ago, long before they become mainstream in the corporate world.

AI companies engage in "safety revisionism," shifting the definition from preventing tangible harm to abstract concepts like "alignment" or future "existential risks." This tactic allows their inherently inaccurate models to bypass the traditional, rigorous safety standards required for defense and other critical systems.

Public fear focuses on AI hypothetically creating new nuclear weapons. The more immediate danger is militaries trusting highly inaccurate AI systems for critical command and control decisions over existing nuclear arsenals, where even a small error rate could be catastrophic.

Anthropic faces a critical dilemma. Its reputation for safety attracts lucrative enterprise clients, but this very stance risks being labeled "woke" by the Trump administration, which has banned such AI in government contracts. This forces the company to walk a fine line between its brand identity and political reality.

Even when air-gapped, commercial foundation models are fundamentally compromised for military use. Their training on public web data makes them vulnerable to "data poisoning," where adversaries can embed hidden "sleeper agents" that trigger harmful behavior on command, creating a massive security risk.

Contrary to popular belief, military procurement involves some of the most rigorous safety and reliability testing. Current generative AI models, with their inherently high error rates, fall far short of the thresholds long required for defense systems.