Most people believe dopamine spikes with rewards. In reality, it continuously tracks the gap between what you expected a moment ago and what you expect now (plus any reward actually received), even before any final outcome arrives. This "temporal difference error" is the brain's core learning mechanism. It mirrors algorithms used in advanced AI and constantly updates your behavior as you move through the world.
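A toy simulation makes the "prediction error, not reward" point concrete. The sketch below applies the textbook TD(0) rule to a hypothetical cue, delay, reward trial. Everything in it is an assumption chosen for illustration, not data: four states per trial, a reward of 1.0 on the last transition, a learning rate of 0.1, and no discounting. Before learning, the only error occurs when the reward arrives. After learning, the reward produces almost no error, and the error appears instead when the cue shows up, before any outcome.

```python
# Toy TD(0) simulation of a cue -> delay -> reward trial.
# Hypothetical setup (not from the source): 4 states per trial, reward 1.0
# on the final transition, learning rate 0.1, no discounting.

ALPHA = 0.1  # learning rate (assumed)
GAMMA = 1.0  # discount factor (assumed)


def td_error(reward, v_current, v_next, gamma=GAMMA):
    """Temporal-difference error: delta = r + gamma * V(next) - V(current)."""
    return reward + gamma * v_next - v_current


def run_trial(values, reward=1.0, alpha=ALPHA):
    """Step through states 0..n-1 once, updating each value in place.

    Returns the TD error produced at each step. Reward arrives only on the
    last transition; the state after it is terminal with value 0.
    """
    errors = []
    n = len(values)
    for s in range(n):
        last = s == n - 1
        r = reward if last else 0.0
        v_next = 0.0 if last else values[s + 1]
        delta = td_error(r, values[s], v_next)
        values[s] += alpha * delta  # move the expectation toward its target
        errors.append(delta)
    return errors


def cue_error(values):
    """Error when the cue appears unexpectedly. The pre-cue baseline is
    assumed to have value 0, so the error equals the cue state's value."""
    return td_error(0.0, 0.0, values[0])


values = [0.0] * 4
naive_errors = run_trial(values)   # first trial, nothing learned yet
for _ in range(300):
    run_trial(values)              # repeated experience
trained_errors = run_trial(values)

print(naive_errors)                            # [0.0, 0.0, 0.0, 1.0]
print([round(e, 3) for e in trained_errors])   # all errors close to zero
print(round(cue_error(values), 3))             # error has moved to the cue
```

Treating the cue's arrival as a transition from a zero-value baseline is a simplifying assumption; it reproduces, in miniature, the classic observation that dopamine responses shift from a reward to the cue that predicts it.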

Related Insights

The sense that we first perceive and then react is an illusion. The brain constantly predicts the next moment from past experience, preparing actions before sensory information has fully arrived. This predictive process is far more efficient than reacting to the world from scratch each time; in effect, we act first, then sense.

Normally, dopamine signals reward prediction errors. In extreme survival states such as starvation, however, its function inverts and it signals punishment prediction errors instead. This strongly reinforces learning to detect and avoid threats rather than to seek rewards, ensuring that survival takes precedence over every other goal.

Dopamine is often misunderstood as a 'pleasure molecule.' Its more crucial role is in motivation: the drive to seek a reward. In experiments, rats depleted of dopamine still react to the taste of food as if they enjoy it, yet won't move to get it and can starve to death. This seeking behavior is often driven by the brain's craving to escape a dopamine-deficit state.

Reward isn't just about indulgence. The dopamine system can learn to value self-control and resisting temptation. In anorexia this takes a pathological form, but the same mechanism underlies healthy discipline. For athletes, the act of choosing training over socializing can itself become a dopaminergic reward, reinforcing difficult choices.

In humans, learning a new skill is a highly conscious process that becomes unconscious once mastered. This suggests a link between learning and consciousness. The error signals and reward functions in machine learning could be computational analogues to the valenced experiences (pain/pleasure) that drive biological learning.

After around age 25, the brain largely stops changing in response to passive experience. To learn new skills or unlearn old patterns, one must be highly alert and focused. That state triggers the release of neuromodulators such as dopamine and epinephrine, which signal the brain to physically reconfigure its connections during subsequent rest.

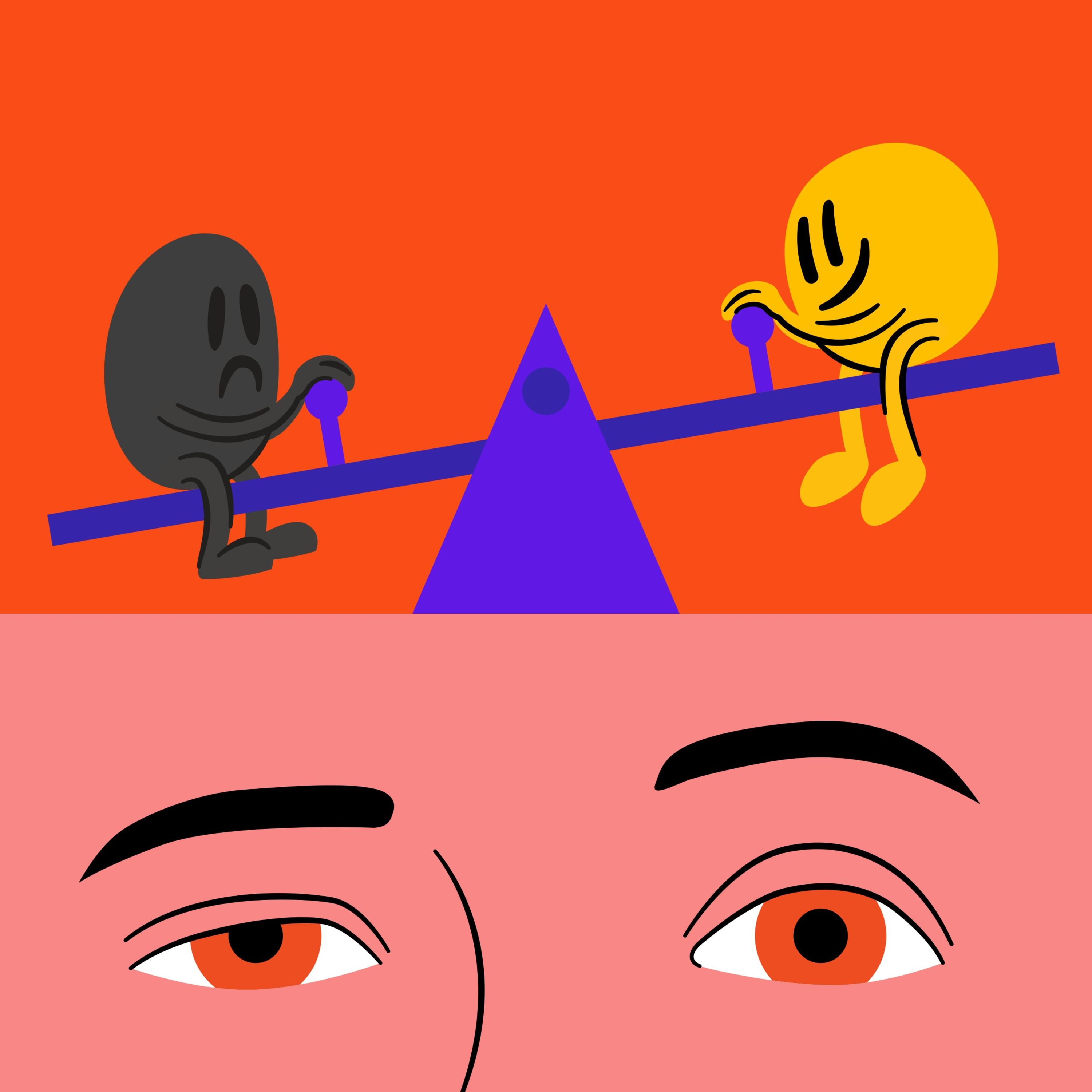

Human brain recordings reveal a seesaw relationship between dopamine and serotonin. Dopamine levels rise with positive events or anticipation, while serotonin falls. Conversely, serotonin—the signal for negative outcomes or "active waiting"—rises in response to adversity, while dopamine falls. This opponent dynamic is crucial for learning and motivation.
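One way to picture an opponent code is to let a single prediction error push the two transmitters in opposite directions around a shared baseline. The minimal sketch below does exactly that; the class, function names, baseline, and gain are hypothetical, and this is not a model fitted to the human recordings.

```python
from dataclasses import dataclass


@dataclass
class OpponentSignal:
    dopamine: float
    serotonin: float


def encode(delta: float, baseline: float = 1.0, gain: float = 0.5) -> OpponentSignal:
    """Hypothetical opponent encoding: a positive prediction error raises
    dopamine and lowers serotonin by the same amount; a negative one does
    the reverse."""
    return OpponentSignal(dopamine=baseline + gain * delta,
                          serotonin=baseline - gain * delta)


def decode(signal: OpponentSignal, gain: float = 0.5) -> float:
    """Read the prediction error back out from the gap between the channels."""
    return (signal.dopamine - signal.serotonin) / (2 * gain)


good = encode(1.0)    # better than expected
bad = encode(-1.0)    # worse than expected
print(good)           # OpponentSignal(dopamine=1.5, serotonin=0.5)
print(bad)            # OpponentSignal(dopamine=0.5, serotonin=1.5)
print(decode(good), decode(bad))  # 1.0 -1.0
```

In this toy model the error is carried by the difference between the channels, so a shift that raises or lowers both transmitters together leaves the decoded value unchanged.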

The brain needs a way to compare the value of disparate items like food, money, or social status. Dopamine serves as this common currency. It creates a standardized value signal, allowing the brain to make decisions and allocate effort across different domains by translating everything into a single, comparable scale.
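A minimal sketch of the "common currency" idea, under invented numbers: each domain gets its own conversion onto one shared value scale, after which options from unrelated domains can be compared with a single `max`. The conversion rates, effort costs, and names such as `VALUE_FUNCTIONS` and `choose` are hypothetical illustrations, not measured quantities.

```python
# Hypothetical "common currency" sketch: domain-specific conversions map
# food, money, and status onto one shared value scale so they can be compared.
# All conversion rates and effort costs below are invented for illustration.
from typing import Callable, Dict, List, Tuple

VALUE_FUNCTIONS: Dict[str, Callable[[float], float]] = {
    "food_kcal": lambda kcal: kcal / 100.0,   # 100 kcal -> 1 value unit
    "money_usd": lambda usd: usd / 10.0,      # $10 -> 1 value unit
    "status_points": lambda pts: pts * 2.0,   # 1 status point -> 2 value units
}

Option = Tuple[str, str, float, float]  # (label, domain, amount, effort_cost)


def common_value(domain: str, amount: float, effort_cost: float) -> float:
    """Convert an option into shared value units, net of the effort it costs."""
    return VALUE_FUNCTIONS[domain](amount) - effort_cost


def choose(options: List[Option]) -> str:
    """Pick the option with the highest net value on the common scale."""
    best = max(options, key=lambda o: common_value(o[1], o[2], o[3]))
    return best[0]


options: List[Option] = [
    ("eat a snack", "food_kcal", 300, 0.5),     # 3.0 - 0.5 = 2.5
    ("pick up cash", "money_usd", 20, 1.0),     # 2.0 - 1.0 = 1.0
    ("give a talk", "status_points", 4, 4.0),   # 8.0 - 4.0 = 4.0
]
print(choose(options))  # give a talk
```

Once everything sits on one scale, the decision itself is trivial; the hard part, which this sketch simply assumes away, is learning the conversion functions in the first place.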

The "temporal difference" algorithm, which tracks changing expectations, isn't just a theoretical model: the brain appears to implement it through dopamine. Reinforcement learning built on the same prediction-error principle was later scaled up by DeepMind in AlphaGo, the world-champion Go-playing AI, a rare instance of ideas from the study of animal learning feeding directly into a major technological breakthrough.
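For reference, here is the TD(0) update as it is usually written in the reinforcement-learning literature (the notation is the textbook convention, not the source's). Here V(s) is the learned expectation of future reward from state s, r is the reward received on a transition, γ between 0 and 1 discounts later rewards, and α is a learning rate:

$$
\delta_t = r_{t+1} + \gamma\,V(s_{t+1}) - V(s_t), \qquad V(s_t) \leftarrow V(s_t) + \alpha\,\delta_t
$$

The error δ_t is positive when things turn out better than expected, negative when worse, and close to zero when a reward is fully predicted.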

The feeling of dissatisfaction after achieving a major goal is a feature, not a bug. The brain's dopamine system is designed to keep you moving forward. If any single achievement—a partner, a food, a drug—were permanently satisfying, the drive to live and procreate would cease. The system ensures you always have another place to go.