Advanced reasoning models excel with ambiguous inputs because they first deduce the user's underlying needs before executing a task. This ability to fill in the blanks of an underspecified prompt creates a "wow effect": a vague request still yields a high-quality result that users praise.

Related Insights

People struggle with AI prompts because the model lacks background on their goals and progress. The solution is 'Context Engineering': creating an environment where the AI continuously accumulates user-specific information, materials, and intent, reducing the need for constant prompt tweaking.
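
One way to picture context accumulation is a small store that collects goals, materials, and progress notes, then prepends them to every request. The sketch below is illustrative only: `ContextStore`, its fields, and the section labels are hypothetical names chosen for this example, not part of any particular product or library.

```python
from dataclasses import dataclass, field


@dataclass
class ContextStore:
    """Hypothetical container for user-specific context (illustrative only)."""

    goals: list[str] = field(default_factory=list)
    materials: dict[str, str] = field(default_factory=dict)  # name -> short summary
    notes: list[str] = field(default_factory=list)           # progress so far

    def remember(self, note: str) -> None:
        """Record a new piece of progress or intent as it comes up."""
        self.notes.append(note)

    def render(self, request: str, max_notes: int = 5) -> str:
        """Build one prompt: accumulated context first, the request last."""
        recent = self.notes[-max_notes:] if max_notes > 0 else []
        lines = ["Goals:"]
        lines += [f"- {goal}" for goal in self.goals]
        lines.append("Materials:")
        lines += [f"- {name}: {summary}" for name, summary in self.materials.items()]
        lines.append("Recent notes:")
        lines += [f"- {note}" for note in recent]
        lines.append("Request:")
        lines.append(request)
        return "\n".join(lines)


store = ContextStore(goals=["Grow the company blog's readership"])
store.materials["style-guide"] = "Short paragraphs, second person, no jargon"
store.remember("Last week's post on onboarding performed well")
prompt = store.render("Suggest three topics for next week")
```

Because the store carries the background, the request itself can stay short; only the final line changes from one interaction to the next.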

With models like Gemini 3, the key skill is shifting from crafting hyper-specific, constrained prompts to making ambitious, multi-faceted requests. Users trained on older models tend to pare down their asks, but the latest AIs are 'pent up with creative capability' and yield better results from bigger challenges.

As models become more powerful, the primary challenge shifts from improving capabilities to creating better ways for humans to specify what they want. Natural language is too ambiguous and code too rigid, creating a need for a new abstraction layer for intent.

Before delegating a complex task, use a simple prompt to have a context-aware system generate a more detailed and effective prompt. This "prompt-for-a-prompt" workflow adds necessary detail and structure, significantly improving the agent's success rate and saving rework.
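
The "prompt-for-a-prompt" workflow can be sketched as two model calls: the first expands a rough request into a detailed prompt, and the second executes that prompt. This is a minimal sketch under stated assumptions: `llm` stands for any function that takes a prompt string and returns text, and `META_TEMPLATE`, `build_meta_prompt`, and `delegate` are hypothetical names introduced for illustration.

```python
from typing import Callable

# Hypothetical template asking the model to write a better prompt.
META_TEMPLATE = (
    "You are preparing instructions for another AI agent.\n"
    "Known context:\n{context}\n\n"
    "Rough request:\n{request}\n\n"
    "Rewrite the request as a detailed prompt that states the goal, "
    "constraints, required inputs, and the expected output format."
)


def build_meta_prompt(request: str, context: list[str]) -> str:
    """Combine the rough request with known context into a meta-prompt."""
    context_block = "\n".join(f"- {item}" for item in context) or "- (none)"
    return META_TEMPLATE.format(context=context_block, request=request.strip())


def delegate(request: str, context: list[str], llm: Callable[[str], str]) -> str:
    """Stage 1: generate a detailed prompt. Stage 2: run the task with it."""
    detailed_prompt = llm(build_meta_prompt(request, context))
    return llm(detailed_prompt)


def placeholder_llm(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    return f"[model output for a {len(prompt)}-character prompt]"


result = delegate(
    "our blog is not good, make it better",
    ["Audience: developers", "Goal: more newsletter signups"],
    placeholder_llm,
)
```

In practice it may be worth reviewing the generated prompt before the second call, since that is the cheapest point to catch a misread intent.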

To get the best results from AI, treat it like a virtual assistant you can have a dialogue with. Instead of focusing on the perfect single prompt, provide rich context about your goals and then engage in a back-and-forth conversation. This collaborative approach yields more nuanced and useful outputs.

Many AI tools show the model's reasoning before it produces an answer. Reading this internal monologue is a powerful debugging technique: it reveals how the AI is interpreting your instructions, so you can quickly spot misunderstandings and make your prompts clearer.

The early focus on crafting the perfect prompt is obsolete. Sophisticated AI interaction is now about 'context engineering': architecting the entire environment by providing models with the right tools, data, and retrieval mechanisms to guide their reasoning process effectively.

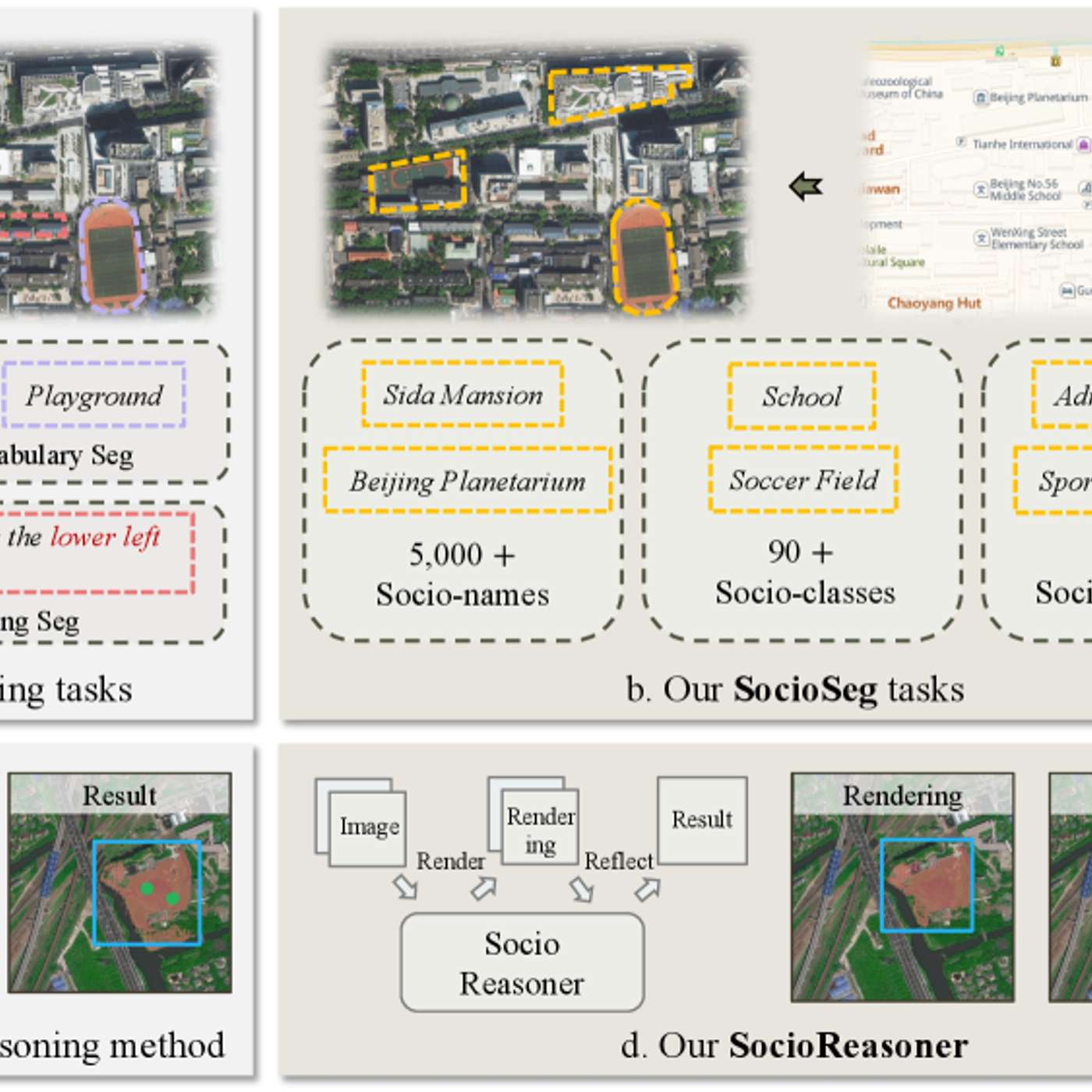

The featured AI model succeeds by reframing urban analysis as a reasoning problem. It uses a two-stage process (generating broad hypotheses, then refining them with detailed evidence) that mimics human cognition and outperforms traditional single-pass pattern recognition systems.

The test intentionally used a simple, conversational prompt one might give a colleague ("our blog is not good...make it better"). The models' varying success reveals that a key differentiator is the ability to interpret high-level intent and independently research best practices, rather than requiring meticulously detailed instructions.

Seemingly simple user requests require a complex sequence of reasoning, tool use, and contextual understanding that is absent from internet training data. AI must be explicitly taught the implicit logic of how a human assistant would research preferences, evaluate options, and use various tools.