Braintrust's CEO argues that developer productivity is already 'tapped out.' Even if AI models become 5% better at writing code, output won't rise dramatically, because the true bottleneck is the human capacity to manage, test, deploy, and respond to user feedback, not the speed of code generation itself.

Related Insights

While AI accelerates code generation, it creates significant new chokepoints. The high volume of AI-generated code leads to "pull request fatigue," requiring more human reviewers per change. It also overwhelms automated testing systems, which must run full cycles for every minor AI-driven adjustment, offsetting initial productivity gains.

As AI coding agents generate vast amounts of code, the most tedious part of a developer's job shifts from writing code to reviewing it. This creates a new product opportunity: building tools that help developers validate and build confidence in AI-written code, making the review process less of a chore.

As AI agents handle the mechanics of code generation, the developer's role shifts toward higher-level work. The new bottlenecks are not typing speed or syntax but cognitive tasks: deciding what to build, designing system architecture, and curating the AI's work.

Simply deploying AI to write code faster doesn't increase end-to-end velocity. It creates a new bottleneck where human engineers are overwhelmed with reviewing a flood of AI-generated code. To truly benefit, companies must also automate verification and validation processes.
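The bottleneck argument can be made concrete with a toy model. The sketch below treats delivery as a serial pipeline in which every change passes through each stage, so sustained throughput equals the capacity of the slowest stage. The function `pipeline_throughput`, the stage names, and all capacity numbers (changes per day) are hypothetical, chosen only for illustration.

```python
def pipeline_throughput(capacities: dict[str, float]) -> tuple[str, float]:
    """Return the bottleneck stage and steady-state throughput of a serial pipeline.

    Every change passes through every stage, so sustained throughput
    cannot exceed the capacity of the slowest stage.
    """
    bottleneck = min(capacities, key=capacities.get)
    return bottleneck, capacities[bottleneck]


# Hypothetical stage capacities in changes per day (illustrative only).
before = {"generate": 10.0, "review": 8.0, "test": 12.0, "deploy": 20.0}
# AI makes generation ten times faster; review and testing are unchanged.
after = {**before, "generate": 100.0}
# Hypothetical: verification is also automated, raising review capacity.
automated = {**after, "review": 50.0}

for label, stages in (("before", before), ("after", after), ("automated", automated)):
    stage, rate = pipeline_throughput(stages)
    print(f"{label}: bottleneck={stage}, throughput={rate} changes/day")
```

Under these assumed numbers, a tenfold faster generation stage leaves throughput unchanged because review remains the constraint; only raising review capacity moves the bottleneck (here, on to testing).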

Most AI coding tools automate the creative part developers enjoy. Factory AI's CEO argues the real value is automating the “organizational molasses”—documentation, testing, and reviews—that consumes most of an enterprise developer’s time and energy.

As AI makes the act of writing code a commodity, the primary challenge is no longer execution but discovery. The most valuable work becomes prototyping and exploring to determine *what* should be built, increasing the strategic importance of the design function.

Even if AI perfects software engineering, automating AI R&D will still be limited by non-coding tasks, because AI research involves far more than software engineering. Furthermore, as the 'low-hanging fruit' disappears, AI assistance might only be enough to sustain the current rate of progress rather than accelerate it.

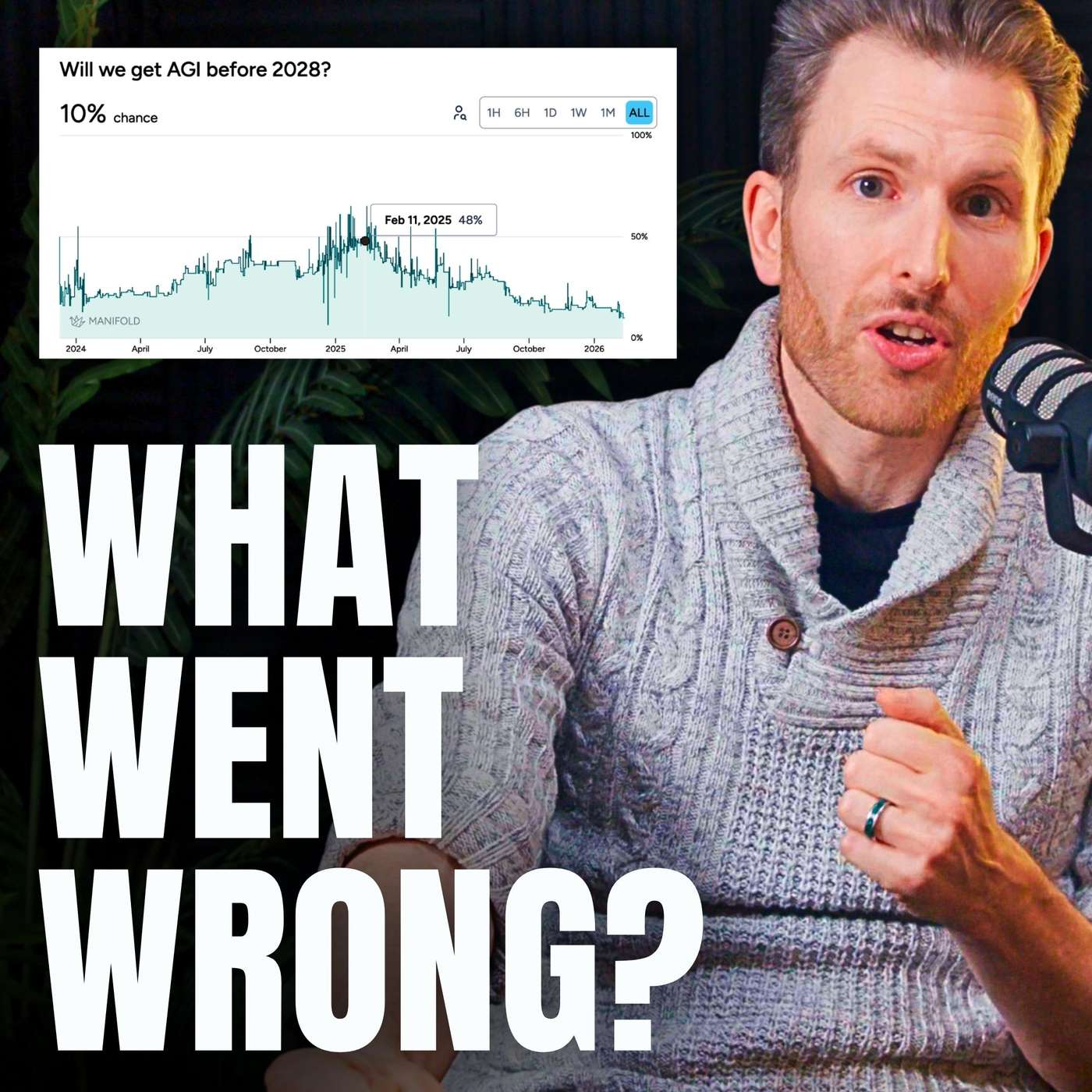

True exponential acceleration toward AGI is currently limited by a human bottleneck: our speed at prompting AI and, more importantly, our capacity to manually validate its work. Hockey-stick growth will begin only when AI can reliably validate its own output, closing the productivity loop.

Even if AI accelerates parts of a workflow like coding, overall progress might plateau because of Amdahl's Law: the overall speedup is capped by the share of work that is not accelerated. Human-dependent tasks like strategic thinking could therefore become the new rate-limiting step.
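Amdahl's Law gives the overall speedup as S = 1 / ((1 - p) + p / s), where p is the fraction of total time spent on the accelerated part and s is how much faster that part becomes. The minimal sketch below assumes, purely hypothetically, that coding accounts for 30% of end-to-end engineering time; the function name `amdahl_speedup` and the constant `CODING_SHARE` are illustrative.

```python
def amdahl_speedup(p: float, s: float) -> float:
    """Overall speedup when a fraction p of the work is accelerated by a factor s.

    Amdahl's Law: S = 1 / ((1 - p) + p / s). The unaccelerated fraction (1 - p)
    caps the speedup at 1 / (1 - p) no matter how large s becomes.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be in [0, 1]")
    if s <= 0:
        raise ValueError("s must be positive")
    return 1.0 / ((1.0 - p) + p / s)


# Hypothetical assumption: coding is 30% of end-to-end engineering time.
CODING_SHARE = 0.3

for s in (1.05, 2.0, 10.0, 1e9):
    print(f"coding {s:g}x faster -> overall {amdahl_speedup(CODING_SHARE, s):.3f}x")
```

Under this assumption, making coding 5% faster yields only about a 1.4% overall gain, and even effectively unlimited coding speed tops out near 1/0.7 ≈ 1.43x, because the remaining 70% of the work is untouched.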

AI agents can generate code far faster than humans can meaningfully review it. The primary challenge is no longer creation but comprehension. Developers spend most of their time trying to understand and validate AI output, a task for which current tools like standard PR interfaces are inadequate.