Even if AI perfects software engineering, automating AI R&D will still be limited by non-coding tasks, because AI companies do far more than software engineering. Furthermore, AI assistance might only be enough to maintain the current rate of progress as the 'low-hanging fruit' disappears, rather than to accelerate it.

Related Insights

As AI agents handle the mechanics of code generation, the primary role of a developer is elevated. The new bottlenecks are not typing speed or syntax, but higher-level cognitive tasks: deciding what to build, designing system architecture, and curating the AI's work.

Simply deploying AI to write code faster doesn't increase end-to-end velocity. It creates a new bottleneck where human engineers are overwhelmed with reviewing a flood of AI-generated code. To truly benefit, companies must also automate verification and validation processes.
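
One way to picture this bottleneck is to treat delivery as a pipeline whose end-to-end rate is set by its slowest stage. The sketch below is illustrative only: the stage names and the per-day figures are hypothetical numbers chosen for the example, not measurements from any company.

```python
def pipeline_throughput(stage_rates: dict[str, float]) -> tuple[str, float]:
    """Return the slowest stage and its rate (changes per day).

    In a sequential pipeline, sustained end-to-end throughput cannot
    exceed the rate of the slowest stage.
    """
    bottleneck = min(stage_rates, key=stage_rates.get)
    return bottleneck, stage_rates[bottleneck]


def review_backlog(generated_per_day: float, reviewed_per_day: float, days: int) -> float:
    """Unreviewed changes accumulated after `days`, starting from an empty queue."""
    return max(0.0, generated_per_day - reviewed_per_day) * days


# Hypothetical rates: AI generation is fast, human review is not.
rates = {"generate": 50.0, "review": 8.0, "deploy": 20.0}
stage, rate = pipeline_throughput(rates)
print(f"bottleneck: {stage} at {rate} changes/day")
print(f"backlog after 10 days: {review_backlog(50.0, 8.0, 10)} changes")
```

Under these assumed numbers, raising the generation rate further changes nothing downstream; only raising the review rate (for example, by automating verification) moves the end-to-end figure.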

As AI agents eliminate the time and skill needed for technical execution, the primary constraint on output is no longer the ability to build, but the quality of ideas. Human value shifts entirely from execution to creative ideation, making it the key driver of progress.

While compute and capital are often cited as AI bottlenecks, the most significant limiting factor is the lack of human talent. There is a fundamental shortage of AI practitioners and data scientists, a gap that current university output and immigration policies are failing to fill, making expertise the most constrained resource.

AI can produce scientific claims and codebases thousands of times faster than humans. However, the meticulous work of validating these outputs remains a human task. This growing gap between generation and verification could create a backlog of unproven ideas, slowing true scientific advancement.

A key strategy for labs like Anthropic is automating AI research itself. By building models that can perform the tasks of AI researchers, they aim to create a feedback loop that dramatically accelerates the pace of innovation.

The ultimate goal for leading labs isn't just creating AGI, but automating the process of AI research itself. By replacing human researchers with millions of "AI researchers," they aim to trigger a "fast takeoff" or recursive self-improvement. This makes automating high-level programming a key strategic milestone.

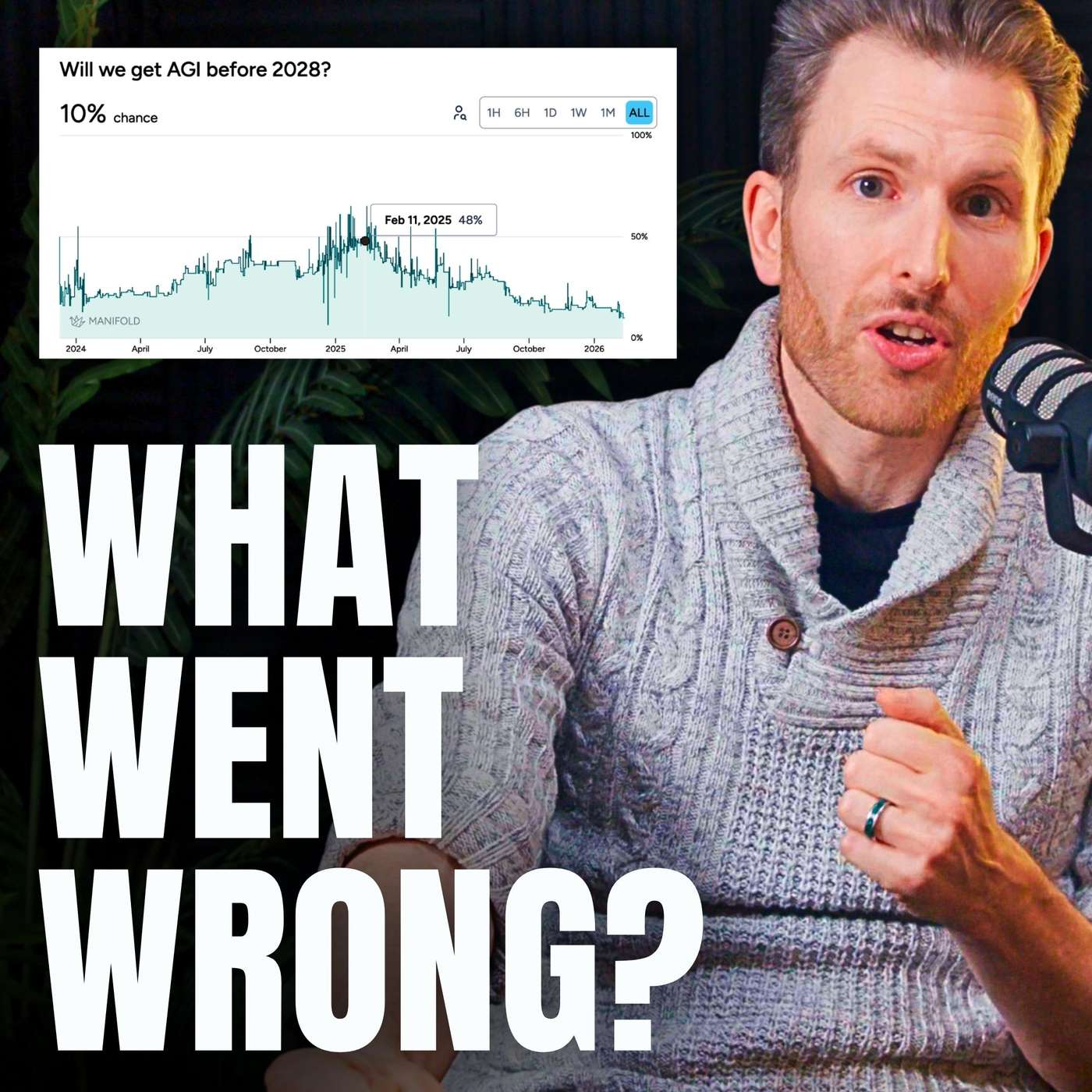

The true exponential acceleration towards AGI is currently limited by a human bottleneck: our speed at prompting AI and, more importantly, our capacity to manually validate its work. Hockey-stick growth will only begin when AI can reliably validate its own output, closing the productivity loop.

The focus on AI writing code is narrow, as coding represents only 10-20% of the total software development effort. The most significant productivity gains will come from AI automating other critical, time-consuming stages like testing, security, and deployment, fundamentally reshaping the entire lifecycle.
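
The 10-20% figure can be turned into a rough ceiling using an Amdahl's-law-style estimate: if coding is a fraction p of total effort and AI speeds up only that part by a factor s, the overall speedup is 1 / ((1 - p) + p / s). The sketch below assumes the stages run one after another and that nothing else changes; the function name is illustrative.

```python
def overall_speedup(coding_fraction: float, coding_speedup: float) -> float:
    """Amdahl's-law estimate of end-to-end speedup.

    coding_fraction: share of total effort spent coding (0.0 to 1.0).
    coding_speedup:  factor by which AI accelerates coding alone
                     (float('inf') models coding becoming instantaneous).
    """
    if not 0.0 <= coding_fraction <= 1.0:
        raise ValueError("coding_fraction must be between 0 and 1")
    if coding_speedup <= 0:
        raise ValueError("coding_speedup must be positive")
    remaining = (1.0 - coding_fraction) + coding_fraction / coding_speedup
    return 1.0 / remaining


for p in (0.10, 0.20):
    for s in (10.0, float("inf")):
        print(f"coding share {p:.0%}, coding speedup {s}x -> overall {overall_speedup(p, s):.2f}x")
```

Even with coding made instantaneous, a 20% coding share caps the overall gain at 1.25x under these assumptions, which is why the remaining stages matter more.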

The primary obstacle to creating a fully autonomous AI software engineer isn't just model intelligence but "controlling entropy." This refers to the challenge of preventing the compounding accumulation of small, 1% errors that eventually derail a complex, multi-step task and get the agent irretrievably off track.
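
A back-of-the-envelope calculation shows how quickly 1% errors compound. The sketch below makes two simplifying assumptions that are not from the source: each step fails independently with the same probability, and any single failure derails the whole task. Under those assumptions, the chance of finishing an n-step task is (1 - error)^n.

```python
import math


def success_probability(per_step_error: float, steps: int) -> float:
    """Chance that every one of `steps` independent steps succeeds."""
    return (1.0 - per_step_error) ** steps


def steps_until_below(per_step_error: float, threshold: float) -> int:
    """Smallest number of steps at which the success chance drops below `threshold`."""
    return math.ceil(math.log(threshold) / math.log(1.0 - per_step_error))


for n in (10, 50, 100, 500):
    print(f"{n:>4} steps at 1% error -> {success_probability(0.01, n):.1%} chance of success")

print("steps until success is less likely than not:", steps_until_below(0.01, 0.5))
```

With a 1% per-step error rate, a 100-step task succeeds only about 37% of the time in this model, so long-horizon agents need either much lower per-step error or a way to detect and recover from mistakes.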