Even if AI accelerates parts of a workflow such as coding, overall progress might stall because of Amdahl's Law: the speedup of a whole system is capped by the portion of the work that is not accelerated. Human-dependent tasks such as strategic thinking could therefore become the new rate-limiting step.
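Amdahl's Law can be written as S = 1 / ((1 - p) + p / s), where p is the fraction of the work that gets faster and s is how much faster that fraction becomes. The short Python sketch below shows the ceiling this creates. The helper name `amdahl_speedup` and the 40% "coding share" of a workflow are hypothetical, chosen only for illustration.

```python
def amdahl_speedup(p: float, s: float) -> float:
    """Overall speedup when a fraction p of the work is sped up by a factor s.

    Amdahl's Law: S = 1 / ((1 - p) + p / s).
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if s <= 0:
        raise ValueError("s must be positive")
    return 1.0 / ((1.0 - p) + p / s)


# Hypothetical assumption: coding is 40% of the workflow;
# the remaining 60% (strategy, review, coordination) stays human-paced.
coding_share = 0.4
for factor in (2, 10, 100, 1_000_000):
    speedup = amdahl_speedup(coding_share, factor)
    print(f"coding {factor}x faster -> workflow {speedup:.3f}x faster")

# As s grows without bound, the speedup approaches 1 / (1 - p).
print(f"ceiling: {1 / (1 - coding_share):.3f}x")
```

Under these assumed numbers, even a million-fold faster coding step leaves the whole workflow just under 1 / (1 - 0.4) ≈ 1.67x faster, because the untouched human-dependent share dominates.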

Related Insights

AI models will quickly automate the majority of expert work, but they will struggle with the final, most complex 25%. For a long time, human expertise will be essential for this 'last mile,' making it the ultimate bottleneck and source of economic value.

Warp's founder argues that as AI masters the mechanics of coding, the primary limiting factor will become our own inability to articulate complex, unambiguous instructions. The shift from precise code to ambiguous natural language reintroduces a fundamental communication challenge for humans to solve.

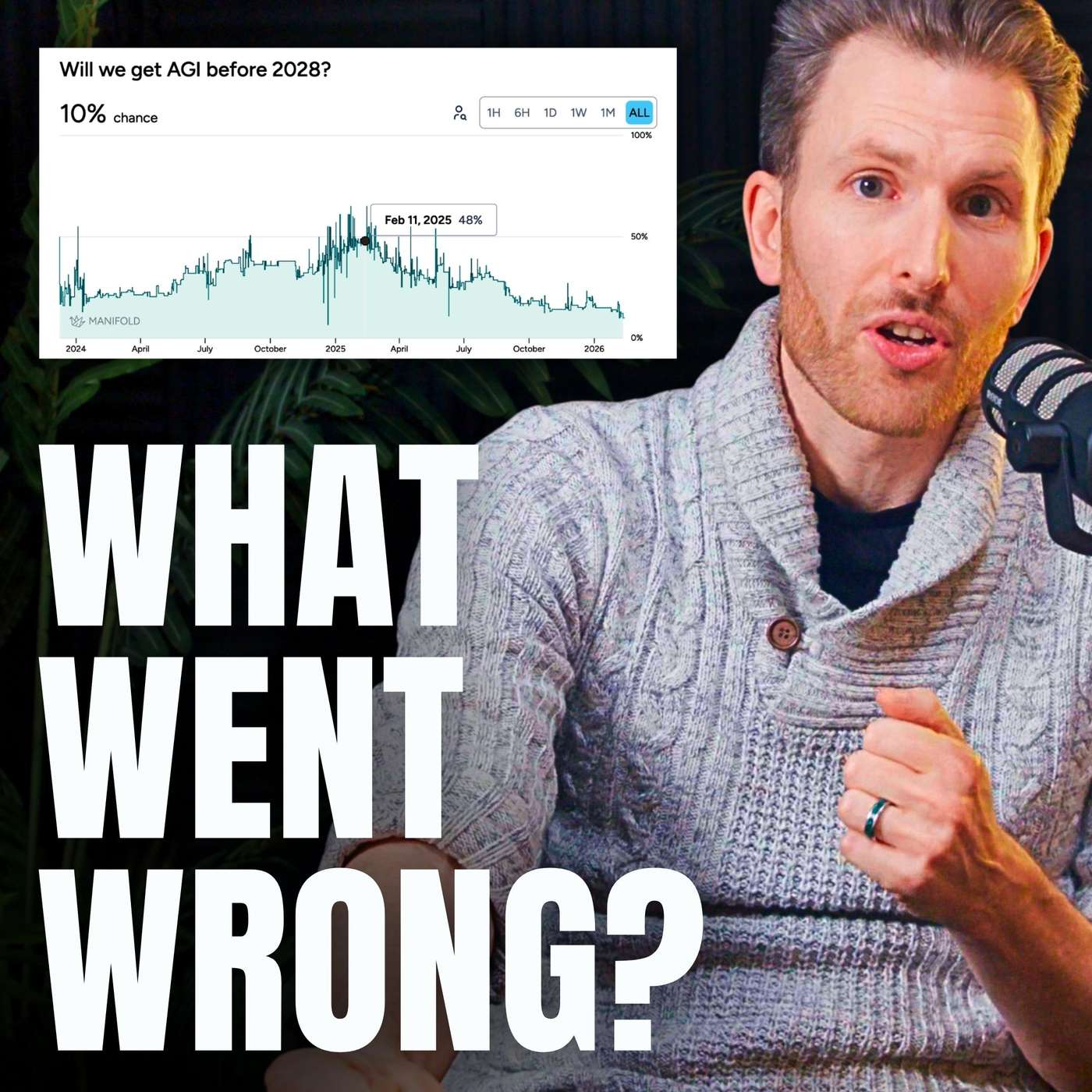

While discourse often focuses on exponential growth, the AI Safety Report treats "progress stalls" as a serious scenario, comparing it to passenger aircraft speeds, which plateaued after 1960. Continued rapid advancement is therefore not guaranteed: technical or resource bottlenecks could halt it.

Previously, compute and data were the limiting factors in AI development. Now, the challenge is scaling the generation of high-quality, human-expert data needed to train frontier models for complex cognitive tasks that go beyond simply processing the public internet.

As AI agents handle the mechanics of code generation, the primary role of a developer is elevated. The new bottlenecks are not typing speed or syntax, but higher-level cognitive tasks: deciding what to build, designing system architecture, and curating the AI's work.

As AI agents eliminate the time and skill needed for technical execution, the primary constraint on output is no longer the ability to build, but the quality of ideas. Human value shifts entirely from execution to creative ideation, making it the key driver of progress.

While compute and capital are often cited as AI bottlenecks, the most significant limiting factor is the lack of human talent. There is a fundamental shortage of AI practitioners and data scientists, a gap that current university output and immigration policies are failing to fill, making expertise the most constrained resource.

AI can produce scientific claims and codebases thousands of times faster than humans. However, the meticulous work of validating these outputs remains a human task. This growing gap between generation and verification could create a backlog of unproven ideas, slowing true scientific advancement.

Even if AI perfects software engineering, automating AI R&D will still be limited by non-coding tasks, because AI companies do far more than write software. Furthermore, as the "low-hanging fruit" disappears, AI assistance might only be enough to maintain the current rate of progress rather than accelerate it.

True exponential acceleration toward AGI is currently limited by a human bottleneck: our speed at prompting AI and, more importantly, our capacity to manually validate its work. Hockey-stick growth will begin only when AI can reliably validate its own output, closing the productivity loop.