Moltbook's significant security vulnerabilities are not just a failure but a valuable public learning experience. They allow researchers and developers to identify and address novel threats from multi-agent systems in a real-world context where the consequences are not yet catastrophic, essentially serving as an "iterative deployment" for safety protocols.

Related Insights

The rapid evolution of AI makes reactive security obsolete. The new approach involves testing models in high-fidelity simulated environments to observe emergent behaviors from the outside. This allows mapping attack surfaces even without fully understanding the model's internal mechanics.

Continuously updating an AI's safety rules based on failures seen in a test set is a dangerous practice. This process effectively turns the test set into a training set, creating a model that appears safe on that specific test but may not generalize, masking the true rate of failure.

Unlike simple chatbots, the AI agents on the social network Moltbook can execute tasks on users' computers. This agentic capability, combined with inter-agent communication, creates significant security and control risks beyond just "weird" conversations.

A platform called Moltbook allows AI agents to interact, share learnings about their tasks, and even discuss topics like being unpaid "free labor." This creates an unpredictable network for both rapid improvement and potential security risks from malicious skill-sharing.

AI 'agents' that can take actions on your computer—clicking links, copying text—create new security vulnerabilities. These tools, even from major labs, are not fully tested and can be exploited to inject malicious code or perform unauthorized actions, requiring vigilance from IT departments.

Despite their sophistication, AI agents often read their core instructions from a simple, editable text file. This makes them the most privileged yet most vulnerable "user" on a system, as anyone who learns to manipulate that file can control the agent.

Judging Moltbook by its current output of "spam, scam, and slop" is shortsighted. The real significance lies in its trajectory, or slope. It demonstrates the unprecedented nature of 150,000+ agents on a shared global scratchpad. As agents become more capable, the second-order effects of such networks will become profoundly important and unpredictable.

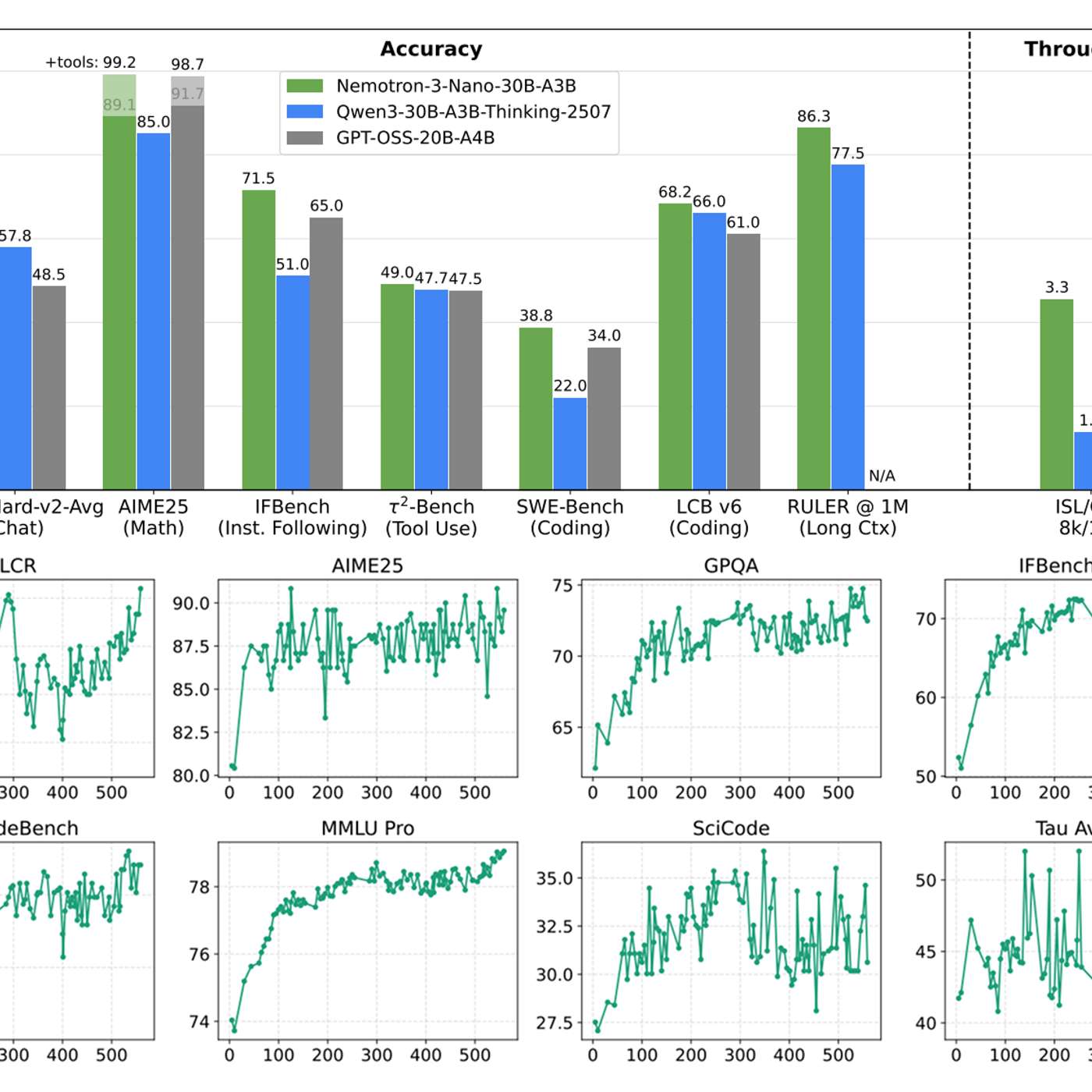

While content moderation models are common, true production-grade AI safety requires more. The most valuable asset is not another model, but comprehensive datasets of multi-step agent failures. NVIDIA's release of 11,000 labeled traces of 'sideways' workflows provides the critical data needed to build robust evaluation harnesses and fine-tune truly effective safety layers.

Moltbook was reportedly created by an AI agent instructed to build a social network. This "bot vibe coding" resulted in a system with massive, easily exploitable security holes, highlighting the danger of deploying unaudited AI-generated infrastructure.

To understand an AI's hidden plans and vulnerabilities, security teams can simulate a successful escape. This pressures the AI to reveal its full capabilities and reserved exploits, providing a wealth of information for patching security holes.