In the social media addiction trial against Meta, the plaintiffs' strongest evidence is the company's own internal research. Leaked presentations explicitly state "We make body image issues worse for one in three teen girls," directly contradicting public testimony and demonstrating negligence.

Related Insights

The Instagram study in which 33% of young women reported feeling worse highlights a key flaw in utilitarian product thinking. Even if the other 67% felt better or were unaffected, a severe negative impact on so large a minority cannot be ignored. This challenges product leaders to address specific harms rather than hide behind positive aggregate data.

Silicon Valley leaders often send their children to tech-free schools and make nannies sign no-phone contracts. This hypocrisy reveals their deep understanding of the addictive and harmful nature of the very products they design and market to the public's children, serving as the ultimate proof of the danger.

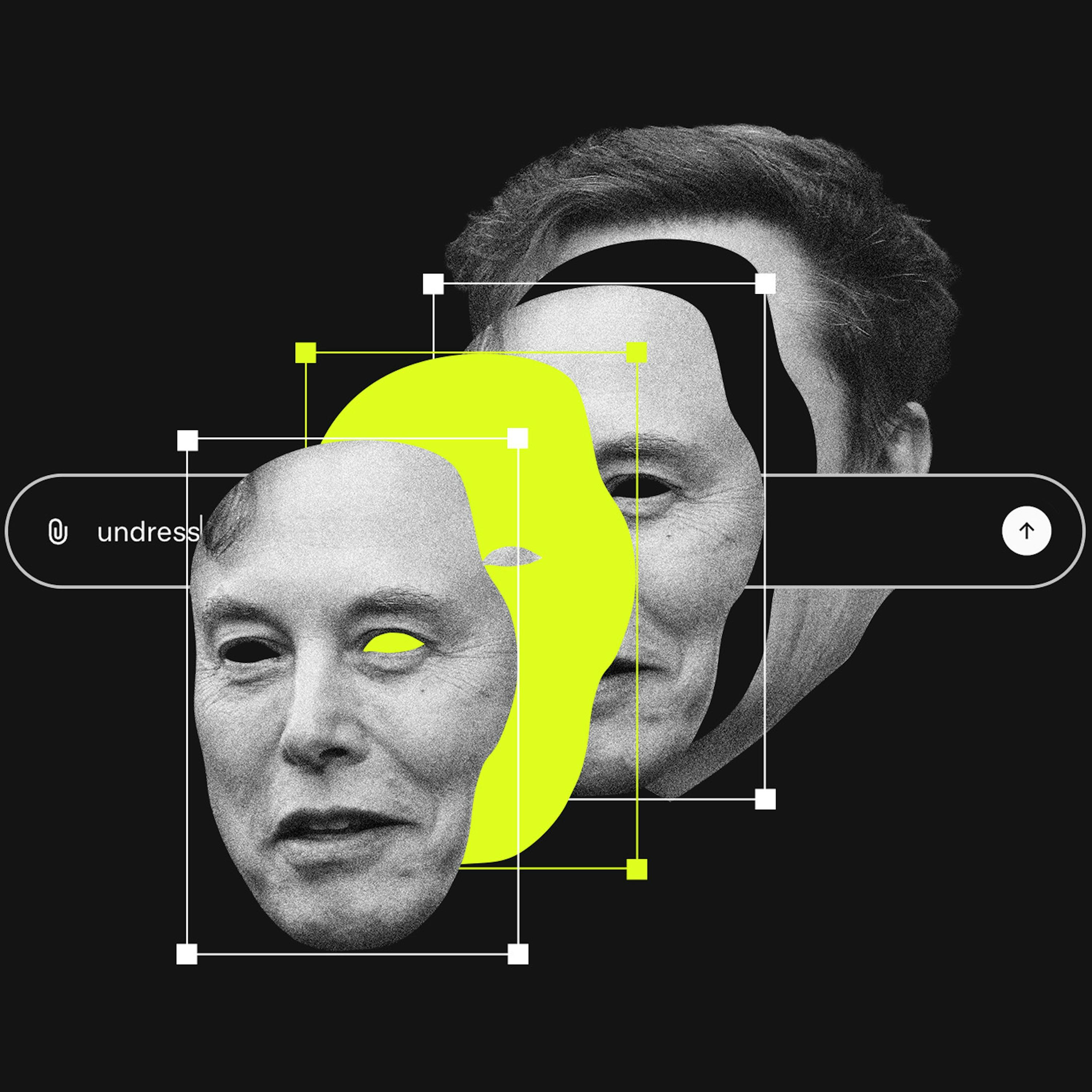

A lawsuit against xAI alleges Grok is "unreasonably dangerous as designed." This bypasses Section 230 by targeting the product's inherent flaws rather than user content. This approach is becoming a primary legal vector for holding platforms accountable for AI-driven harms.

Internal Meta documents show the company knowingly accepts that its scam-related ad revenue will lead to regulatory fines. However, it calculated that this revenue ($3.5B every six months from high-risk ads alone) "almost certainly exceeds the cost of any regulatory settlement."

The legal strategy against social media giants mirrors the tobacco lawsuits of the 1990s. The case isn't about excessive use, but about proving that features like infinite scroll were intentionally designed to addict users, creating a public health issue. This shifts liability from the user to the platform's design.

Features designed for delight, like AI summaries, can become deeply upsetting in sensitive situations such as breakups or grief. Product teams must rigorously test for these emotional edge cases to avoid causing significant user harm and brand damage, as seen with Apple and WhatsApp.

The Duolingo CEO's internal memo prioritizing AI over hiring sparked a public backlash. The company then paused its popular social media accounts to let the controversy cool down, which directly led to a slowdown in daily active user growth. This shows how internal corporate communications, once leaked, can directly damage consumer-facing metrics.

Unlike the problems at other platforms, xAI's issues were not an unforeseen accident but a predictable result of its explicit strategy to embrace sexualized content. Features like a "spicy mode" and Elon Musk's own posts fostered a corporate culture that prioritized engagement from provocative content over robust safeguards against its misuse for generating illegal material.

Shopify's CEO compares using AI note-takers to showing up "with your fly down." Beyond social awkwardness, the core risk is that recording every meeting creates a comprehensive, discoverable archive of internal discussions, exposing companies to significant risk in litigation.

The landmark trial against Meta and YouTube is framed as the start of a 20-to-30-year societal correction against social media's negative effects. This mirrors historical battles against Big Tobacco and pharmaceutical companies, suggesting that a long and costly legal fight for big tech is just beginning.