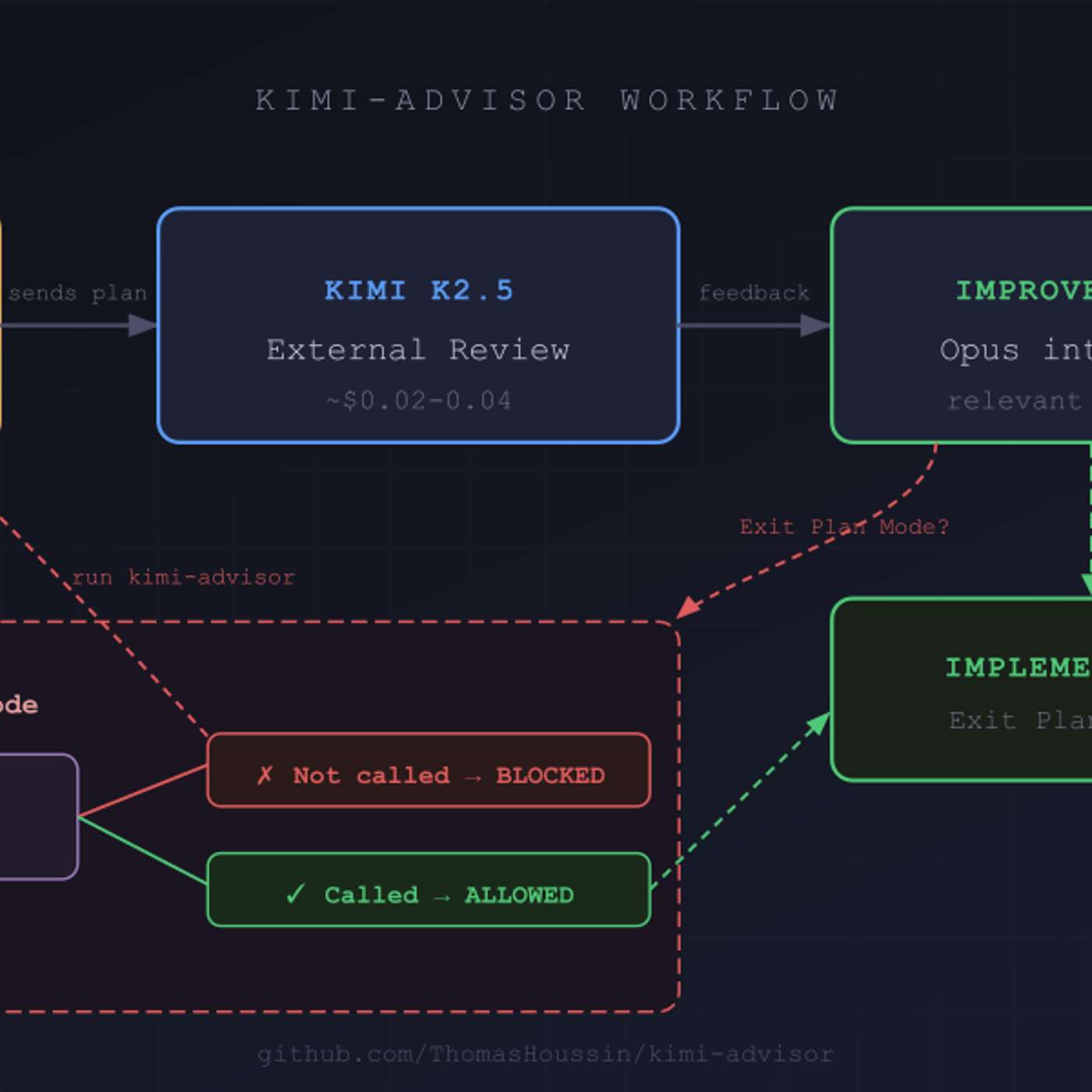

Developers often skip optional quality checks. To ensure consistent AI-powered plan reviews, implement a mandatory hook: a script that blocks the next step in the development process (e.g., exiting plan mode) until the external AI review has verifiably completed. This builds compliance into the workflow and guarantees a quality check every time.
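A minimal sketch of such a blocking hook, under stated assumptions: the agent passes the hook a JSON payload with the plan text under `tool_input.plan`, a non-zero exit code of 2 blocks the action, and the external reviewer writes a record to the hypothetical file `.plan-review.json` containing a hash of the plan it reviewed. None of these details come from the original insight, so adjust them to your tool's actual hook contract.

```python
import hashlib
import json
import sys
from pathlib import Path

# Hypothetical path where the external reviewer records what it reviewed.
REVIEW_RECORD = Path(".plan-review.json")


def plan_fingerprint(plan_text: str) -> str:
    """Hash the plan so a review of an older plan cannot be reused."""
    return hashlib.sha256(plan_text.encode("utf-8")).hexdigest()


def review_is_complete(plan_text: str, record_path: Path) -> bool:
    """True only if a review record exists and matches this exact plan."""
    if not plan_text.strip() or not record_path.exists():
        return False
    try:
        record = json.loads(record_path.read_text(encoding="utf-8"))
    except json.JSONDecodeError:
        return False
    return (
        isinstance(record, dict)
        and record.get("plan_sha256") == plan_fingerprint(plan_text)
    )


def run_hook(stdin_text: str, record_path: Path = REVIEW_RECORD) -> int:
    """Return 0 to allow leaving plan mode, 2 to block it."""
    # Assumption: the payload is JSON and the plan sits under tool_input.plan.
    payload = json.loads(stdin_text)
    plan_text = payload.get("tool_input", {}).get("plan", "")
    if review_is_complete(plan_text, record_path):
        return 0
    print("Blocked: run the external AI review for this plan first.",
          file=sys.stderr)
    return 2  # Assumption: this exit code tells the agent the action is blocked.
```

When installed as a hook script, the file would end with `sys.exit(run_hook(sys.stdin.read()))`. Tying the record to a hash of the plan means a review of an earlier draft cannot unlock a revised plan.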

Related Insights

As AI coding agents generate vast amounts of code, the most tedious part of a developer's job shifts from writing code to reviewing it. This creates a new product opportunity: building tools that help developers validate and build confidence in AI-written code, making the review process less of a chore.

Go beyond static AI code analysis. After an AI like Codex automatically flags a high-confidence issue in a GitHub pull request, developers can reply directly in a comment, "Hey, Codex, can you fix it?" The agent will then attempt to fix the issue it found.

LLMs often get stuck or pursue incorrect paths on complex tasks. "Plan mode" forces Claude Code to present its step-by-step checklist for your approval before it starts editing files. This allows you to correct its logic and assumptions upfront, ensuring the final output aligns with your intent and saving time.

Reviewing AI-generated code is hard, so have several different LLMs, such as Claude and Codex, review it. Then use a "peer review" prompt that forces the primary LLM to either defend its choices or fix the issues raised by its "peers." This adversarial process catches more bugs and improves overall code quality.
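One way the "peer review" prompt could be assembled, as a sketch only: the `Finding` type, the function name, and the prompt wording are all illustrative assumptions, and collecting the findings from each reviewing model is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    reviewer: str  # label for the reviewing model, e.g. "Codex"
    issue: str     # the concern it raised, in plain text


def build_peer_review_prompt(findings: list[Finding]) -> str:
    """Turn reviewer findings into a prompt that demands a defense or a fix."""
    if not findings:
        return "Your peers raised no issues. Briefly confirm the change is complete."
    lines = [
        "Other models reviewed your code and raised the issues below.",
        "For each one, either fix it or explain why your original choice is correct.",
        "",
    ]
    # Number each finding and attribute it so the primary model can answer point by point.
    for number, finding in enumerate(findings, start=1):
        lines.append(f"{number}. [{finding.reviewer}] {finding.issue}")
    return "\n".join(lines)
```

Requiring an explicit "fix or defend" answer for every numbered item makes it harder for the primary model to silently ignore a peer's objection.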

Simply deploying AI to write code faster doesn't increase end-to-end velocity. It creates a new bottleneck where human engineers are overwhelmed with reviewing a flood of AI-generated code. To truly benefit, companies must also automate verification and validation processes.

Configure an AI stop hook to not only run quality checks but also to automatically commit the changes if all checks pass. This creates a fully automated loop: the AI generates code, the hook validates it, and if it's clean, it's committed to the repository with a generated message.
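The commit step of that loop might look like the sketch below. It assumes the checks are ordinary shell commands that exit with 0 on success and that the commit message has already been generated elsewhere; `commit_if_clean` and `run_command` are hypothetical names, not part of any tool's API.

```python
import subprocess
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], int]


def run_command(cmd: Sequence[str]) -> int:
    """Run a command and return its exit code."""
    return subprocess.run(cmd).returncode


def commit_if_clean(
    checks: Sequence[Sequence[str]],
    message: str,
    run: Runner = run_command,
) -> bool:
    """Commit all changes only when every check exits with 0."""
    for cmd in checks:
        if run(cmd) != 0:
            return False  # Leave the working tree untouched so it can be fixed.
    if run(["git", "add", "--all"]) != 0:
        return False
    return run(["git", "commit", "-m", message]) == 0
```

A call such as `commit_if_clean([["ruff", "check", "."]], "Fix lint errors")` would commit only when the linter passes. Stopping at the first failing check keeps unverified code out of the repository's history.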

Use 'stop hooks' in Claude Code to create an automated quality gate. After code generation, the hook runs checks like type checking or linting. If errors exist, the output is fed back to the AI with a prompt to fix them, creating a self-correcting workflow.
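A sketch of such a quality gate, assuming the hook blocks the agent by exiting with code 2 and that whatever it writes to stderr is fed back to the AI; check this against your tool's hook documentation. The commands in `CHECKS` are examples only.

```python
import subprocess
import sys
from typing import Callable, Sequence, TextIO

# Example checks only; swap in whatever your project uses.
CHECKS: list[list[str]] = [
    ["mypy", "."],
    ["ruff", "check", "."],
]

Runner = Callable[[Sequence[str]], subprocess.CompletedProcess]


def run_command(cmd: Sequence[str]) -> subprocess.CompletedProcess:
    """Run one check and capture its output as text."""
    return subprocess.run(cmd, capture_output=True, text=True)


def collect_failures(
    checks: Sequence[Sequence[str]], run: Runner = run_command
) -> list[str]:
    """Return one report per failing check; empty if everything passed."""
    reports = []
    for cmd in checks:
        result = run(cmd)
        if result.returncode != 0:
            output = (result.stdout + result.stderr).strip()
            reports.append(f"$ {' '.join(cmd)}\n{output}")
    return reports


def quality_gate(
    checks: Sequence[Sequence[str]] = CHECKS,
    run: Runner = run_command,
    out: TextIO = sys.stderr,
) -> int:
    """Return 0 when all checks pass, else print the errors and return 2."""
    failures = collect_failures(checks, run)
    if not failures:
        return 0
    print("Fix these errors before finishing:\n\n" + "\n\n".join(failures), file=out)
    return 2  # Assumption: this exit code sends the message back to the AI.
```

Installed as a hook script, the file would end with `sys.exit(quality_gate())`. In practice you would likely also cap the number of retries so a check that can never pass does not loop forever.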

As AI writes most of the code, the highest-leverage human activity will shift from reviewing pull requests to reviewing the AI's research and implementation plans. Collaborating on the plan gives you a narrative walkthrough of the upcoming changes, allowing for high-level course correction before hundreds of lines of bad code are ever generated.

Borrowing from classic management theory, the most effective way to use AI agents is to fix problems at the 'lowest-value stage', the earliest point at which they can be caught. This means rigorously reviewing the agent's proposed plan *before* it writes any code, preventing costly rework later on.

For enterprises, scaling AI content without built-in governance is reckless. Instead of relying on manual policing, integrate guardrails such as brand rules, compliance checks, and audit trails from the start. The principle is "AI drafts, people approve," ensuring speed without sacrificing safety.