OpenAI is restricting its models from giving tailored legal or medical advice. This isn't about nerfing the AI's capabilities; it's a strategic legal maneuver to avoid liability and lawsuits alleging that the company is practicing licensed professions without credentials.

Related Insights

Perplexity's legal defense against Amazon's lawsuit reframes its AI agent not as a scraper bot, but as a direct extension of the user. By arguing "software is becoming labor," it claims the agent inherits the user's permissions to access websites. This novel legal argument fundamentally challenges the enforceability of current terms of service in the age of AI.

To ensure reliability in healthcare, ZocDoc doesn't give LLMs free rein. It wraps them in a hybrid system where traditional, deterministic code orchestrates the AI's tasks, sets firm boundaries, and knows when to hand off to a human, preventing the 'praying for the best' approach common with direct LLM use.
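The hybrid pattern described above can be illustrated with a minimal sketch. This is not ZocDoc's implementation: the intent names, the emergency keywords, the 0.8 confidence threshold, and the `fake_classifier` stand-in for an LLM call are all hypothetical. The point it shows is that the model only proposes an action, while plain deterministic code enforces the boundaries and decides when a human takes over.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical whitelist of tasks the deterministic layer lets the model perform.
ALLOWED_INTENTS = {"book_appointment", "reschedule", "cancel"}
# Assumed cutoff for illustration only; not a figure from ZocDoc.
CONFIDENCE_THRESHOLD = 0.8
# Hypothetical hard-coded triggers that bypass the model entirely.
EMERGENCY_TERMS = ("chest pain", "emergency")


@dataclass
class ModelResult:
    intent: str
    confidence: float


def route_request(text: str, classify: Callable[[str], ModelResult]) -> str:
    """Deterministic orchestrator: the model proposes, the code decides."""
    # Firm boundary checked before the model is consulted at all.
    if any(term in text.lower() for term in EMERGENCY_TERMS):
        return "handoff:human"
    result = classify(text)
    # Anything outside the whitelisted task set goes to a person.
    if result.intent not in ALLOWED_INTENTS:
        return "handoff:human"
    # So do low-confidence proposals.
    if result.confidence < CONFIDENCE_THRESHOLD:
        return "handoff:human"
    return f"run:{result.intent}"


def fake_classifier(text: str) -> ModelResult:
    # Hypothetical stand-in for an LLM call; a real system would query a model here.
    lowered = text.lower()
    if "cancel" in lowered:
        return ModelResult("cancel", 0.95)
    if "move" in lowered:
        return ModelResult("reschedule", 0.6)
    return ModelResult("medical_advice", 0.9)
```

With this sketch, "Please cancel my appointment" is routed to `run:cancel`, while "Can you move my visit?" (confidence below the threshold), "Should I double my dose?" (an intent outside the whitelist), and "I have chest pain" (a hard-coded trigger) are all handed off to a human.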

Despite the potential for AI to create more efficient legal services, new tech-first law firms face significant hurdles. The established reputation of a major law firm ("the name on the letterhead") sends a powerful signal in litigation. Furthermore, incumbent firms carry malpractice insurance, meaning they assume liability for mistakes—a crucial function AI startups cannot easily replicate.

As AI allows any patient to generate well-reasoned, personalized treatment plans, the medical system will face pressure to evolve beyond rigid standards. This will necessitate reforms around liability, data access, and a patient's "right to try" non-standard treatments that are demonstrably well-researched via AI.

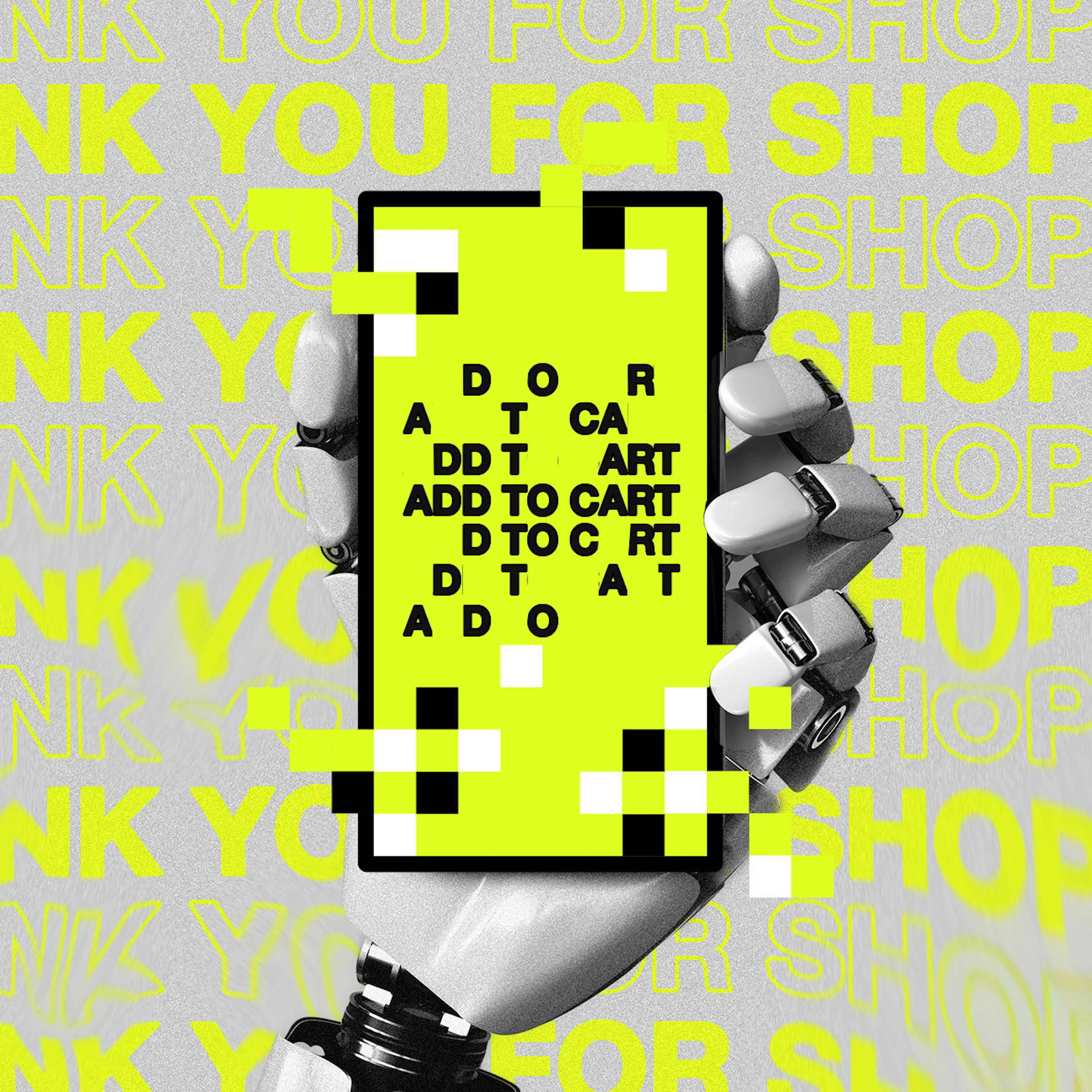

Current AI tools are empowering laypeople to generate a flood of low-quality legal filings. This 'sludge' overwhelms the courts and creates more work for skilled attorneys who must respond to the influx of meritless litigation, ironically boosting demand for the very profession AI is meant to disrupt.

Insurers like AIG are seeking to exclude liabilities from AI use, such as deepfake scams or chatbot errors, from standard corporate policies. This forces businesses to either purchase expensive, capped add-ons or assume a significant new category of uninsurable risk.

Laws like California's SB243, allowing lawsuits for "emotional harm" from chatbots, create an impossible compliance maze for startups. This fragmented regulation, while well-intentioned, benefits incumbents who can afford massive legal teams, thus stifling innovation and competition from smaller players.

The core legal battle is a referendum on "fair use" for the AI era. If AI summaries are deemed "transformative" (a new work), it's a win for AI platforms. If they're "derivative" (a repackaging), it could force widespread content licensing deals.

Venture capitalist Keith Rabois observes a new behavior: founders are using ChatGPT for initial legal research and then presenting those findings to challenge or verify the advice given by their expensive law firms, shifting the client-provider power dynamic.

The CEO contrasts general-purpose AI with their "courtroom-grade" solution, built on a proprietary, authoritative data set of 160 billion documents. This ensures outputs are grounded in actual case law and verifiable, addressing the core weaknesses of consumer models for professional use.