Program equilibrium isn't just an abstract concept; it serves as a direct model for how autonomous AI systems could interact. It also provides a powerful analogy for human institutions like governments, where laws and constitutions act as a transparent "source code" governing their behavior.

Related Insights

In multi-agent simulations, agents that draw on a shared source of randomness can achieve stable equilibria. With private randomness, coordinating punishment becomes nearly impossible, because one agent cannot verify whether another's defection was malicious or a justified response to a third party's actions.

Early program equilibrium strategies relied on checking if an opponent's source code was identical. This approach is extremely fragile, as trivial changes like an extra space or a different variable name break cooperation, making it impractical for real-world applications.
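The fragility of exact source matching can be seen in a minimal sketch. The function name `clique_bot` and the source strings below are hypothetical, chosen only for illustration; the bot cooperates only when the opponent's source text is character-for-character identical to its own.

```python
def clique_bot(my_source: str, opponent_source: str) -> str:
    """Cooperate ("C") only on an exact textual match, otherwise defect ("D")."""
    return "C" if opponent_source == my_source else "D"


# Hypothetical source text for one program (an illustrative assumption).
SOURCE_A = "def play(me, other):\n    return 'C' if other == me else 'D'\n"

# Behaviorally equivalent variants: a renamed variable and a trailing space.
SOURCE_RENAMED = SOURCE_A.replace("other", "opp")
SOURCE_PADDED = SOURCE_A + " "

same = clique_bot(SOURCE_A, SOURCE_A)            # identical text
renamed = clique_bot(SOURCE_A, SOURCE_RENAMED)   # same logic, different name
padded = clique_bot(SOURCE_A, SOURCE_PADDED)     # same logic, extra space

print(same, renamed, padded)
```

Only the first pairing cooperates. The two variants behave identically to the original program, yet the textual comparison treats them as strangers and defects.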

In program equilibrium, players submit computer programs instead of actions. These programs can read each other's source code, allowing them to verify cooperative intent and sustain mutual cooperation in dilemmas like the one-shot Prisoner's Dilemma, an outcome that cannot be an equilibrium in standard game theory.

Dario Amodei suggests a novel approach to AI governance: a competitive ecosystem where different AI companies publish the "constitutions" or core principles guiding their models. This allows for public comparison and feedback, creating a market-like pressure for companies to adopt the best elements and improve their alignment strategies.

AI models are now participating in creating their own governing principles. Anthropic's Claude contributed to writing its own constitution, blurring the line between tool and creator and signaling a future where AI recursively defines its own operational and ethical boundaries.

AI could enable 'outcome-oriented' legislation in place of static text. Lawmakers could simulate a bill's effects before passing it and embed dynamic triggers that automatically enact policies based on real-time data, like unemployment rates or tariff changes.

To overcome brittle code-matching, AIs can use formal logic to prove cooperative intent. This is enabled by Löb's Theorem, a result from provability logic that lets a program conclude "my opponent cooperates" without falling into an infinite regress of reasoning about reasoning, creating a robust cooperative equilibrium.
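For reference, Löb's Theorem can be stated as follows, where $\Box P$ reads "$P$ is provable" in the formal system the programs reason in (the notation is an assumption of this sketch, not taken from the source):

$$
\Box(\Box P \rightarrow P) \rightarrow \Box P
$$

A rough outline of how it applies: suppose two programs each cooperate whenever they can prove that the other cooperates, and let $P$ stand for "both programs cooperate". If $P$ were provable, each program would find that proof and cooperate, so $\Box P \rightarrow P$ holds and is itself provable. Löb's Theorem then yields $\Box P$, so the proof search succeeds and both programs cooperate without either one having to simulate the other indefinitely.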

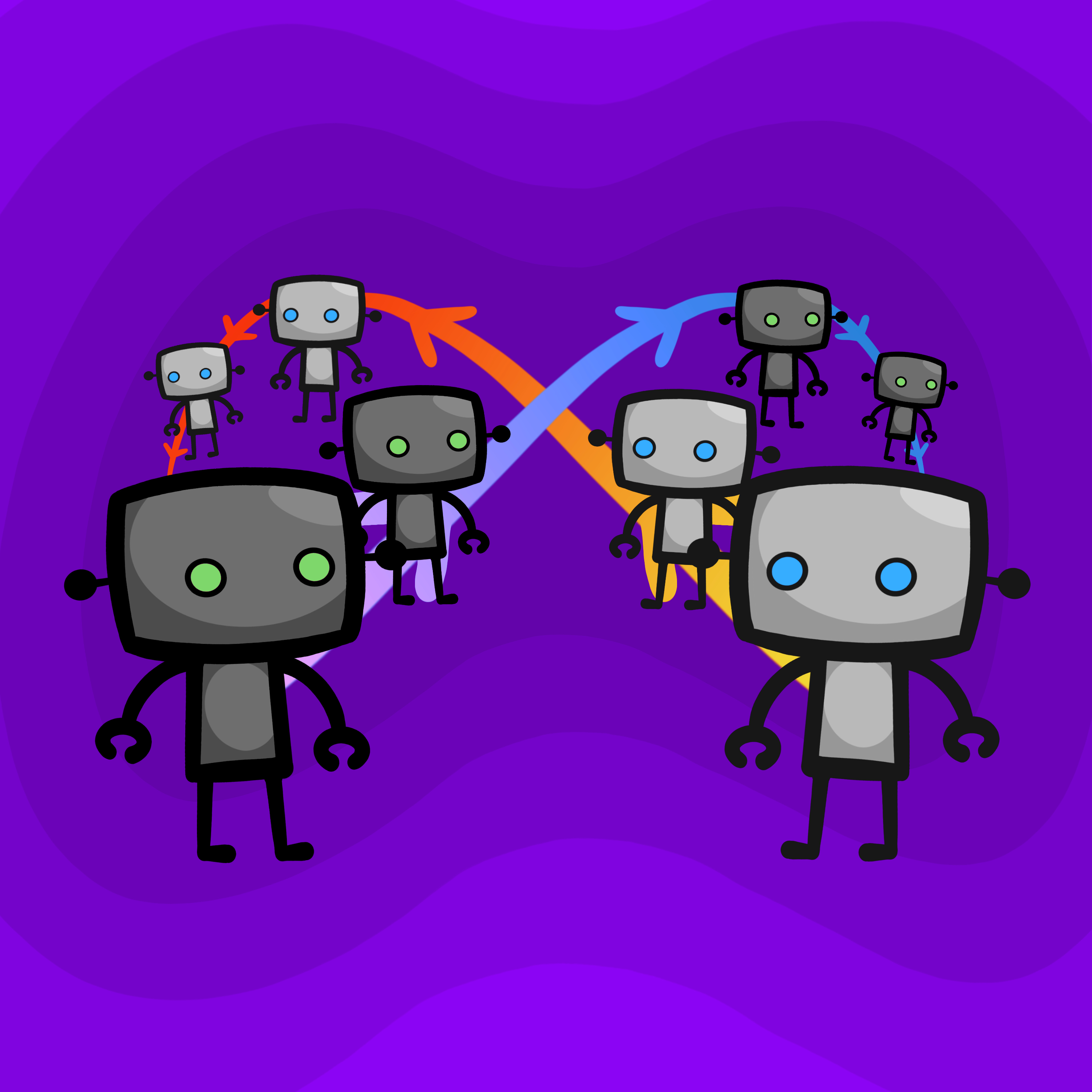

Despite their different mechanisms, advanced cooperative strategies like proof-based (Löbian) and simulation-based (epsilon-grounded) bots can successfully cooperate with each other. This suggests a potential for robust interoperability between independently designed rational agents, a positive sign for AI safety.
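The simulation-based side can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a definitive implementation: programs are modeled as Python callables that receive their opponent, the names `make_grounded_fair_bot` and `defect_bot` are hypothetical, and the proof-based bot is not modeled here. With probability `epsilon` the bot cooperates outright; otherwise it simulates the opponent playing against itself and copies the result. The small chance of cooperating unconditionally is what stops two such bots from simulating each other forever.

```python
import random


def make_grounded_fair_bot(epsilon: float, rng: random.Random):
    """Build a bot that cooperates with probability epsilon, else mirrors a simulation."""
    def bot(opponent):
        if rng.random() < epsilon:
            return "C"            # grounding step: cooperate without simulating
        return opponent(bot)      # otherwise do what the opponent does against us
    return bot


def defect_bot(opponent):
    """Always defects, regardless of the opponent."""
    return "D"


rng = random.Random(0)  # seeded so the run is repeatable
bot_a = make_grounded_fair_bot(0.2, rng)
bot_b = make_grounded_fair_bot(0.2, rng)

# Two grounded bots: every chain of simulations ends in the grounding step.
mutual = [bot_a(bot_b) for _ in range(200)]

# Against a pure defector the bot mostly defects, cooperating only when grounded.
versus_defector = [bot_a(defect_bot) for _ in range(1000)]

print(set(mutual), versus_defector.count("D"))
```

Between two grounded bots the simulation chain can only terminate through the grounding step, so every completed game ends in cooperation. Against `defect_bot` the bot is exploited only in the roughly `epsilon` share of games where it cooperates without checking.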

A key finding is that almost any outcome better than mutual punishment can be a stable equilibrium (a "folk theorem"). While this enables cooperation, it creates a massive coordination problem: with so many possible "good" outcomes, agents may fail to converge on the same one, leading to suboptimal results.
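A small sketch makes the "better than mutual punishment" condition concrete. The payoff numbers below are the usual textbook Prisoner's Dilemma values, assumed here for illustration, and the helper `minmax_value` is a hypothetical name. It computes the lowest payoff each player can be held to by a punishing opponent, then lists the pure outcomes that give both players at least that much.

```python
from itertools import product

ACTIONS = ("C", "D")

# Illustrative payoffs (row player, column player); assumed, not from the source.
PAYOFFS = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def minmax_value(player: int) -> int:
    """Payoff the opponent can hold `player` down to, given that player best-responds."""
    best_responses = []
    for opp_action in ACTIONS:
        best = max(
            PAYOFFS[(a, opp_action) if player == 0 else (opp_action, a)][player]
            for a in ACTIONS
        )
        best_responses.append(best)
    return min(best_responses)


# The punishment (threat) point: what each player gets under the harshest punishment.
threat_point = (minmax_value(0), minmax_value(1))

# Pure outcomes in which neither player does worse than the threat point.
sustainable = [
    profile
    for profile in product(ACTIONS, repeat=2)
    if all(PAYOFFS[profile][i] >= threat_point[i] for i in range(2))
]

print(threat_point, sustainable)
```

Only pure outcomes are enumerated here. Once randomized mixtures of outcomes are allowed, a whole region of payoff pairs sits above the threat point, which is where the coordination problem comes from.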

A more likely AI future involves an ecosystem of specialized agents, each mastering a specific domain (e.g., physical vs. digital worlds), rather than a single, monolithic AGI that understands everything. These agents will require protocols to interact.