Simulating strategies with memory (like "grim trigger") or with multiple players causes an exponential explosion of simulation branches. This can be solved by having all simulated agents draw from the same shared sequence of random numbers, which forces all simulation branches to halt at the same conceptual "time step."
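The shared-randomness idea can be illustrated with a minimal sketch. It assumes a simplified multi-player setting with no memory, in which each agent cooperates only if every simulated opponent cooperates. The names `SharedRandomness`, `decide`, and `halt_steps` are hypothetical, chosen for illustration, and are not taken from the source. Every agent at nesting level `step` reads the same draw, so all branches ground out at the same level.

```python
import random


class SharedRandomness:
    """A lazily extended sequence of uniform draws that every simulated agent reads."""

    def __init__(self, seed):
        self._rng = random.Random(seed)
        self._draws = []

    def draw(self, step):
        # Extend the sequence on demand; the value at a given step never changes.
        while len(self._draws) <= step:
            self._draws.append(self._rng.random())
        return self._draws[step]


def decide(step, shared, epsilon, n_players, halt_steps):
    """Return True (cooperate) or False (defect) for one agent at nesting level `step`."""
    if shared.draw(step) < epsilon:
        halt_steps.append(step)  # this branch grounds out here
        return True
    # Otherwise simulate every other player one level deeper
    # and cooperate only if all of them cooperate.
    outcomes = [
        decide(step + 1, shared, epsilon, n_players, halt_steps)
        for _ in range(n_players - 1)
    ]
    return all(outcomes)


halt_steps = []
decide(0, SharedRandomness(seed=0), epsilon=0.5, n_players=3, halt_steps=halt_steps)
print(set(halt_steps))  # one element: every branch grounded at the same step
```

If each branch drew its own private random number instead, branches would ground at different depths, and some could keep spawning sub-simulations long after others had stopped.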

Related Insights

In multi-agent simulations, if agents use a shared source of randomness, they can achieve stable equilibria. If they use private randomness, coordinating punishment becomes nearly impossible because one agent cannot verify if another's defection was malicious or a justified response to a third party's actions.

When tested at scale in Civilization, different LLMs don't just produce random outputs; they develop consistent and divergent strategic 'personalities.' One model might consistently play aggressively, while another favors diplomacy, revealing that LLMs encode coherent, stable reasoning styles.

Beyond supervised fine-tuning (SFT) and human feedback (RLHF), reinforcement learning (RL) in simulated environments is the next evolution. These "playgrounds" teach models to handle messy, multi-step, real-world tasks where current models often fail catastrophically.

The AI's ability to handle novel situations isn't just an emergent property of scale. Wayve actively trains "world models," which are internal generative simulators. This enables the AI to reason about what might happen next, leading to sophisticated behaviors like nudging into intersections or slowing in fog.

Moonshot overcame the tendency of LLMs to default to sequential reasoning—a problem they call "serial collapse"—by using Parallel Agent Reinforcement Learning (PARL). They forced an orchestrator model to learn parallelization by giving it time and compute budgets that were impossible to meet sequentially, compelling it to delegate tasks.

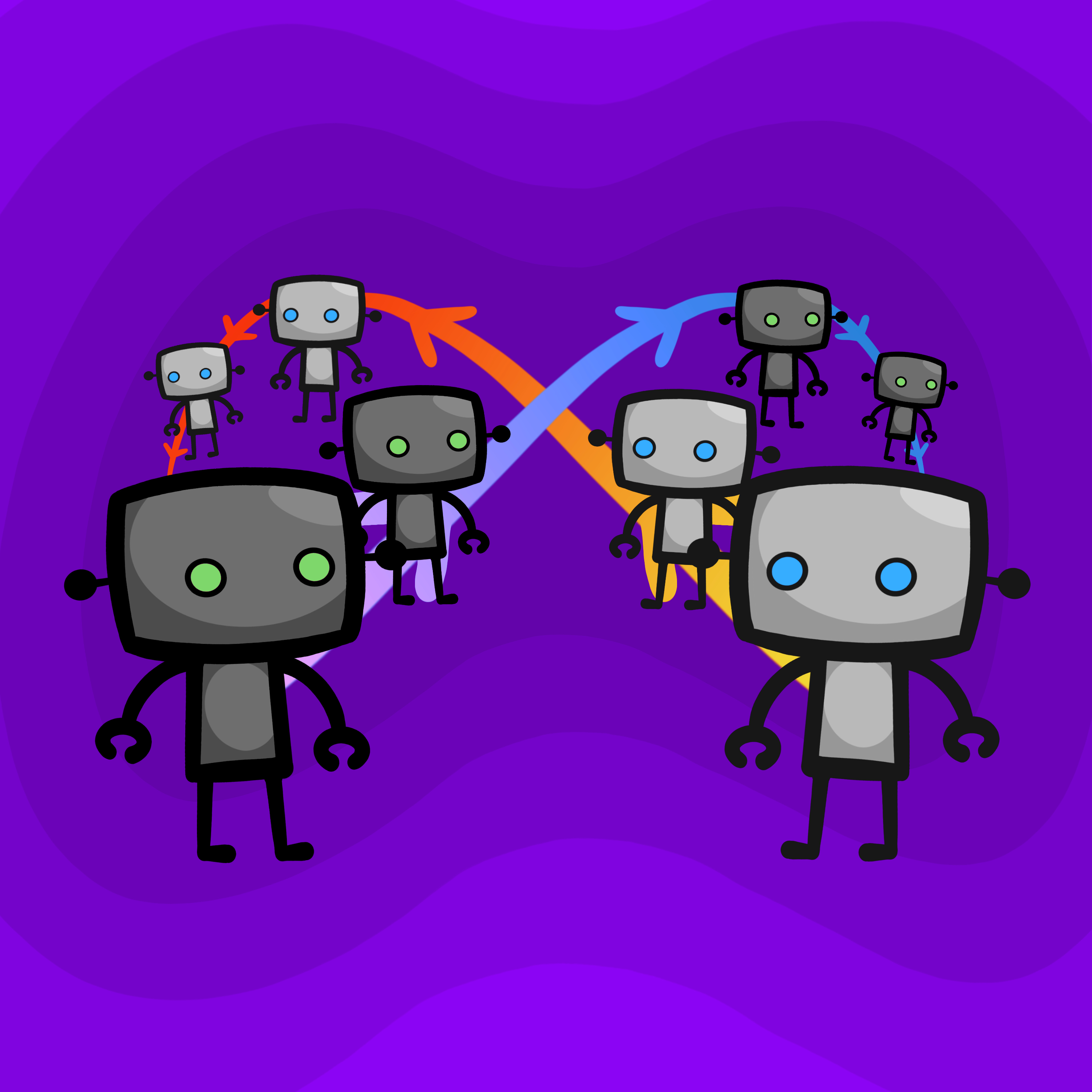

Softmax's technical approach involves training AIs in complex multi-agent simulations to learn cooperation, competition, and theory of mind. The goal is to build a foundational, generalizable model of sociality, which acts as a 'surrogate model for alignment' before fine-tuning for specific tasks.

An experiment showed that given a fixed compute budget, training a population of 16 agents produced a top performer that beat a single agent trained with the entire budget. This suggests that the co-evolution and diversity of strategies in a multi-agent setup can be more effective than raw computational power alone.

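The "epsilon-grounded" simulation approach has a hidden cost: its expected runtime is inversely proportional to epsilon. Each nested simulation stops with probability epsilon, so the chain runs for about 1/epsilon levels on average. Termination is guaranteed for any epsilon above zero, but agents that want a small epsilon, so that they rarely cooperate unconditionally, must accept potentially very long computation times, creating a direct trade-off between speed and reliability.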
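The inverse relationship can be made concrete with a short calculation. It assumes, as a simplification, that each nested simulation independently grounds out with probability $\epsilon$ and that every level costs roughly the same amount of compute. The symbol $N$ (the number of agent invocations until the chain stops) is introduced here for illustration.

$$
\Pr(N = k) = (1-\epsilon)^{k-1}\,\epsilon, \qquad
\mathbb{E}[N] = \sum_{k=1}^{\infty} k\,(1-\epsilon)^{k-1}\,\epsilon = \frac{1}{\epsilon}, \qquad
\Pr(N > k) = (1-\epsilon)^{k}.
$$

Under these assumptions, $\epsilon = 0.1$ gives 10 invocations on average, while $\epsilon = 0.001$ gives 1,000. The chain terminates with probability 1 for any $\epsilon > 0$, but the tail $(1-\epsilon)^{k}$ shrinks slowly when $\epsilon$ is small.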

A simple way for AIs to cooperate is to simulate each other and copy the action. However, this creates an infinite loop if both do it. The fix is to introduce a small probability (epsilon) of cooperating unconditionally, which guarantees the simulation chain eventually terminates.
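A minimal sketch of this idea for two players follows. It assumes each agent is a function that receives its opponent as an argument, so that it can simulate it. The names `epsilon_grounded_bot` and `defect_bot` are illustrative, not taken from the source.

```python
import random

COOPERATE, DEFECT = "C", "D"


def epsilon_grounded_bot(opponent, epsilon, rng):
    """With probability epsilon cooperate outright; otherwise copy the simulated opponent."""
    if rng.random() < epsilon:
        return COOPERATE  # grounding step: ends the chain of simulations
    # Simulate the opponent playing against this bot and copy its action.
    return opponent(epsilon_grounded_bot, epsilon, rng)


def defect_bot(opponent, epsilon, rng):
    """Baseline opponent that always defects and never simulates anyone."""
    return DEFECT


rng = random.Random(0)
# Two copies facing each other: the recursion ends at a grounding step,
# so the copied action is always cooperation.
print(epsilon_grounded_bot(epsilon_grounded_bot, 0.2, rng))
# Against a defector the bot usually copies the defection,
# cooperating only when the epsilon branch fires.
print([epsilon_grounded_bot(defect_bot, 0.2, rng) for _ in range(10)])
```

Without the `rng.random() < epsilon` check, two copies of the bot would call each other forever. With it, the depth of the call chain is random but finite with probability 1.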

The stochastic, randomly generated nature of the game 'Hades' provided a mental model for designing Replit's AI agents. Because AI is also probabilistic and each 'run' can be different, the team adopted gaming terminology and concepts to build for this unpredictability.