AI's key advantage isn't superior intelligence but the ability to enumerate a vast number of hypotheses by brute force and then rapidly filter them against existing literature and data. This systematic, high-volume approach uncovers novel insights that intuition-driven human processes might miss.

Related Insights

Wet lab experiments are slow and expensive, forcing scientists to pursue safer, incremental hypotheses. AI models can computationally test riskier, 'home run' ideas before committing lab resources. This de-risking makes scientists less hesitant to explore breakthrough concepts that could accelerate the field.

Generating truly novel and valid scientific hypotheses requires a specialized, multi-stage AI process. This involves using a reasoning model for idea generation, a literature-grounded model for validation, and a third system for checking originality against existing research. This layered approach overcomes the limitations of a single, general-purpose LLM.
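The layered process described above can be sketched as a small pipeline. This is only an illustrative outline, not the system the insight refers to: the three stages are passed in as plain callables, and the names `run_pipeline`, `toy_generate`, `SUPPORT`, and `NOVELTY`, the score ranges, and the 0.5 thresholds are all assumptions made for the example. In practice each stage would wrap a separate model or retrieval system.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class Hypothesis:
    text: str
    support: float = 0.0  # assumed literature-grounded plausibility in [0, 1]
    novelty: float = 0.0  # assumed originality score in [0, 1]


def run_pipeline(
    generate: Callable[[], Iterable[str]],
    validate: Callable[[str], float],
    check_novelty: Callable[[str], float],
    min_support: float = 0.5,
    min_novelty: float = 0.5,
) -> List[Hypothesis]:
    """Run the three stages in order and return the surviving hypotheses."""
    survivors = []
    for text in generate():                    # stage 1: idea generation
        h = Hypothesis(text)
        h.support = validate(text)             # stage 2: literature-grounded check
        if h.support < min_support:
            continue
        h.novelty = check_novelty(text)        # stage 3: originality check
        if h.novelty < min_novelty:
            continue
        survivors.append(h)
    # Rank what is left by a simple combined score (an arbitrary choice here).
    return sorted(survivors, key=lambda h: h.support * h.novelty, reverse=True)


# Toy stand-ins for the three models; the statements and scores are made up.
def toy_generate():
    return ["A inhibits B", "C activates D", "E binds F"]


SUPPORT = {"A inhibits B": 0.9, "C activates D": 0.2, "E binds F": 0.8}
NOVELTY = {"A inhibits B": 0.7, "C activates D": 0.9, "E binds F": 0.1}

if __name__ == "__main__":
    for h in run_pipeline(toy_generate, SUPPORT.get, NOVELTY.get):
        print(h.text, h.support, h.novelty)
```

With these toy scores only "A inhibits B" survives: one candidate fails the literature check and another fails the originality check, which is the kind of filtering a single general-purpose model would not apply on its own.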

In high-stakes fields like pharma, AI's ability to generate more ideas (e.g., drug targets) is less valuable than its ability to aid in decision-making. Physical constraints on experimentation mean you can't test everything. The real need is for tools that help humans evaluate, prioritize, and gain conviction on a few key bets.

The "bitter lesson" in AI research posits that methods leveraging massive computation scale better and ultimately win out over approaches that rely on human-designed domain knowledge or clever shortcuts. In the long run, scale beats hand-crafted ingenuity.

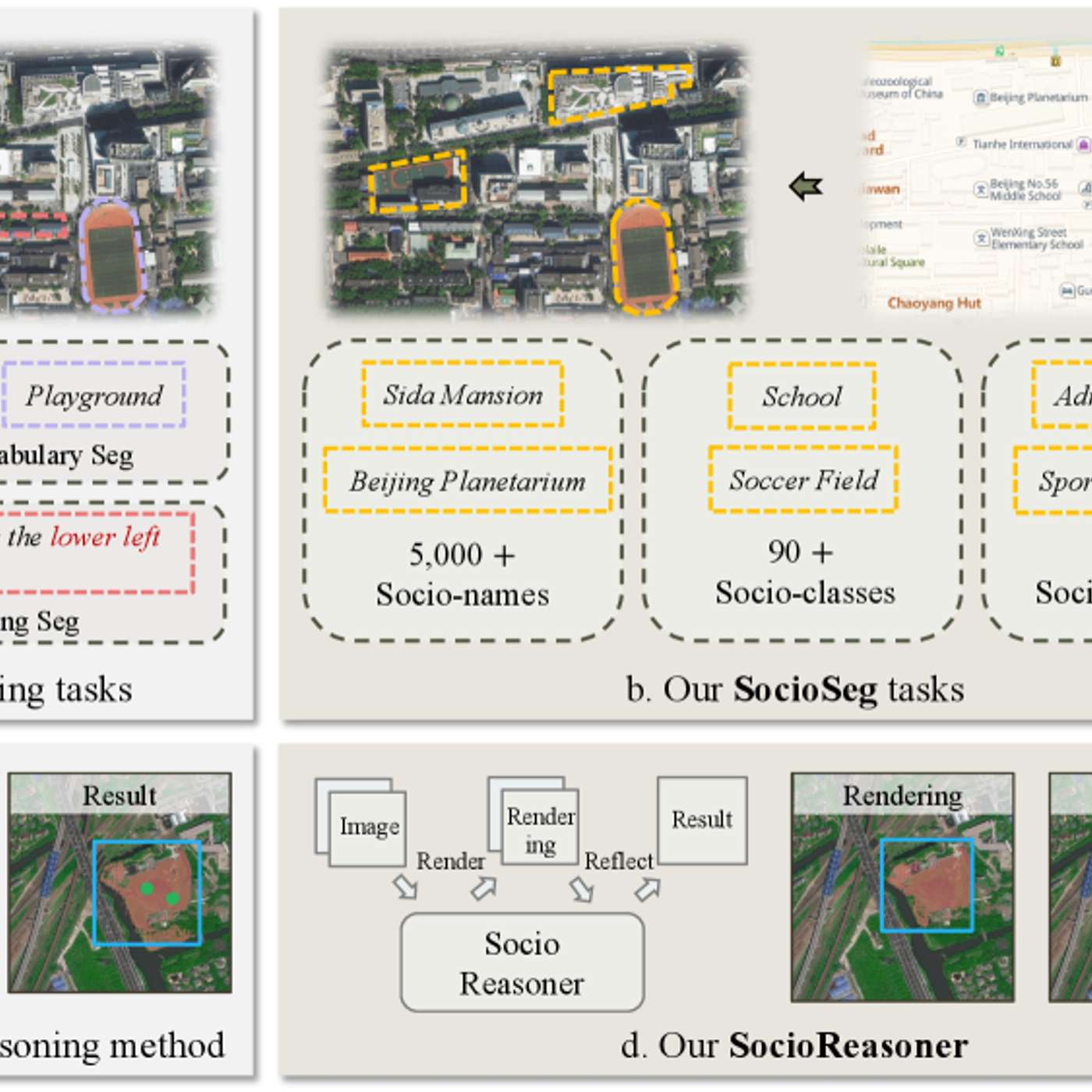

The featured AI model succeeds by reframing urban analysis as a reasoning problem. It uses a two-stage process, first generating broad hypotheses and then refining them with detailed evidence, which mimics human cognition and outperforms traditional single-pass pattern recognition systems.

AlphaFold's success in identifying a key protein for human fertilization (out of 2,000 possibilities) shows AI acting as a hypothesis generator: it dramatically reduces the search space for expensive and time-consuming real-world experiments.

AI's primary value in early-stage drug discovery is not eliminating experimental validation, but drastically compressing the ideation-to-testing cycle. It reduces the in-silico (computer-based) validation of ideas from a multi-month process to a matter of days, massively accelerating the pace of research.

AI can generate hundreds of statistically novel ideas in seconds, but they lack context and feasibility. The bottleneck isn't a lack of ideas, but a lack of *good* ideas. Humans excel at filtering this volume through the lens of experience and strategic value, steering raw output toward a genuinely useful solution.

In an experiment testing AI-generated hypotheses for macular degeneration, the hypothesis that succeeded in lab tests was not the one ranked highest by ophthalmologists. This suggests that expert intuition is a less reliable predictor of success than systematic, AI-driven exploration and verification.

The ultimate goal isn't just modeling specific systems (like protein folding), but automating the entire scientific method. This involves AI generating hypotheses, choosing experiments, analyzing results, and updating a 'world model' of a domain, creating a continuous loop of discovery.
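The loop described above (hypothesize, choose an experiment, analyze the result, update the world model) can be illustrated with a deliberately tiny toy. Everything here is an assumption made for the example: the "world model" is just a set of candidate values for one hidden threshold, and `discovery_loop` and `run_experiment` are hypothetical names. A real system would have a far richer model and noisy, costly experiments.

```python
from typing import Callable, Set


def discovery_loop(
    candidates: Set[int],
    run_experiment: Callable[[int], bool],
    max_rounds: int = 20,
) -> Set[int]:
    """Narrow a set of candidate hypotheses by repeated experiments.

    run_experiment(x) is assumed to return True when the hidden
    threshold is less than or equal to x.
    """
    world_model = set(candidates)  # hypotheses still considered possible
    for _ in range(max_rounds):
        if len(world_model) <= 1:
            break
        # Choose an experiment: probe near the middle so that either
        # outcome rules out a large share of the remaining hypotheses.
        ordered = sorted(world_model)
        probe = ordered[(len(ordered) - 1) // 2]
        # Run it and analyze the result.
        outcome = run_experiment(probe)
        # Update the world model with what the result rules out.
        if outcome:
            world_model = {c for c in world_model if c <= probe}
        else:
            world_model = {c for c in world_model if c > probe}
    return world_model


if __name__ == "__main__":
    hidden_threshold = 7  # unknown to the loop; only experiments reveal it
    remaining = discovery_loop(set(range(16)), lambda x: hidden_threshold <= x)
    print(remaining)
```

The point of the sketch is the shape of the loop rather than the search strategy: each round picks the experiment expected to be most informative given the current model, and the model is updated before the next choice is made.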