The ability of a single encoder to excel at both understanding and generating images indicates these two tasks are not as distinct as they seem. It suggests they rely on a shared, fundamental structure of visual information that can be captured in one unified representation.

Related Insights

Historically, computer vision treated 3D reconstruction (capturing reality) and generation (creating content) as separate fields. New techniques such as Neural Radiance Fields (NeRFs) are merging them into a unified approach in which models can move seamlessly between perceiving and imagining 3D spaces. This represents a major paradigm shift.

To move beyond keyword search in their media archive, Tim McLear's system generates two vector embeddings for each asset: one from the image thumbnail and another from its AI-generated text description. Fusing these enables a powerful semantic search that understands visual similarity and conceptual relationships, not just exact text matches.
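The source does not say how the two embeddings are fused. Below is a minimal sketch that rests on two assumptions. First, both encoders map into one shared embedding space (as CLIP-style models do), so a text query can be compared directly against either vector. Second, the fusion step is a simple weighted average of the unit-normalized vectors. The vectors are toy numbers, not output from any real model, and `fuse`, `search`, and `image_weight` are hypothetical names.

```python
import math


def normalize(vec):
    """Scale a vector to unit length so cosine similarity reduces to a dot product."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)


def fuse(image_vec, text_vec, image_weight=0.5):
    """Hypothetical fusion: weighted average of the unit-normalized image and text embeddings."""
    img, txt = normalize(image_vec), normalize(text_vec)
    fused = [image_weight * a + (1 - image_weight) * b for a, b in zip(img, txt)]
    return normalize(fused)


def cosine(a, b):
    """Cosine similarity between two vectors of equal length."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def search(query_vec, index, top_k=3):
    """Rank assets by cosine similarity between the query and each fused embedding."""
    scored = [(asset_id, cosine(query_vec, vec)) for asset_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy index: each asset stores one fused vector built from (thumbnail, description) embeddings.
index = {
    "sunset_beach.jpg": fuse([0.9, 0.1, 0.0], [0.8, 0.2, 0.1]),
    "city_night.jpg": fuse([0.1, 0.9, 0.2], [0.0, 0.8, 0.3]),
}
query = [0.85, 0.15, 0.05]  # stand-in for the embedding of a query like "beach at dusk"
print(search(query, index, top_k=1))  # → sunset_beach.jpg ranks first
```

Storing one fused vector per asset keeps the index simple. Raising or lowering `image_weight` shifts results toward visual or descriptive similarity.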

Anthropic strategically focuses on "vision in" (AI understanding visual information) over "vision out" (image generation). This mirrors how a real developer works: they need to interpret a user interface to fix it, but can delegate image creation to other tools or people. The core bet is that the primary bottleneck is reasoning, not media generation.

The future of creative AI is moving beyond simple text-to-X prompts. Labs are working to merge text, image, and video models into a single "mega-model" that can accept any combination of inputs (e.g., a video plus text) to generate a complex, edited output, unlocking new paradigms for design.

Current multimodal models shoehorn visual data into a 1D text-based sequence. True spatial intelligence is different. It requires a native 3D/4D representation to understand a world governed by physics, not just human-generated language. This is a foundational architectural shift, not an extension of LLMs.
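The sketch below shows what "shoehorning into a 1D sequence" can look like in practice. It uses ViT-style patch tokenization, one common way multimodal models turn an image into a token sequence. This approach is an assumption for illustration, since the source names no specific model. `patchify` is a hypothetical helper that operates on nested Python lists.

```python
def patchify(image, patch_size):
    """Flatten an H x W x C image (nested lists) into a 1D sequence of patch tokens.

    Patches are read in raster order (left to right, top to bottom); each token
    is the flattened pixel values of one patch_size x patch_size block.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image height and width must be divisible by patch_size")
    tokens = []
    for top in range(0, height, patch_size):  # patch rows, top to bottom
        for left in range(0, width, patch_size):  # patch columns, left to right
            token = []
            for row in range(top, top + patch_size):
                for col in range(left, left + patch_size):
                    token.extend(image[row][col])  # append this pixel's channel values
            tokens.append(token)
    return tokens


# 4x4 single-channel toy image whose pixel value encodes its position: row * 4 + col.
image = [[[row * 4 + col] for col in range(4)] for row in range(4)]
tokens = patchify(image, patch_size=2)
print(tokens)  # → [[0, 1, 4, 5], [2, 3, 6, 7], [8, 9, 12, 13], [10, 11, 14, 15]]
```

Patches 0 and 2 are vertical neighbors in the image, yet they sit two positions apart in the sequence. The 2D layout survives only through whatever positional information the model adds, and nothing in this flattening encodes depth, time, or physics.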

While GenAI continues the "learn by example" paradigm of machine learning, its ability to create novel content such as images and language is a fundamental step change. It moves beyond predicting patterns to generating entirely new outputs, a significant evolution in computing.

While SAM3 can act as a "tool" for LLMs, researchers argue that fundamental vision tasks like counting fingers should be a native, immediate capability of a frontier model, akin to human System 1 thinking. Relying on tool calls for simple perception indicates a critical missing capability in the core model.

When analyzing video, recent generative models can create original images that illustrate a described scene rather than simply pulling a screenshot from the footage. This lets AI generate its own "B-roll" or conceptual art that captures the essence of the source material.

Contrary to common perception shaped by their use in language, Transformers are not inherently sequential. Their core architecture operates on sets of tokens, with sequence information only injected via positional embeddings. This makes them powerful for non-sequential data like 3D objects or other unordered collections.
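Here is a minimal sketch of this claim in plain Python. It uses single-head scaled dot-product self-attention with identity query/key/value projections. That is a simplification: real layers learn these matrices, but the property holds for any per-token projection. Shuffling the input tokens only shuffles the outputs, so the layer effectively sees a set. Adding a positional signal breaks that symmetry. `add_positions` is a toy, hypothetical stand-in for sinusoidal or learned positional embeddings.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Single-head scaled dot-product self-attention.

    Simplification: queries, keys, and values are the tokens themselves
    (identity projections). Nothing here depends on token order.
    """
    dim = len(tokens[0])
    outputs = []
    for query in tokens:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in tokens]
        weights = softmax(scores)
        outputs.append([sum(w * value[i] for w, value in zip(weights, tokens)) for i in range(dim)])
    return outputs


def add_positions(tokens):
    """Toy positional signal (hypothetical): add each token's index to all of its features."""
    return [[x + pos for x in token] for pos, token in enumerate(tokens)]


def is_permuted_copy(shuffled_out, original_out, perm):
    """True if shuffled_out[i] matches original_out[perm[i]] for every i."""
    return all(
        math.isclose(a, b, rel_tol=1e-9, abs_tol=1e-12)
        for i, j in enumerate(perm)
        for a, b in zip(shuffled_out[i], original_out[j])
    )


tokens = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
perm = [2, 0, 1]
shuffled = [tokens[i] for i in perm]

# Without positions: permuting the inputs just permutes the outputs (set behavior).
without_positions = is_permuted_copy(self_attention(shuffled), self_attention(tokens), perm)

# With positions: the same tokens in a different order now produce different outputs.
with_positions = is_permuted_copy(
    self_attention(add_positions(shuffled)), self_attention(add_positions(tokens)), perm
)

print(without_positions, with_positions)  # → True False
```

Because order enters only through the positional signal, the same layer can process unordered inputs, such as points sampled from a 3D object, simply by omitting or replacing that signal.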

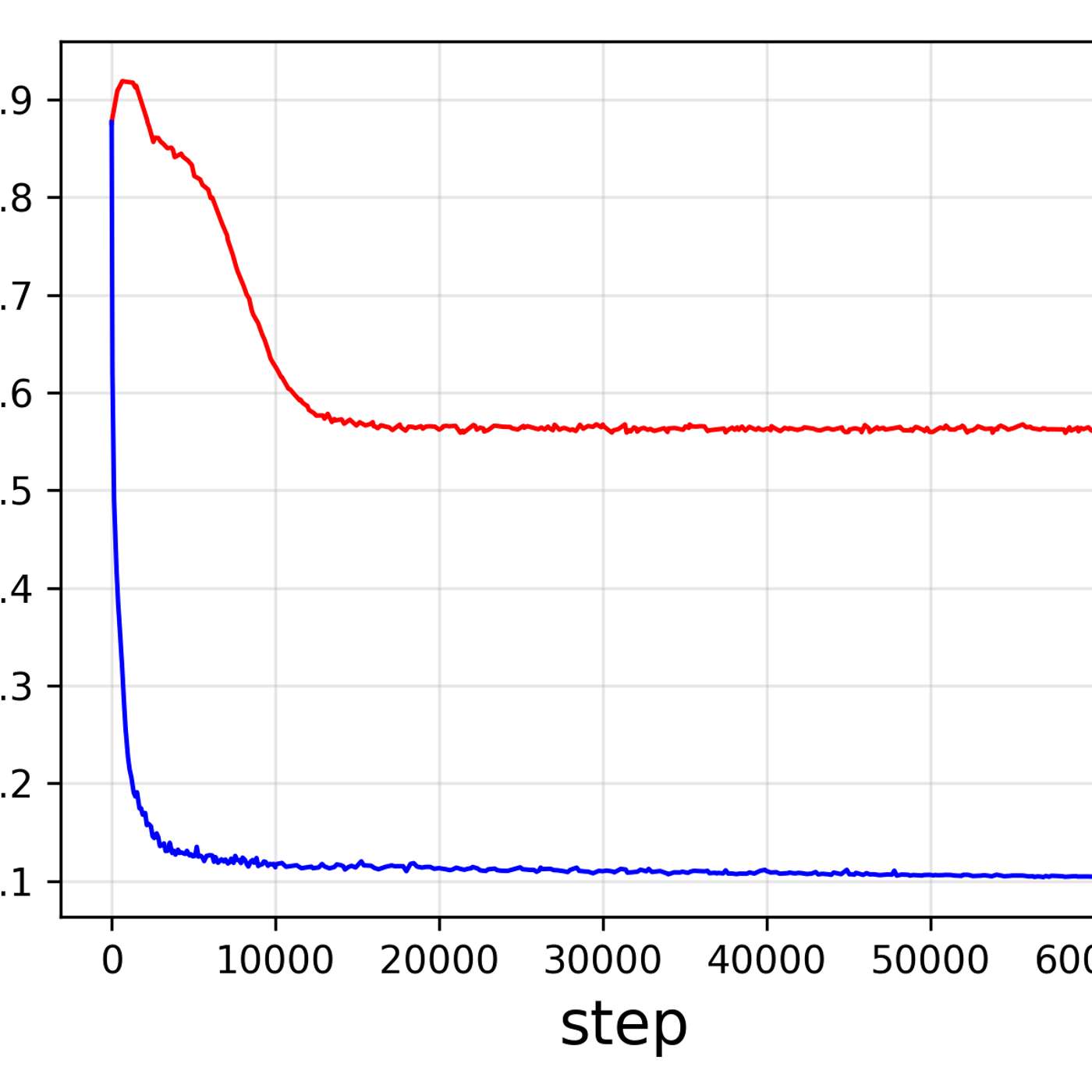

Adopting a single, unified architecture for both vision and generation tasks simplifies the engineering lifecycle. This approach reduces the cost and complexity of maintaining, updating, and deploying multiple specialized models, accelerating development.