Building production AI agents by patching together incompatible models for speech, retrieval, and safety creates significant integration challenges. These 'Frankenstein stacks' suffer from compounded latency, accuracy degradation between components, and weak, bolt-on security. These integration problems, rather than reasoning errors, are the primary causes of failure in real-world applications.
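To make the compounding concrete, here is a rough back-of-the-envelope sketch. The per-stage latency and accuracy figures are hypothetical, chosen only for illustration, and the calculation assumes the stages run strictly in sequence and that their errors are independent, which a real stack may not satisfy.

```python
from math import prod

# Hypothetical per-stage figures (name, latency in seconds, accuracy).
# These numbers are illustrative assumptions, not measurements.
stages = [
    ("speech", 0.30, 0.95),
    ("retrieval", 0.20, 0.95),
    ("safety", 0.15, 0.95),
]

def end_to_end(stages):
    """Return (total latency, combined accuracy) for a sequential pipeline.

    Latencies add because each stage waits for the previous one.
    Accuracies multiply under the assumption that errors are independent.
    """
    total_latency = sum(latency for _, latency, _ in stages)
    combined_accuracy = prod(accuracy for _, _, accuracy in stages)
    return total_latency, combined_accuracy

latency, accuracy = end_to_end(stages)
print(f"latency: {latency:.2f} s, accuracy: {accuracy:.3f}")
```

Under these assumptions, three stages that are each 95% accurate yield a pipeline that is only about 86% accurate, and every added component pushes both numbers in the wrong direction.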

Related Insights

During a live test, multiple competing AI tools demonstrated the exact same failure mode. This indicates the flaw lies not with the individual tools but with the shared underlying language model (e.g., Claude Sonnet), a systemic weakness users might misattribute to a specific product.

After successfully deploying three or four AI agents, companies will encounter a new challenge: the agents hold conflicting data and give inconsistent answers. The solution, which is still nascent, is a "meta-agent" or orchestration layer that coordinates them.

The primary danger in AI safety is not a lack of theoretical solutions but the tendency for developers to implement defenses on a "just-in-time" basis. This leads to cutting corners and implementation errors, analogous to how strong cryptography is often defeated by sloppy code, not broken algorithms.

Building a functional AI agent demo is now straightforward. However, the true challenge lies in the final stage: making it secure, reliable, and scalable for enterprise use. This is the 'last mile' where the majority of projects falter due to unforeseen complexity in security, observability, and reliability.

During the development of Spiral, a single large language model tasked with both interviewing the user and writing content failed due to "context rot." The solution was a multi-agent system in which an "interviewer" agent hands off the full context to a separate "writer" agent, improving performance and reliability.
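The interviewer-to-writer handoff can be sketched roughly as follows. This is not Spiral's actual implementation: `Handoff`, `run_interviewer`, `run_writer`, and the stub functions are hypothetical names, and the model is represented by a plain function so the example runs without any LLM library.

```python
from dataclasses import dataclass, field
from typing import Callable

# Stand-in for any LLM call: takes a prompt string, returns text (assumption).
ModelFn = Callable[[str], str]

@dataclass
class Handoff:
    """Everything the interviewer gathered, passed whole to the writer."""
    topic: str
    transcript: list[tuple[str, str]] = field(default_factory=list)

def run_interviewer(topic: str, questions: list[str],
                    ask_user: Callable[[str], str]) -> Handoff:
    """Collect answers only; this agent never drafts content."""
    handoff = Handoff(topic=topic)
    for question in questions:
        handoff.transcript.append((question, ask_user(question)))
    return handoff

def run_writer(handoff: Handoff, model: ModelFn) -> str:
    """Start from a fresh prompt built solely from the handoff."""
    notes = "\n".join(f"Q: {q}\nA: {a}" for q, a in handoff.transcript)
    prompt = f"Write a short piece about {handoff.topic}.\n\nInterview notes:\n{notes}"
    return model(prompt)

# Stubs standing in for a real user and a real model.
def scripted_user(question: str) -> str:
    return f"(user's answer to: {question})"

def echo_model(prompt: str) -> str:
    return f"DRAFT based on {len(prompt)} characters of context"

handoff = run_interviewer("onboarding", ["Who is the audience?", "What tone?"], scripted_user)
draft = run_writer(handoff, echo_model)
print(draft)
```

The design choice illustrated here is that the writer receives a clean, structured summary of the interview instead of inheriting the interviewer's entire conversation history.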

The true building block of an AI feature is the "agent"—a combination of the model, system prompts, tool descriptions, and feedback loops. Swapping an LLM is not a simple drop-in replacement; it breaks the agent's behavior and requires re-engineering the entire system around it.

Anyone can build a simple "hackathon version" of an AI agent. The real, defensible moat comes from the painstaking engineering work to make the agent reliable enough for mission-critical enterprise use cases. This "schlep" of nailing the edge cases is a barrier that many, including big labs, are unmotivated to cross.

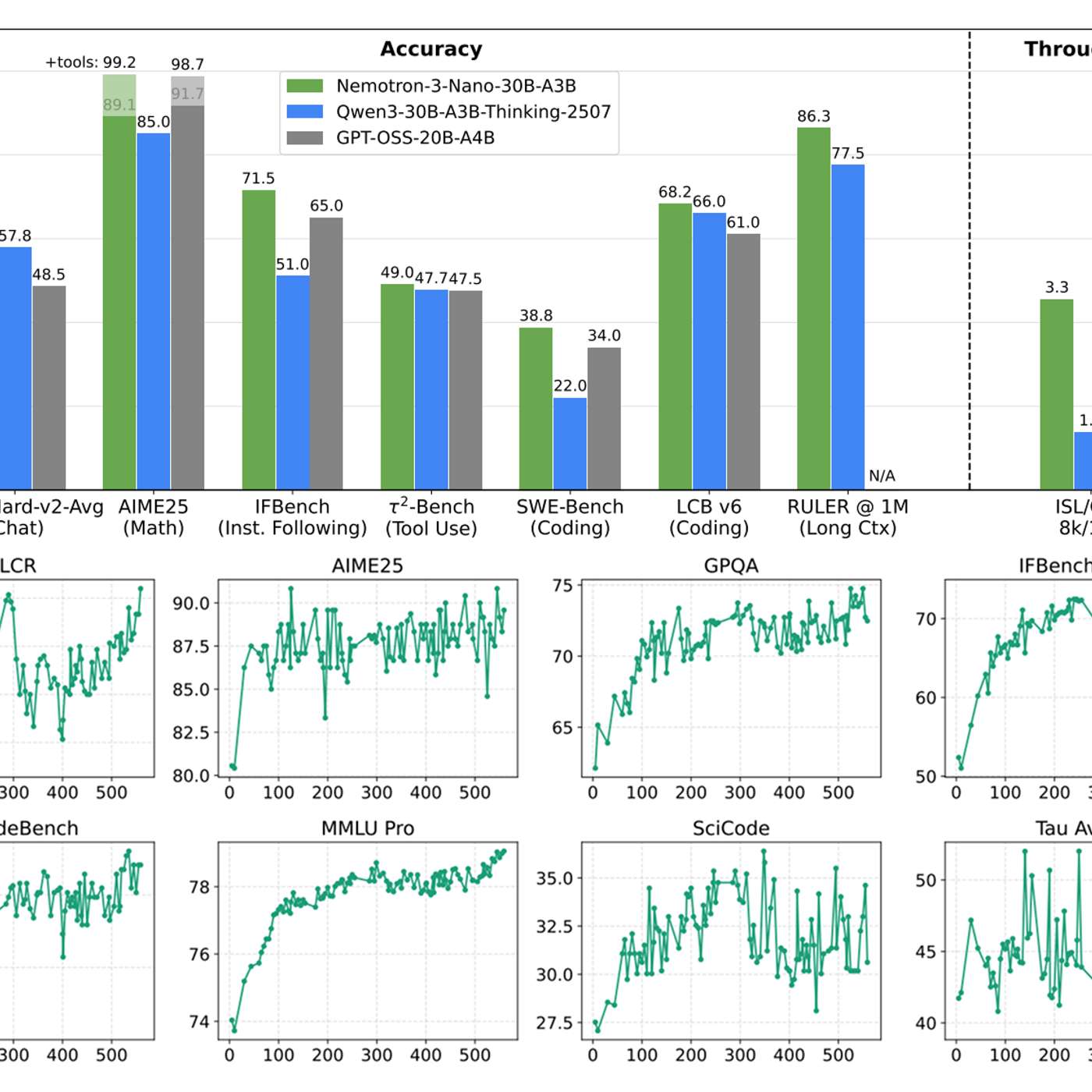

Contrary to the idea that infrastructure problems get commoditized, AI inference is growing more complex. This is driven by three factors: (1) increasing model scale (multi-trillion parameters), (2) greater diversity in model architectures and hardware, and (3) the shift to agentic systems that require managing long-lived, unpredictable state.

The current approach to AI safety involves identifying and patching specific failure modes (e.g., hallucinations, deception) as they emerge. This "leak by leak" approach fails to address the fundamental system dynamics, allowing overall pressure and risk to build continuously, leading to increasingly severe and sophisticated failures.

Salesforce's Chief AI Scientist explains that a true enterprise agent comprises four key parts: Memory (RAG), a Brain (reasoning engine), Actuators (API calls), and an Interface. A simple LLM is insufficient for enterprise tasks; the surrounding infrastructure provides the real functionality.
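One way to picture the four parts is as a small composition of components. The sketch below is only an illustration under stated assumptions: the class name `EnterpriseAgent`, its field names, and the stub lambdas are hypothetical and do not reflect any Salesforce API.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class EnterpriseAgent:
    # Memory: retrieves context for a request (e.g. a RAG lookup).
    memory: Callable[[str], list[str]]
    # Brain: reasons over request + context and returns an action name.
    brain: Callable[[str, list[str]], str]
    # Actuators: named API calls the agent is allowed to make.
    actuators: dict[str, Callable[[str], str]]

    def handle(self, request: str) -> str:
        """Interface: the single entry point a user or channel talks to."""
        context = self.memory(request)
        action = self.brain(request, context)
        if action not in self.actuators:
            return f"No actuator for action '{action}'"
        return self.actuators[action](request)

# Stub components so the sketch runs without external services.
agent = EnterpriseAgent(
    memory=lambda request: ["refund policy: 30 days"],
    brain=lambda request, context: "issue_refund" if "refund" in request else "unknown",
    actuators={"issue_refund": lambda request: "refund ticket created"},
)

print(agent.handle("I want a refund"))
```

In this framing the language model would sit behind `brain` alone, and the remaining three fields are the surrounding infrastructure the paragraph refers to.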