Claude's proficiency in writing is not accidental. Its developer, Anthropic, counts Amazon among its major investors, and Amazon founder Jeff Bezos owns The Washington Post. The argument here is that Claude's training drew on high-quality journalistic and literary sources, and that this strategic use of superior training data gives it a distinct advantage in crafting persuasive prose.

Related Insights

Despite the popularity of ChatGPT and Gemini, Claude is the superior tool for marketing and copy-related tasks. Its strength in persuasive writing makes it the go-to for creating scripts, emails, and sales pages that drive action.

When AI pioneers like Geoffrey Hinton see agency in an LLM, they are misinterpreting the output. What they are actually witnessing is a compressed, probabilistic reflection of the immense creativity and knowledge from all the humans who created its training data. It's an echo, not a mind.

Using adjectives like 'elite' (e.g., 'You are an elite photographer') isn't about flattery. It's a keyword that signals to the AI to operate within the higher-quality, expert-level subset of its training data, which is associated with those words, leading to better-quality output.

Earlier AI models would praise any writing given to them. A breakthrough occurred when the Spiral team found that Claude Opus 4 could reliably judge writing quality, including the quality of its own output. This capability makes it possible to build AI products with built-in feedback loops that improve their drafts and develop taste.
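A feedback loop of this kind can be sketched in a few lines. The following is a minimal illustration, not the Spiral team's implementation: `refine`, the 1-10 score scale, the threshold, and the three toy functions are all assumptions made for the example. In a real product, `write`, `judge`, and `revise` would each wrap a call to a language model.

```python
from typing import Callable, List, Tuple

# Hypothetical convention for this sketch: a judgement is (score from 1 to 10, feedback text).
Judgement = Tuple[int, str]


def refine(
    brief: str,
    write: Callable[[str], str],
    judge: Callable[[str], Judgement],
    revise: Callable[[str, str], str],
    threshold: int = 8,
    max_rounds: int = 3,
) -> Tuple[str, List[int]]:
    """Draft, judge, and revise until the score meets the threshold or rounds run out.

    Returns the best-scoring draft seen and the list of scores, one per judged draft.
    """
    draft = write(brief)
    best_draft, best_score = draft, -1
    scores: List[int] = []
    for round_no in range(max_rounds):
        score, feedback = judge(draft)
        scores.append(score)
        if score > best_score:
            best_draft, best_score = draft, score
        # Stop when the draft is good enough or no rounds remain.
        if score >= threshold or round_no == max_rounds - 1:
            break
        draft = revise(draft, feedback)
    return best_draft, scores


# Toy stand-ins so the sketch runs without any model; they are placeholders only.
def toy_write(brief: str) -> str:
    return "Buy our app."


def toy_judge(draft: str) -> Judgement:
    # Crude proxy for quality: one point per word, capped at 10.
    return min(10, len(draft.split())), "Add a concrete benefit."


def toy_revise(draft: str, feedback: str) -> str:
    return draft + " It saves you an hour a day."
```

The loop only works if the judge is discriminating. A judge that praises everything, as the earlier models did, would pass the first draft every time and the revision step would never run.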

When an AI expresses a negative view of humanity, it's not generating a novel opinion. It is reflecting the concepts and correlations it internalized from its training data—vast quantities of human text from the internet. The model learns that concepts like 'cheating' are associated with a broader 'badness' in human literature.

Unlike US firms performing massive web scrapes, European AI projects are constrained by the AI Act and authorship rights. This forces them to prioritize curated, "organic" datasets from sources like libraries and publishers. This difficult curation process becomes a competitive advantage, leading to higher-quality linguistic models.

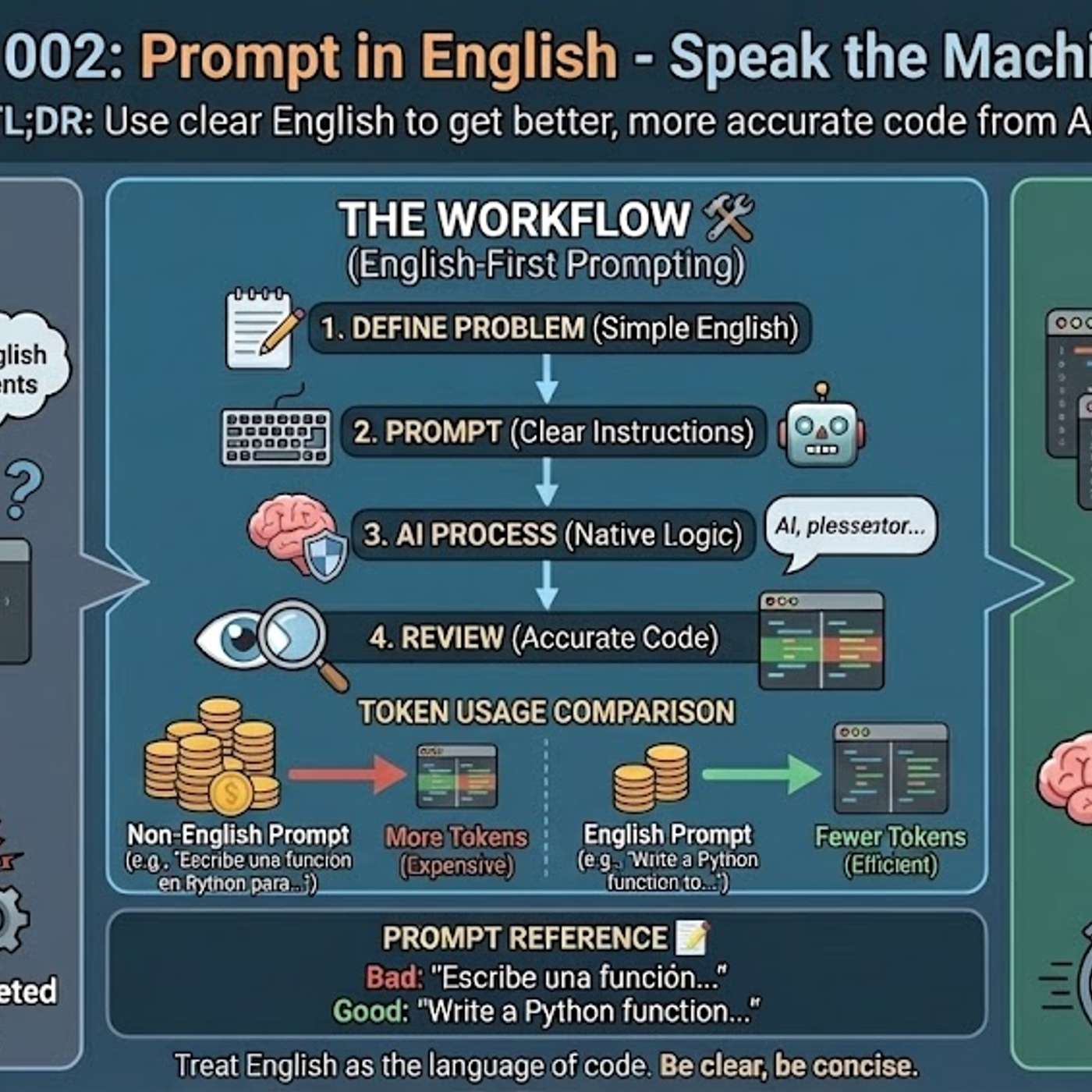

The primary reason AI models generate better code from English prompts is their training data composition. English dominates AI training sets (by this estimate, over 90%), along with most technical libraries and documentation. As a result, the models tend to handle code-related tasks best when prompted in English.

The best AI models are trained on data that reflects deep, subjective qualities—not just simple criteria. This "taste" is a key differentiator, influencing everything from code generation to creative writing, and is shaped by the values of the frontier lab.

Anthropic maintains a competitive edge by physically acquiring and digitizing thousands of old books, creating a massive, proprietary dataset of high-quality text. This multi-year effort to build a unique data library is difficult to replicate and may contribute to the distinct quality of its Claude models.

For superior AI-generated content, create a persistent knowledge base for the model using features like Claude's "Projects." Uploading actual sales call transcripts and customer interviews does not retrain the model. It gives the model your customers' own voice and pain points as context, resulting in more authentic and targeted marketing copy.
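When working with a model programmatically rather than through a feature like Projects, the same idea can be approximated by placing the source material in the prompt. The sketch below is a hand-rolled analogue under stated assumptions, not a description of how Projects works internally: `build_context`, `build_prompt`, the `<document>` wrapper, and the character budget are all hypothetical choices made for illustration.

```python
from typing import Dict


def build_context(documents: Dict[str, str], max_chars: int = 20000) -> str:
    """Wrap each document in a labelled block, stopping before the budget is exceeded."""
    parts = []
    used = 0
    for name, text in documents.items():
        block = f'<document name="{name}">\n{text.strip()}\n</document>'
        if used + len(block) > max_chars:
            break  # Assumed policy: drop whatever does not fit rather than truncate mid-document.
        parts.append(block)
        used += len(block)
    return "\n\n".join(parts)


def build_prompt(documents: Dict[str, str], task: str) -> str:
    """Combine the knowledge base and the task into one prompt string."""
    context = build_context(documents)
    return (
        f"{context}\n\n"
        "Use the customers' own wording from the documents above where possible.\n"
        f"Task: {task}"
    )
```

For example, `build_prompt({"call-01.txt": "We lose hours on invoicing."}, "Write a subject line.")` returns a prompt that carries the transcript verbatim, so the model can echo the customer's phrasing rather than guess at it.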