Free, consumer-grade AI tools lag paid frontier models by more than a year. Basing your understanding of AI's potential on these limited versions leads to a dangerously inaccurate assessment of the technology's trajectory.

Related Insights

AI models are surprisingly strong at certain tasks but bafflingly weak at others. This "jagged frontier" of capability means that experience with AI can be inconsistent. The only way to navigate it is through direct experimentation within one's own domain of expertise.

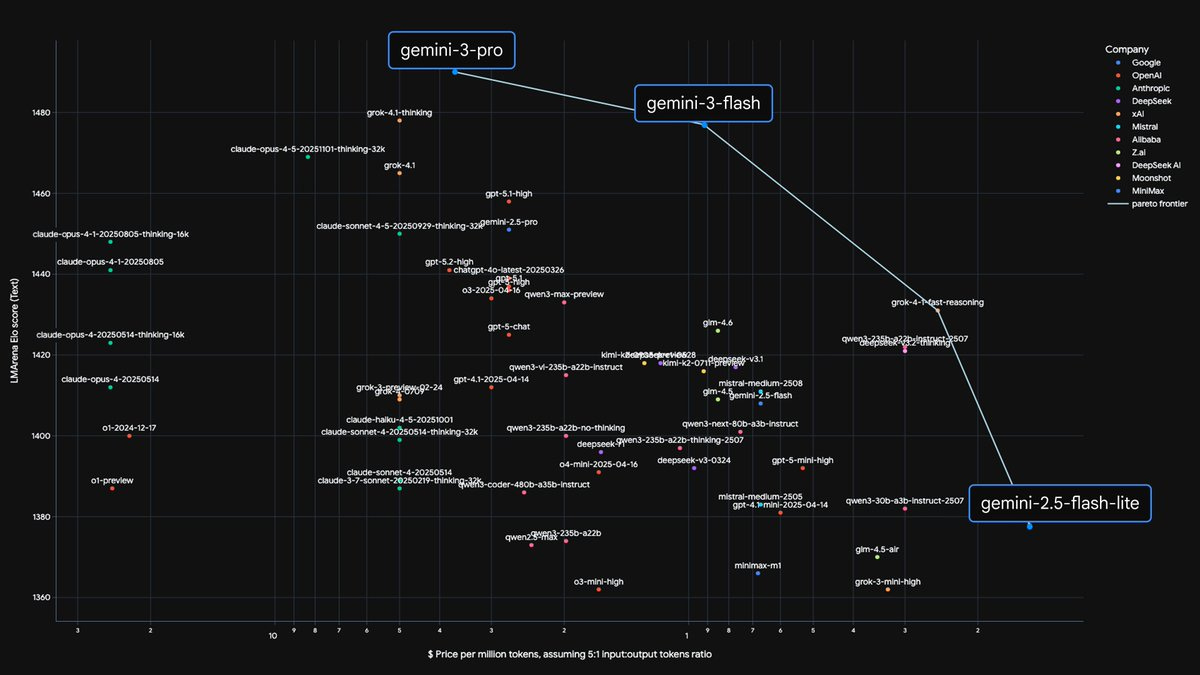

The "Andy Warhol Coke" era, where everyone could access the best AI for a low price, is over. As inference costs for more powerful models rise, companies are introducing expensive tiered access. This will create significant inequality in who can use frontier AI, with implications for transparency and regulation.

Users frequently write off an AI's ability to perform a task after a single failure. However, with models improving dramatically every few months, what was impossible yesterday may be trivial today. This "capability blindness" prevents users from unlocking new value.

When developing AI-powered tools, don't be constrained by current model limitations. Given the exponential improvement curve, design your product for the capabilities you anticipate models will have in six months. That way, your product is positioned to shine when the underlying technology catches up.

Non-tech professionals often judge AI by obsolete limitations like six-fingered images or knowledge cutoffs. They don't realize they already consume sophisticated AI content daily. The result is a significant gap between the technology's actual capabilities and its public reputation.

The public's perception of AI is largely based on free, less powerful versions. This creates a significant misunderstanding of the true capabilities available in top-tier paid models, leading to a dangerous underestimation of the technology's current state and imminent impact.

The perceived plateau in AI model performance is specific to consumer applications, where GPT-4-level reasoning is sufficient. The real future gains are in enterprise and code generation, which still have a massive runway for improvement. Consumer AI needs better integration, not just stronger models.

Judging an AI's capability by its base model alone is misleading. Its effectiveness is significantly amplified by surrounding tooling and frameworks, such as developer environments. A good tool harness can make a decent model outperform a superior model that lacks such support.

The perceived limits of today's AI are not inherent to the models themselves but stem from our failure to build the right "agentic scaffold" around them. The result is a "model capability overhang": much more potential can be unlocked with better prompting, context engineering, and tool integrations.

Don't assume that a "good enough" cheap model will satisfy all future needs. Jeff Dean argues that as AI models become more capable, users' expectations and the complexity of their requests grow in tandem. This creates a perpetual need to push the performance frontier, as today's complex tasks become tomorrow's standard expectations.