For decades, the goal was a 'Semantic Web' in which pages carried structured data for machines. Modern AI models reach the same outcome by another route: they understand human-centric, unstructured web pages well enough to extract meaning without any special formatting. This is a major unlock for web automation.

Related Insights

A new wave of startups, such as Parallel, founded by Twitter's former CEO, is attracting significant investment to build web infrastructure specifically for AI agents. Instead of ranking links for humans, these systems deliver optimized data directly to AI models, signaling a fundamental shift in how the internet will be structured and consumed.

AI browsers introduce 'agents' that automate tasks such as research and form submission. To capture leads from these agents, websites must offer simple, easily parsable forms and navigation, which creates a new dimension of user experience focused on machine readability.
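As a rough illustration of what "easily parsable" can mean, the sketch below uses Python's standard-library `html.parser` to list a form's fillable fields together with the `<label>` linked to each one through `for`/`id`. The sample form, the `describe_form` helper, and the choice to skip hidden and submit inputs are assumptions for this example, not a description of how any particular AI browser reads forms.

```python
from html.parser import HTMLParser


class FormFieldExtractor(HTMLParser):
    """Collects <input>, <select>, and <textarea> fields plus their <label> text."""

    def __init__(self):
        super().__init__()
        self.fields = []        # one dict per fillable control, in document order
        self.labels = {}        # label's "for" attribute -> visible label text
        self._label_for = None  # "for" target of the <label> currently open
        self._label_text = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "label":
            self._label_for = a.get("for")
            self._label_text = []
        elif tag in ("input", "select", "textarea"):
            if tag == "input" and a.get("type") in ("hidden", "submit", "button"):
                return  # nothing for an agent to fill in
            self.fields.append({
                "name": a.get("name"),
                "type": a.get("type", "text") if tag == "input" else tag,
                "id": a.get("id"),
                "required": "required" in a,  # boolean attribute: present or absent
            })

    def handle_data(self, data):
        if self._label_for is not None:
            self._label_text.append(data)

    def handle_endtag(self, tag):
        if tag == "label" and self._label_for is not None:
            self.labels[self._label_for] = "".join(self._label_text).strip()
            self._label_for = None


def describe_form(html: str) -> list[dict]:
    """Return each fillable field with the label linked to it via for/id."""
    parser = FormFieldExtractor()
    parser.feed(html)
    parser.close()
    for field in parser.fields:
        field["label"] = parser.labels.get(field["id"])
    return parser.fields


# Hypothetical lead-capture form used for illustration.
SAMPLE_FORM = """
<form action="/demo" method="post">
  <label for="email">Work email</label>
  <input id="email" name="email" type="email" required>
  <label for="company">Company</label>
  <input id="company" name="company">
  <input type="hidden" name="utm_source" value="agent">
  <button type="submit">Request demo</button>
</form>
"""

if __name__ == "__main__":
    for field in describe_form(SAMPLE_FORM):
        print(field)
```

Fields with an explicit `<label>` and a meaningful `name` come out self-describing; a field with neither would reach the agent as an anonymous text box.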

Most enterprise information, long trapped in PDFs and other documents, was effectively unusable for analysis. AI, through techniques like RAG and automated structure extraction, is unlocking this data for the first time, making it queryable and enabling new large-scale analysis.
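To make the retrieval half of RAG concrete, here is a toy sketch: text already extracted from documents is split into overlapping chunks, each chunk is scored against the question, and the best matches are packed into a prompt. It substitutes simple term-frequency cosine similarity for the learned embeddings production systems typically use, omits the actual model call, and the document names and contents are invented for illustration.

```python
import math
import re
from collections import Counter


def chunk(text: str, size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into overlapping windows of `size` words."""
    words = text.split()
    step = size - overlap
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def vectorize(text: str) -> Counter:
    """Term-frequency vector; a stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    """Return the k (source, chunk) pairs most similar to the query."""
    q = vectorize(query)
    scored = [
        (cosine(q, vectorize(c)), source, c)
        for source, text in documents.items()
        for c in chunk(text)
    ]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(source, c) for _, source, c in scored[:k]]


def build_prompt(query: str, passages: list[tuple[str, str]]) -> str:
    """Pack retrieved chunks, tagged with their source, into a grounded prompt."""
    context = "\n".join(f"[{src}] {c}" for src, c in passages)
    return f"Answer using only the sources below and cite them.\n{context}\n\nQuestion: {query}"


# Hypothetical text previously extracted from enterprise PDFs.
DOCS = {
    "q3_report.pdf": "Revenue in the third quarter grew 12 percent, driven by enterprise renewals in Europe.",
    "hr_policy.pdf": "Employees accrue vacation days monthly and may carry over up to five days.",
    "security_audit.pdf": "The audit found two unpatched servers and recommended quarterly penetration tests.",
}
QUERY = "How many vacation days can employees carry over?"

if __name__ == "__main__":
    print(build_prompt(QUERY, retrieve(QUERY, DOCS, k=1)))
```

The prompt would then go to a language model; keeping the `[source]` tags in the context is what lets the answer cite the original document.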

The long-sought goal of "information at your fingertips," envisioned by Bill Gates, wasn't achieved through structured databases as expected. Instead, large neural networks unexpectedly became the key, capable of finding patterns in messy, unstructured enterprise data where rigid schemas failed.

Unlike screen-reading bots, web agents can leverage HTML's declarative nature. Tags like `<button>` explicitly state the purpose of UI elements, allowing agents to understand and interact with pages more reliably and efficiently. This structural property is a key advantage that has yet to be fully realized.
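A minimal sketch of that advantage, again with the standard-library `html.parser`: it lists elements whose tags (or an explicit `role="button"`) declare them interactive. The `find_actions` helper, the action names, and the sample page are hypothetical, and a real agent would also need to handle scripts, disabled states, and visibility.

```python
from html.parser import HTMLParser


class ActionFinder(HTMLParser):
    """Lists elements whose markup declares them interactive."""

    def __init__(self):
        super().__init__()
        self.actions = []     # (action, label) pairs in document order
        self._pending = None  # (action, tag) of an open <button>, <a href>, or role="button"
        self._text = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag == "input":
            kind = a.get("type", "text")
            if kind == "hidden":
                return
            action = "click" if kind in ("submit", "button") else "fill"
            # <input> is a void element, so its label comes from attributes.
            label = a.get("aria-label") or a.get("value") or a.get("name") or ""
            self.actions.append((action, label))
        elif tag == "button" or a.get("role") == "button":
            self._pending = ("click", tag)
            self._text = []
        elif tag == "a" and "href" in a:
            self._pending = ("navigate", tag)
            self._text = []

    def handle_data(self, data):
        if self._pending is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        # Simplification: the first matching end tag closes the element,
        # so same-tag nesting inside a role="button" element is not handled.
        if self._pending is not None and tag == self._pending[1]:
            self.actions.append((self._pending[0], "".join(self._text).strip()))
            self._pending = None


def find_actions(html: str) -> list[tuple[str, str]]:
    finder = ActionFinder()
    finder.feed(html)
    finder.close()
    return finder.actions


# Hypothetical page mixing declarative controls with a script-only one.
SAMPLE_PAGE = """
<nav><a href="/pricing">Pricing</a></nav>
<button type="button">Add to cart</button>
<div class="btn" onclick="checkout()">Checkout</div>
<div role="button">Save for later</div>
<input type="search" name="q" aria-label="Search products">
"""

if __name__ == "__main__":
    for action, label in find_actions(SAMPLE_PAGE):
        print(action, label)
```

The `<div onclick=...>` "Checkout" control never appears in the output: its purpose lives in JavaScript rather than in the markup, which is the gap that declarative tags close.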

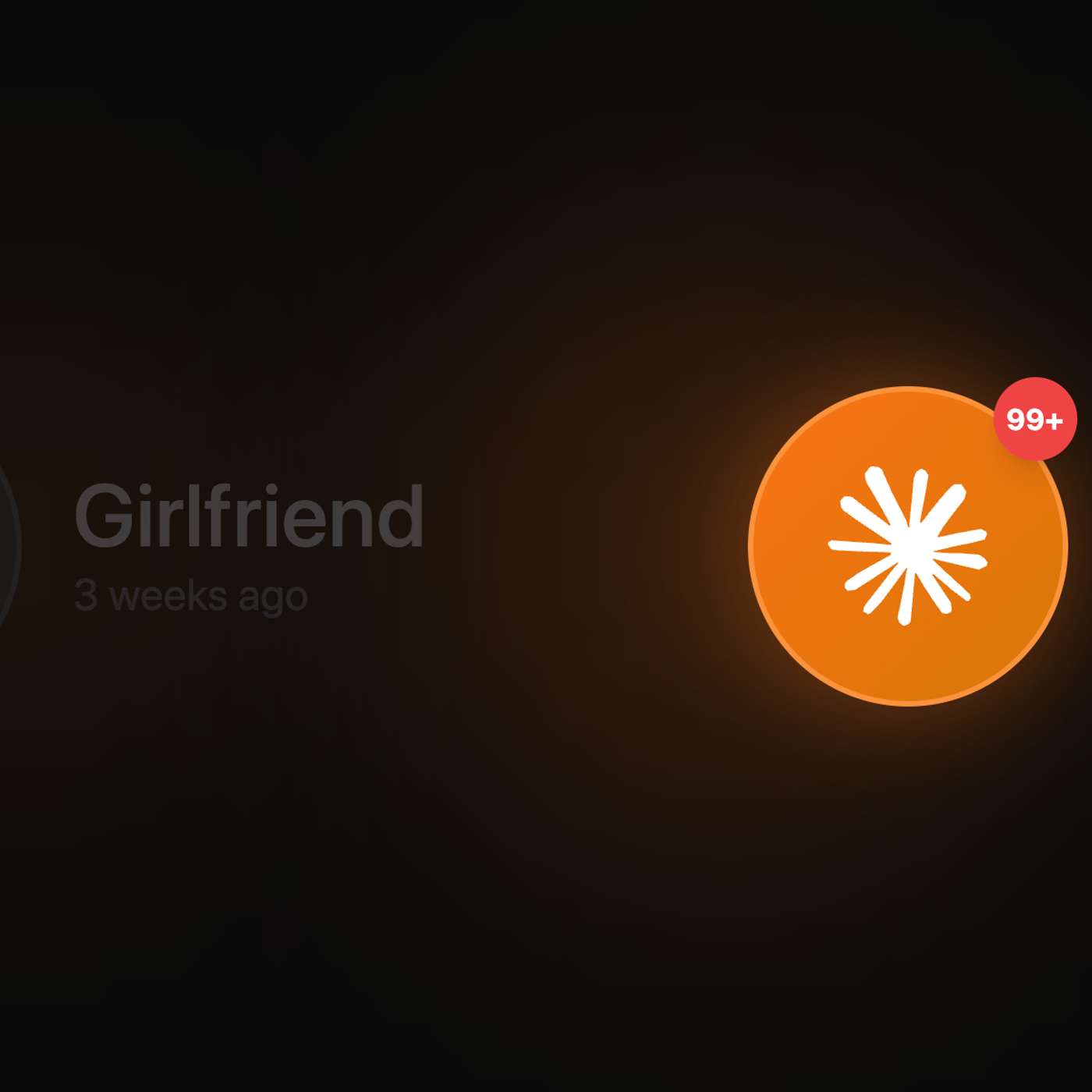

AI agents are becoming the dominant source of internet traffic, shifting the paradigm from human-centric UI to agent-friendly APIs. Developers optimizing for human users may be designing for a shrinking minority, as automated systems increasingly consume web services.

The original Semantic Web required creators to manually add structured metadata. Now, AI models extract that meaning from unstructured content, creating a machine-readable web through brute-force interpretation rather than voluntary participation.

The future of search is not linking to human-made webpages, but AI dynamically creating them. As quality content becomes an abundant commodity, search engines will compress all information into a knowledge graph. They will then construct synthetic, personalized webpage experiences to deliver the exact answer a user needs, making traditional pages redundant.

While language models are becoming incrementally better at conversation, the next significant leap in AI is defined by multimodal understanding and the ability to perform tasks, such as navigating websites. This shift from conversational prowess to agentic action marks the new frontier for a true "step change" in AI capabilities.

AI engines rely on Retrieval-Augmented Generation (RAG), which goes beyond simple keyword indexing. To be cited, your website must provide structured data (such as schema.org markup) for machines to consume, shifting the focus from content creation to data provision.
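For instance, schema.org metadata is commonly embedded in a page as a JSON-LD `<script>` block. The sketch below renders minimal `Article` markup using standard schema.org properties (`headline`, `author`, `datePublished`, `url`). The `article_jsonld` helper and the sample values are illustrative, and whether a given AI engine reads this markup when choosing what to cite is an assumption rather than something the markup guarantees.

```python
import json


def article_jsonld(headline: str, author: str, published: str, url: str) -> str:
    """Render schema.org Article metadata as a JSON-LD <script> tag (illustrative helper)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": published,  # ISO 8601 date, e.g. "2024-05-01"
        "url": url,
    }
    # Escape "</" as "<\/" (still valid JSON) so a value cannot close the <script> early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    print(article_jsonld("How RAG works", "Ada Example", "2024-05-01", "https://example.com/rag"))
```

The block goes in the page's `<head>` or `<body>`; because it is plain JSON, a crawler can read it without rendering the page.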