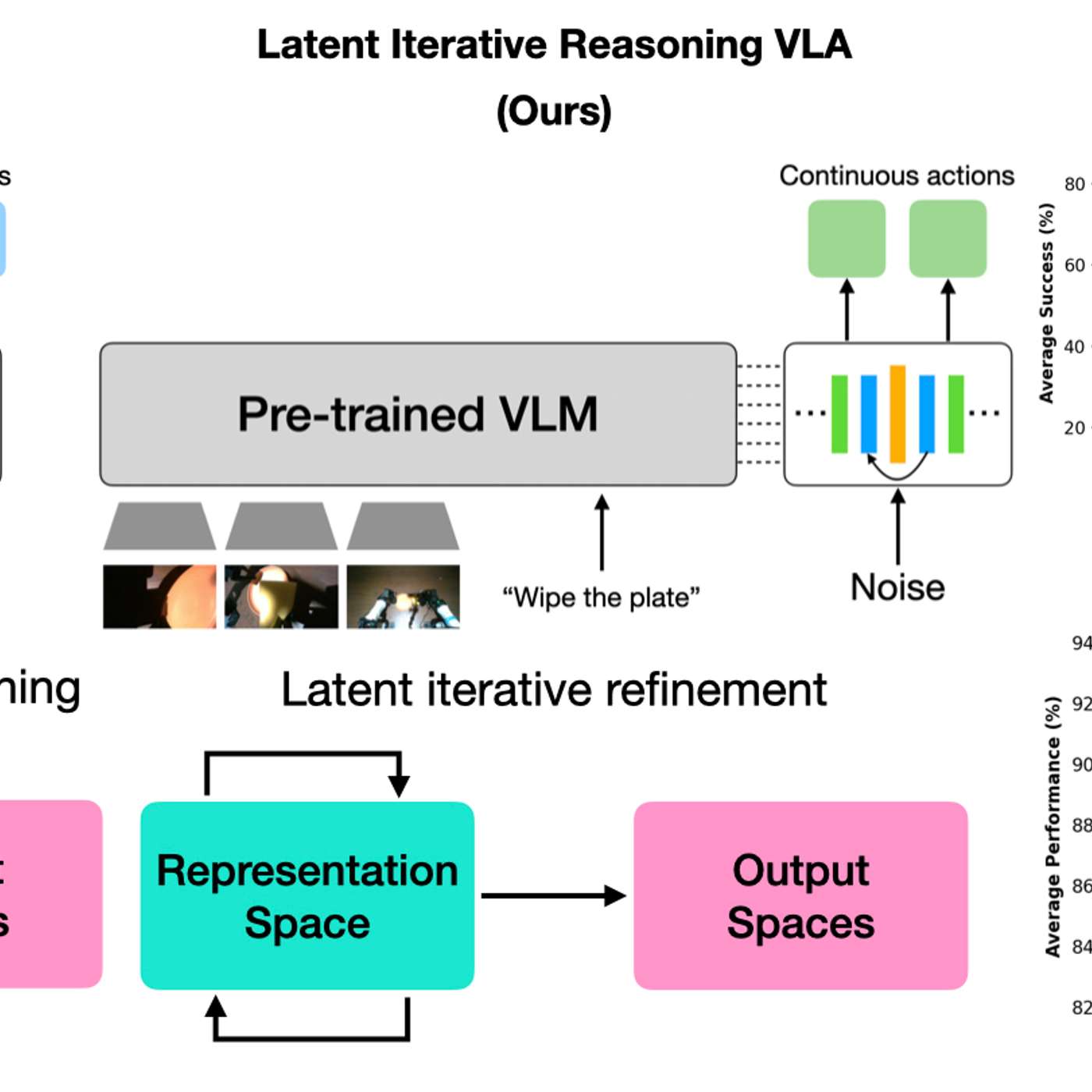

A new model architecture allows robots to vary their internal 'thinking' iterations at test time. This lets practitioners trade response speed for decision accuracy on a case-by-case basis, boosting performance on complex tasks without needing to retrain the model.
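As a rough illustration of the speed-versus-accuracy dial described above, the toy sketch below applies the same refinement step a variable number of times at inference. The `refine` and `decide` functions and the halving update rule are hypothetical stand-ins, not the architecture's actual computation. They only show that the iteration count can be chosen per call without changing any trained parameters.

```python
def refine(latent: float, observation: float) -> float:
    """One hypothetical 'thinking' step: move the latent estimate
    halfway toward the value implied by the observation."""
    return latent + 0.5 * (observation - latent)


def decide(observation: float, num_iterations: int) -> float:
    """Run the same refinement step num_iterations times.

    The step itself (the 'weights') never changes; only the
    amount of test-time computation does.
    """
    latent = 0.0  # assumed starting estimate
    for _ in range(num_iterations):
        latent = refine(latent, observation)
    return latent


# Same "model", two different compute budgets chosen per call.
fast = decide(1.0, num_iterations=2)  # lower latency, larger error
slow = decide(1.0, num_iterations=8)  # higher latency, smaller error
```

In this toy setting the remaining error halves with every extra iteration, so the caller pays a fixed cost per step for a predictable gain. A real model would not be this well behaved.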

Related Insights

While letting a robot 'think' longer improves decision accuracy in lab tests, the added latency poses a significant risk in the real world. If the environment changes while the robot is still reasoning, its final decision may be outdated and dangerous, which calls the approach's practical deployability into question.
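To make the latency constraint concrete, the sketch below computes how many thinking iterations fit inside a time budget before the decision would be considered stale. The function name, the millisecond figures, and the idea of a fixed per-iteration cost are all assumptions for illustration. The source does not describe how any real system handles this.

```python
def iterations_within_deadline(
    deadline_ms: int, per_iteration_ms: int, max_iterations: int
) -> int:
    """Return how many reasoning iterations fit in the time budget.

    All arguments are hypothetical: deadline_ms is how long the
    observation is assumed to stay valid, per_iteration_ms is an
    assumed fixed cost for one iteration.
    """
    if per_iteration_ms <= 0:
        raise ValueError("per_iteration_ms must be positive")
    affordable = max(deadline_ms, 0) // per_iteration_ms
    return min(affordable, max_iterations)


# A fast-changing scene leaves room for fewer iterations than a static one.
dynamic_scene = iterations_within_deadline(50, 8, max_iterations=16)
static_scene = iterations_within_deadline(200, 8, max_iterations=16)
```

When the budget is smaller than a single iteration, the function returns zero, which is the case the paragraph above warns about: any reasoning at all would finish after the world has moved on.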

Physical Intelligence demonstrated an emergent capability where its robotics model, after reaching a certain performance threshold, significantly improved by training on egocentric human video. This solves a major bottleneck by leveraging vast, existing video datasets instead of expensive, limited teleoperated data.

Unlike pre-programmed industrial robots, "Physical AI" systems sense their environment, make intelligent choices, and receive live feedback. This paradigm shift, similar to Waymo's self-driving cars versus simple cruise control, allows for autonomous and adaptive scientific experimentation rather than just repetitive tasks.

The adoption of powerful AI architectures like transformers in robotics was bottlenecked by data quality, not algorithmic invention. Only after data collection methods improved to capture more dexterous, high-fidelity human actions did these advanced models become effective, reversing the typical 'algorithm-first' narrative of AI progress.

The AI's ability to handle novel situations isn't just an emergent property of scale. Wayve actively trains "world models," which are internal generative simulators. This enables the AI to reason about what might happen next, leading to sophisticated behaviors like nudging into intersections or slowing in fog.

By having AI models 'think' in a hidden latent space, robots gain efficiency without generating slow, text-based reasoning. This creates a black box, making it impossible for humans to understand the robot's logic, which is a major concern for safety-critical applications where interpretability is crucial.

The key to continual learning is not just a longer context window, but a new architecture with a spectrum of memory types. "Nested learning" proposes a model with different layers that update at different frequencies—from transient working memory to persistent core knowledge—mimicking how humans learn without catastrophic forgetting.
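The idea of layers that update at different frequencies can be sketched with a toy example. The code below is not the Nested Learning method itself. The `MemoryLevel` class, the level names, the update periods, and the learning rates are all hypothetical. It only shows the general pattern of fast-changing and slow-changing state living side by side.

```python
from dataclasses import dataclass


@dataclass
class MemoryLevel:
    name: str
    update_every: int      # assumed number of steps between updates
    learning_rate: float   # assumed step size toward the new input
    value: float = 0.0
    updates: int = 0


def run(signal, levels):
    """Feed a stream of inputs to every level; each level only
    updates on steps that are a multiple of its own period."""
    for step, x in enumerate(signal, start=1):
        for level in levels:
            if step % level.update_every == 0:
                level.value += level.learning_rate * (x - level.value)
                level.updates += 1
    return levels


levels = run(
    [1.0] * 100,
    [
        MemoryLevel("working", update_every=1, learning_rate=0.5),
        MemoryLevel("episodic", update_every=10, learning_rate=0.2),
        MemoryLevel("core", update_every=50, learning_rate=0.05),
    ],
)
```

After 100 steps the "working" level has tracked the input closely, while the "core" level has barely moved. That slow drift is the property that would protect persistent knowledge from being overwritten by a short burst of new data.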

A major flaw in current AI is that models are frozen after training and don't learn from new interactions. "Nested Learning," a new technique from Google, offers a path for models to continually update, mimicking a key aspect of human intelligence and overcoming this static limitation.

A significant hurdle for AI, especially in replacing tasks like RPA, is that models are trained and then "frozen." They don't continuously learn from new interactions post-deployment. This makes them less adaptable than a true learning system.

Unlike older robots requiring precise maps and trajectory calculations, new robots use internet-scale common sense and learn motion by mimicking humans or simulations. This combination has “wiped the slate clean” for what is possible in the field.