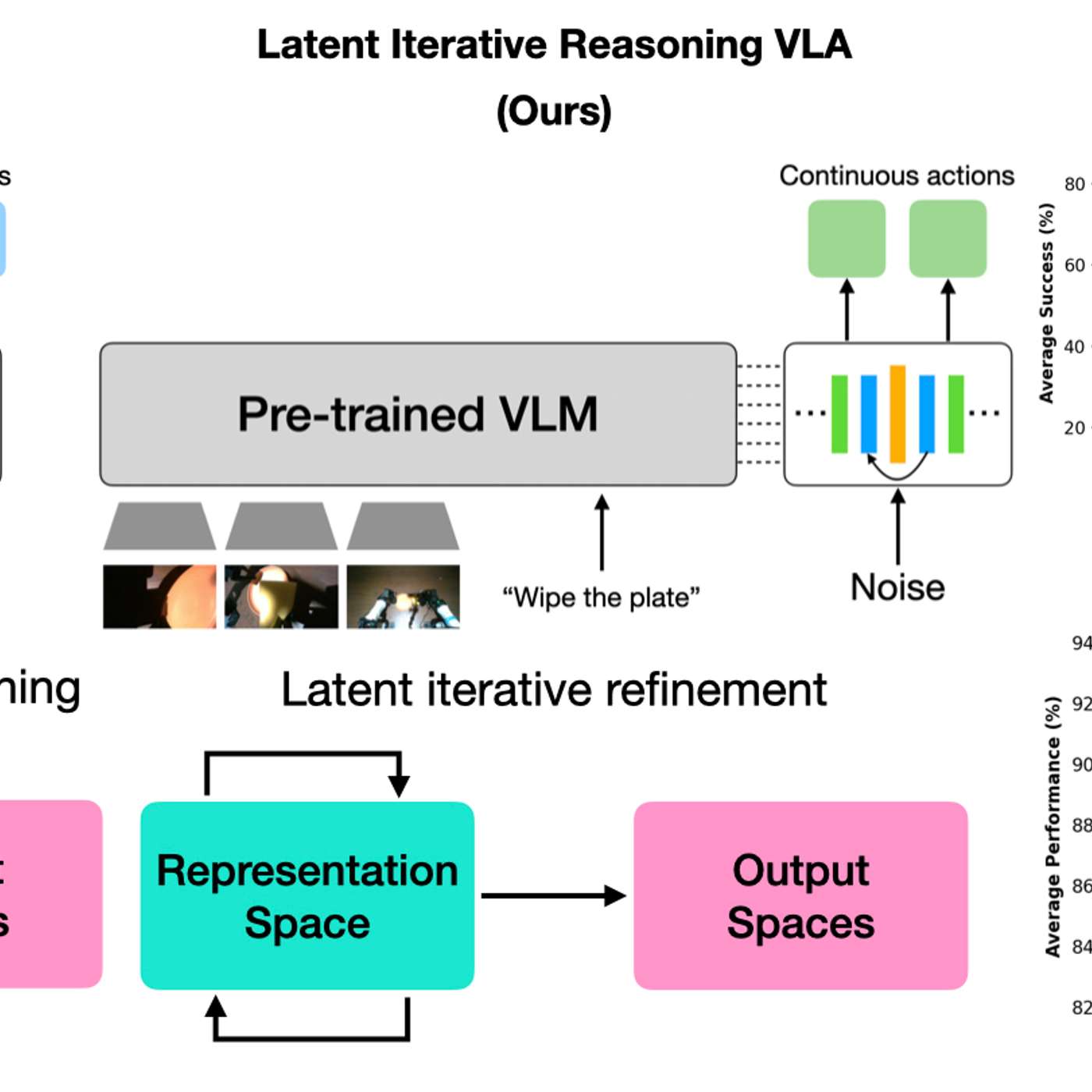

A new model architecture allows robots to vary their internal 'thinking' iterations at test time. This lets practitioners trade response speed for decision accuracy on a case-by-case basis, boosting performance on complex tasks without needing to retrain the model.
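The idea of a test-time iteration budget can be illustrated with a toy sketch. This is not the architecture's actual update rule: `refine` and `decide` are hypothetical names, and a Newton step toward a square root stands in for one learned 'thinking' step. It only shows that reapplying the same fixed step more times can yield a more accurate result with no retraining.

```python
from typing import Callable


def refine(z: float, x: float) -> float:
    """One hypothetical 'thinking' step: a Newton update toward sqrt(x).

    Stand-in for a learned latent update; the real model's step is not
    described in the source.
    """
    return 0.5 * (z + x / z)


def decide(
    x: float,
    num_iterations: int,
    step: Callable[[float, float], float] = refine,
) -> float:
    """Apply the same step num_iterations times from a fixed initial state.

    The step function (the 'weights') never changes; only the iteration
    count, chosen at test time, varies.
    """
    z = 1.0  # fixed initial latent state (assumption)
    for _ in range(num_iterations):
        z = step(z, x)
    return z


x = 2.0
fast = decide(x, num_iterations=2)  # low latency, coarser answer
slow = decide(x, num_iterations=6)  # higher latency, finer answer

err_fast = abs(fast - x ** 0.5)
err_slow = abs(slow - x ** 0.5)
print(f"2 iterations: error {err_fast:.2e}")
print(f"6 iterations: error {err_slow:.2e}")
```

In this sketch the caller picks `num_iterations` per request, which mirrors the case-by-case trade between response speed and accuracy.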

By having AI models 'think' in a hidden latent space, robots gain efficiency because they avoid generating slow, text-based reasoning. The cost is that the reasoning becomes a black box: humans cannot read the robot's logic directly, which is a major concern for safety-critical applications where interpretability is crucial.

While letting a robot 'think' longer improves decision accuracy in lab tests, the added latency poses a significant risk in the real world. If the environment changes during the robot's reasoning period, its final decision may be outdated and dangerous, which calls the approach's practical deployability into question.
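One way to reason about this risk is to cap the iteration count by how long an observation is expected to stay valid. The sketch below is an assumption, not something the source proposes: the function names, the millisecond figures, and the idea of a fixed per-iteration cost are all hypothetical.

```python
def max_iterations_within_budget(
    budget_ms: int,
    per_iteration_ms: int,
    overhead_ms: int = 0,
    cap: int = 64,
) -> int:
    """Largest iteration count that fits in the time budget.

    budget_ms: how long the observation is assumed to stay valid.
    per_iteration_ms: assumed fixed cost of one 'thinking' iteration.
    overhead_ms: assumed fixed cost of sensing and actuation.
    cap: upper bound on iterations regardless of available time.
    """
    if per_iteration_ms <= 0:
        raise ValueError("per_iteration_ms must be positive")
    usable_ms = budget_ms - overhead_ms
    if usable_ms <= 0:
        return 0  # no time to think at all; caller needs a fallback
    return min(cap, usable_ms // per_iteration_ms)


def is_stale(elapsed_ms: int, budget_ms: int) -> bool:
    """True if the decision took longer than the observation stays valid."""
    return elapsed_ms > budget_ms


# Hypothetical numbers: scene valid for 100 ms, 20 ms per iteration,
# 10 ms of fixed overhead.
iterations = max_iterations_within_budget(100, 20, overhead_ms=10)
elapsed = 10 + iterations * 20
print(iterations, is_stale(elapsed, budget_ms=100))
```

Integer milliseconds are used to avoid floating-point surprises in floor division. A real system would also need a fallback action for the case where the budget allows no iterations.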