The growth in computational demand is so relentlessly exponential that it will overwhelm all other energy needs. Even optimistic scenarios for new energy sources like fusion cannot keep pace, making energy availability the key constraint on civilizational progress for the next few decades.

Related Insights

Incremental increases in material production won't significantly move the needle on energy consumption. The next 10x in per capita energy use will be driven by two main factors: expanding aviation to billions of people and the explosive growth of AI compute, which acts as a 'per capita' increase in intelligence.

The primary bottleneck for scaling AI over the next decade may be the difficulty of bringing gigawatt-scale power online to support data centers. Smart money is already focused on this challenge, which is more complex than silicon supply.

The primary constraint on AI development is shifting from semiconductor availability to energy production. While the US has excelled at building data centers, its energy production is growing at just 2.4%, compared with 6% in China. This disparity in energy infrastructure could become the deciding factor in the global AI race.
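To make the gap between those two growth rates concrete, here is a rough sketch of how they compound. It assumes the 2.4% and 6% figures are annual rates that stay constant, which the source does not state; the 10-year horizon and the function names are illustrative choices, not part of the original claim.

```python
import math

# Assumption: both figures are constant annual growth rates.
US_RATE = 0.024
CHINA_RATE = 0.06


def growth_factor(rate, years):
    """Multiple of today's output after compounding `rate` for `years` years."""
    return (1 + rate) ** years


def doubling_time(rate):
    """Years needed for output to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + rate)


for name, rate in (("US", US_RATE), ("China", CHINA_RATE)):
    print(
        f"{name}: x{growth_factor(rate, 10):.2f} after 10 years, "
        f"doubles in about {doubling_time(rate):.0f} years"
    )
```

Under these assumptions, a decade of compounding yields roughly 1.27x today's output at 2.4% versus roughly 1.79x at 6%, and the doubling times are about 29 and 12 years respectively.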

The focus in AI has evolved from rapid software capability gains to the physical constraints of its adoption. The demand for compute power is expected to significantly outstrip supply, making infrastructure—not algorithms—the defining bottleneck for future growth.

Pat Gelsinger contends that the true constraint on AI's expansion is energy availability. He frames the issue starkly: every gigawatt of power required by a new data center is equivalent to building a new nuclear reactor, a massive physical infrastructure challenge that will limit growth more than chips or capital.

The exponential growth of AI is fundamentally constrained by Earth's land, water, and power. By moving data centers to space, companies can access near-limitless solar energy and physical area, making off-planet compute a necessary step to overcome terrestrial bottlenecks and continue scaling.

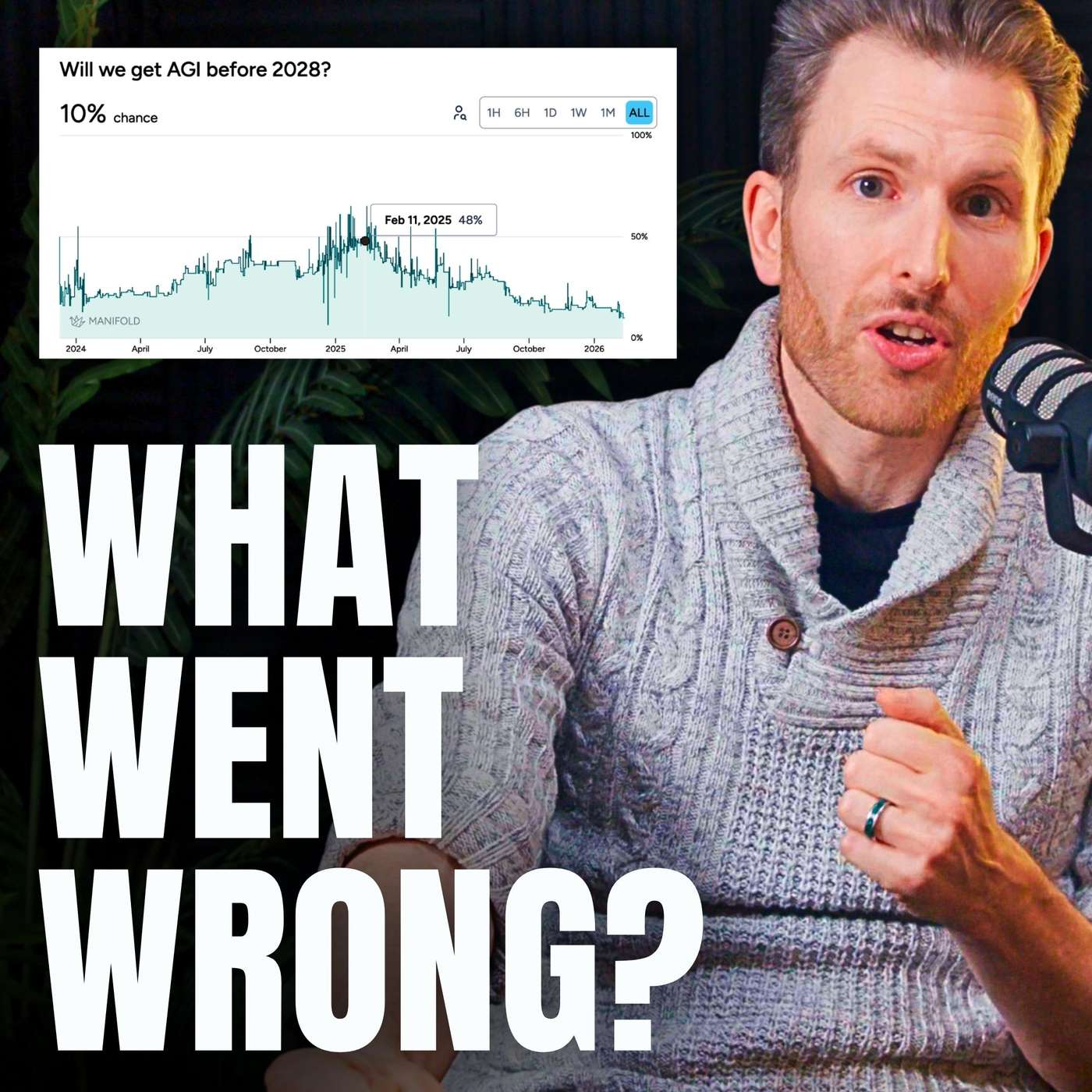

The AI industry's exponentially growing consumption of compute, electricity, and talent is unsustainable. By 2032, it will have absorbed most of the available slack from other industries. Further progress will require trillion-dollar training runs that may prove unfundable, creating a critical period for AGI development.

While chip production typically scales to meet demand, the energy required to power massive AI data centers is a more fundamental constraint. This bottleneck is creating a strategic push towards nuclear power, with tech giants building data centers near nuclear plants.

Most of the world's energy capacity build-out over the next decade was planned using old models that entirely omit the exponential power demands of AI. This creates a looming bottleneck for AI infrastructure development that is not yet priced in and will require significant new investment and planning.

As hyperscalers build massive new data centers for AI, the critical constraint is shifting from semiconductor supply to energy availability. The core challenge becomes sourcing enough power, raising new geopolitical and environmental questions that will define the next phase of the AI race.